New brain-machine interfaces that exploit the plasticity of the brain may allow people to control prosthetic devices in a natural way

Imagine a piece of technology that would let you control an apparatus simply by thinking about it. Lots of people, it turns out, have dreamed of just such a system, which for decades has fired the imaginations of scientists, engineers, and science fiction authors. It’s easy to see why: By transforming thought into action, a brain-machine interface could let paralyzed people control devices like wheelchairs, prosthetic limbs, or computers. Farther out in the future, in the realm of sci-fi writers, it’s possible to envision truly remarkable things, like brain implants that would allow people to augment their sensory, motor, and cognitive abilities.

That melding of mind and machine suddenly seemed a little less far-fetched in 1999, when John Chapin, Miguel Nicolelis, and their colleagues at the MCP Hahnemann School of Medicine, in Philadelphia, and Duke University, in Durham, N.C., reported that rats in their laboratory had controlled a simple robotic device using brain activity alone. Initially, when the animals were thirsty, they had to use their paws to press a lever, thus activating a robotic arm that brought a straw close to their mouths. But after receiving a brain implant that recorded and interpreted activity in their motor cortices, the animals could just think about pressing the lever and the robotic arm would instantly give them a sip of water.

Suddenly, a practical brain-machine interface, or BMI, seemed attainable. The implications were enormous for people who, because of paralysis caused by spinal-cord or brain damage, find it difficult or impossible to move their upper or lower limbs. In the United States alone, more than 5.5 million people suffer from such forms of paralysis, according to the Christopher and Dana Reeve Foundation.

The Hahnemann-Duke breakthrough energized the BMI field. Starting in 2000, researchers began unveiling proof-of-concept systems that demonstrated how rats, monkeys, and humans could control computer cursors and robotic prostheses in real time using brain signals. BMI systems have also revealed new ways of studying how the brain learns and adapts, which in turn have helped improve BMI design.

But despite all the advances, we are still a long way from a really dependable, sophisticated, and long-lasting BMI that could radically improve the lives of the physically disabled, let alone one that could let you see the infrared spectrum or download Wikipedia entries directly into your cerebral cortex. Researchers all over the world are still struggling to solve the most basic and critical problems, which include keeping the implants working reliably inside the brain and making them capable of controlling complex robotic prostheses that are useful for daily activities. If it is to keep its credibility, the field now needs to transform BMI systems from one-of-a-kind prototypes into clinically proven technology, like pacemakers and cochlear implants.

It’s time for a fresh approach to BMI design. In my laboratory at the University of California, Berkeley, we have zeroed in on one crucial piece of the puzzle that we feel is missing in today’s standard approach: how to make the brain adapt to a prosthetic device, assimilating it as if it were a natural part of the body. Most current research focuses on implants that tap into specific neural circuits, known as cortical motor maps. With such a system, if you want to control a prosthetic arm, you try to tap the cortical map associated with the human arm. But is that really necessary?

Our research has suggested—counterintuitive though it may seem—that to operate a robotic arm you may not need to use the cortical map that controls a person’s arm. Why not? Because that person’s brain is apparently capable of developing a dedicated neural circuit, called a motor memory, for controlling a virtual device or robotic arm in a manner similar to the way it creates such memories for countless other movements and activities in life. Much to our surprise, our experiments demonstrated that learning to control a disembodied device is, for your brain, not much different from learning to ski or to swing a tennis racket. It’s this extraordinary plasticity of the brain, we believe, that researchers should exploit to usher in a new wave of BMI discoveries that will finally deliver on the promises of this technology.

The BMI field started more than 40 years ago at the University of Washington, in Seattle. In a pioneering experiment in 1969, Eberhard Fetz implanted electrodes in the brains of monkeys to monitor the activity of neurons in the motor cortex, the part of the brain that controls movement. When a neuron fired at a certain rate, a corresponding electrode would pick up a small electrical discharge, deflecting a needle and emitting a chirp in a monitoring device. Whenever that happened, the animals would receive a treat. Crucially, the animals could see and hear the monitoring device, which gave them feedback about their neural activity. Within minutes, the monkeys learned to intentionally fire specific neurons to make the needle move, so that they could get more treats. Fetz showed that it was possible to teach the brain to control a device external to the body.

Today, BMI systems vary greatly in their designs. A major distinction is the location of the electrodes. In some, the electrodes are implanted inside the brain, where they monitor the firing of individual neurons. Other researchers work with electrocorticography (ECoG) systems, which use electrodes placed on the surface of the brain just under the skull, or electroencephalography (EEG) systems, which use electrodes that sit on the scalp. ECoG and EEG monitor the rhythmic activity created by the collective behavior of large groups of neurons [see sidebar, “Invasive vs. Noninvasive”].

In the case of electrodes inside the brain, which are the ones I study and the focus of this article, the neural signals captured by the implant are fed into a computer program called a decoder. It consists of a mathematical model that transforms the neural activity into an output, typically the movements of a computer cursor or robotic arm. To measure neural activity, researchers usually count the number of times individual neurons fire in a certain time span, known as a bin, which is usually about 100 milliseconds. In a 100-ms bin, you might record zero to a few firings. That number is called a spike count. The mathematical model that translates the spike counts of a group of neurons into movement might be a simple linear relationship. Increasingly, however, researchers are using models that are more complex and nonlinear in their translation of spike counts to movement.
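To make the idea of bins, spike counts, and a linear decoder concrete, here is a minimal Python sketch. The function names, the example spike times, and the weights are all hypothetical and chosen only for illustration; a real decoder would have its parameters computed from recorded data.

```python
def bin_spike_counts(spike_times_ms, duration_ms, bin_ms=100):
    """Count one neuron's spikes in consecutive bins (times in milliseconds)."""
    n_bins = duration_ms // bin_ms
    counts = [0] * n_bins
    for t in spike_times_ms:
        index = int(t // bin_ms)
        if 0 <= index < n_bins:  # ignore spikes outside the recording window
            counts[index] += 1
    return counts


def linear_decode(spike_counts, weights, bias):
    """Map the spike counts of one bin to outputs: bias + weights . counts.

    weights holds one row per output (for example, x and y velocity),
    with one entry per neuron in each row.
    """
    return [
        b + sum(w * c for w, c in zip(row, spike_counts))
        for row, b in zip(weights, bias)
    ]


# Two hypothetical neurons recorded for 300 ms.
neuron_a = bin_spike_counts([12, 48, 130, 260], duration_ms=300)
neuron_b = bin_spike_counts([105, 150, 199], duration_ms=300)

# Decode the second bin (100 to 200 ms) into a made-up 2-D cursor velocity.
counts_in_bin = [neuron_a[1], neuron_b[1]]
velocity = linear_decode(counts_in_bin, weights=[[0.5, -0.25], [0.0, 1.0]], bias=[0.0, 0.0])
```

Each output is just a weighted sum of the counts, which is what "a simple linear relationship" amounts to; the nonlinear models mentioned above would replace `linear_decode` with something more elaborate.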

In the traditional approach to BMI, you create a decoder by monitoring the neural activity of the areas of the brain responsible for control of the natural arm. As a first step, you’d monitor those neurons while test subjects moved their arms in predetermined ways. Next you’d take the activity of the neurons and the motion of the arms recorded during that trial and compute the decoder’s parameters. The decoder would then be able to transform the firings of the neurons into movements of the prosthetic device.
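One way to picture that calibration step is as a regression problem. The sketch below fits a linear decoder by ordinary least squares; that choice of method is an assumption made for illustration, as are the function names and the toy data, and the article does not say how any particular lab computes its parameters.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system; the recorded data are not varied enough")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x


def fit_linear_decoder(count_rows, movements):
    """Least-squares fit of movement ~ bias + weights . counts.

    count_rows: one row of spike counts per time bin (one entry per neuron).
    movements:  the arm movement measured in the same bins (one output dimension).
    Returns (weights, bias).
    """
    design = [list(row) + [1.0] for row in count_rows]  # extra column for the bias
    n = len(design[0])
    xtx = [[sum(r[i] * r[j] for r in design) for j in range(n)] for i in range(n)]
    xty = [sum(r[i] * y for r, y in zip(design, movements)) for i in range(n)]
    params = solve_linear_system(xtx, xty)
    return params[:-1], params[-1]


# Toy calibration session: two neurons, five bins, noiseless made-up movement.
counts = [(0, 1), (1, 0), (2, 3), (3, 1), (1, 2)]
arm_velocity = [2.0 * a - 1.0 * b + 0.5 for a, b in counts]
weights, bias = fit_linear_decoder(counts, arm_velocity)
```

With real recordings the fit would be noisy and would involve many more neurons and bins, but the structure is the same: record counts and movement together, then solve for the parameters that best map one to the other.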

The decoder’s function is in some sense like that of the spinal cord. The spinal cord is connected to hundreds of thousands of neurons in the brain. After these neurons fire, the spinal cord transforms that activity into a small number of signals that travel, in the case of the human arm, to about 15 muscle groups. So the spinal cord takes, say, 100 000 inputs and transforms them into about 15 outputs. The decoder does something similar, although on a much smaller scale. Current state-of-the-art BMI systems typically monitor the activity of a few dozen to a few hundred neurons and transform their firings into a small number of outputs, such as the position, velocity, and gripping force of a prosthetic device.

In the early 2000s, groups at Brown University, Arizona State University, and Duke reported more breakthroughs, demonstrating closed-loop BMI control for the first time. I was involved in the Duke study, conducted at Nicolelis’s lab, where I was a postdoctoral researcher from 2002 to 2005. In that study, we reported that two macaques were able to use brain activity alone to control a robotic arm with two articulations and a gripper (three degrees of freedom, in robotics parlance) to reach for and grasp objects. One of the key findings was that learning to control the BMI triggered plastic changes in different brain areas of the monkeys, suggesting that the brain had even greater flexibility than previously thought. Other groups at Caltech, Stanford, the University of Pittsburgh, the University of Washington, and elsewhere followed with their own studies, further advancing different aspects of BMI control. The team at Brown spun off a BMI start-up called Cyberkinetics and succeeded in getting approval from the U.S. Food and Drug Administration for clinical trials with five severely disabled patients. The trials of their device yielded promising results, and the effort continues as part of a large research consortium called BrainGate2.

A common problem in this first batch of studies was that the BMI system had to be recalibrated for every session. Subjects were able to control the computer cursor or robotic arm to perform a task, but they weren’t able to retain the skills from one session to the next. In other words, the BMI systems weren’t “plug and play.” And this limitation would become even more prominent as the complexity of the task increased. Another major obstacle soon became apparent, too: The electrodes’ ability to read brain activity would degrade over time, and the device had to be removed from the subject’s brain. With such shortcomings, a BMI system could never be practical.

Back to square one. At Berkeley, my group set out to investigate an intriguing hypothesis based on a simple question: If we’re trying to control a prosthetic device that’s completely different from a natural arm, why are we relying on brain signals related to a natural arm? If we want to control an artificial arm, we’d ideally use brain activity tailored to that specific arm. But could the brain learn to produce such activity for something that’s not even part of the body?

The answer is a resounding yes, according to a series of experiments and trials we’ve done over the past five years. In this new view of BMI design, the focus isn’t on using the existing nervous system to control an artificial device but rather on creating a new, hybrid nervous system that spans the biological and artificial components. This approach grew out of a series of experiments I carried out with Karunesh Ganguly, a postdoc in my lab. We implanted arrays of 128 tiny electrodes into the motor cortices of macaque monkeys. But unlike in previous studies, we chose to use only a subset of about 40 electrodes that provided reliable readings for several days. Another difference was that, rather than recalibrating the decoder every session, we kept it exactly the same throughout the experiment. Those differences would prove crucial for the results we would achieve.

In the experiment, the monkeys had to move a computer cursor to the center of a monitor screen and, upon seeing a circle elsewhere on the screen change color, move the cursor there. The reward was a sip of fruit juice. In an initial phase, the animals used their actual arms to operate a robotic exoskeleton and move the cursor. At the same time, we recorded brain activity from the subset of neurons. In a subsequent phase, we removed the exoskeleton and switched the experiment from manual to BMI control. In this case, the monkeys had to learn how to move the cursor with brain activity alone, irrespective of natural arm movement. And learn they did. Within a week, the monkeys had mastered the task and could repeat it proficiently day after day.

What was new here was the discovery that an animal’s brain could develop a motor map representing how to control a disembodied device—in this case, the computer cursor. In past studies, researchers didn’t keep track of a stable neuron group, and they inevitably had to recalibrate the decoder to adapt it to the new activity the neurons were producing in every session. But that recalibration resulted in a serious problem: It meant that the brain wasn’t being allowed to retain the skill learned in the previous sessions and thus wasn’t able to develop a cortical map of the prosthetic device. In our study we left the decoder unchanged, allowing the brain to assimilate the prosthetic device as if it were a new part of the body.

After two weeks, we performed two follow-up experiments with one of the monkeys. In the first, we started with a decoder that the animal had never used for BMI control. Within a short period of time, the monkey had mastered this new decoder, just as before. The interesting thing this time was that we could switch between this new decoder and an earlier decoder and the monkey’s brain would correctly choose the motor map that corresponded to whichever decoder was active. In the second experiment, we went further and created a shuffled decoder by completely scrambling its parameters. To our surprise, the animal was again able to learn this decoder and operate the computer cursor just as skillfully as before.
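The article does not describe how the decoder's parameters were scrambled. As one hypothetical reading, the sketch below randomly reassigns the per-neuron weights of a linear decoder among the neurons, so that the relationship between each neuron and the cursor no longer resembles the one computed from natural arm movements. The function name, the seed, and the example weights are assumptions for illustration only.

```python
import random


def shuffle_decoder(weights, seed=0):
    """Return a copy of the per-neuron weights in a random order.

    weights: one entry per neuron, for example an (x, y) weight pair.
    The original list is left untouched.
    """
    rng = random.Random(seed)  # fixed seed so the shuffled decoder is reproducible
    shuffled = list(weights)
    rng.shuffle(shuffled)
    return shuffled


# Hypothetical (x, y) cursor weights for five neurons.
original = [(0.5, -0.2), (0.1, 0.9), (-0.7, 0.3), (0.0, -0.4), (0.6, 0.6)]
scrambled = shuffle_decoder(original)
```

The point of such a manipulation is that the shuffled decoder, once created, stays fixed from session to session, so the brain has a stable mapping to learn even though the mapping itself is arbitrary.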

These findings highlight the brain’s remarkable plastic properties and suggest that a newly formed prosthetic motor map meets the essential properties of memory. Namely, the map remains unchanged over time and can be readily recalled: Every day the monkeys could promptly control the device; it seems to be a BMI that’s truly plug and play. The map was also resistant to interference from other maps comprising the same set of neurons—just as learning to play tennis doesn’t erase your ability to ride a bicycle.

And in our most recent study, with my students Aaron Koralek and John Long, and my colleagues Xin Jin, of the U.S. National Institutes of Health, and Rui Costa, of the Champalimaud Centre for the Unknown, in Lisbon, we went one step further. Our results showed that other brain areas that had not been explored in a BMI context—including neural circuits between the cortex and deep-brain structures such as the basal ganglia—are actually key to the learning of prosthetic skills. This means that in principle, learning how to control a prosthetic device using a BMI may feel completely natural to a person, because this learning uses the brain’s existing built-in circuits for natural motor control.

So is a truly practical BMI system at hand? Not quite. There are many challenges and obstacles, some obvious, others less so. In the obvious category are the basic parameters of the implants: They need to be tiny, use very little power, and work wirelessly. Another conspicuous challenge is the reliability of all the subsystems of a BMI, including the biophysical interface, the decoder, and also the feedback loop that allows the brain’s error-correcting mechanisms to kick in and improve performance. Each component would have to work for the user’s entire lifetime, of course.

Even after those challenges are met, there is a whole category of other obstacles involved with making the BMI system more sophisticated and capable. Ultimately, we want to build a BMI that can control not only primitive systems but also complex bionic prostheses with multiple degrees of freedom to perform dexterous tasks. And we want the BMI to be able to transmit signals from the brain to the prosthesis as well as from the prosthesis to the brain. That’s BMI’s holy grail: a system that’s part of your body in the sense that you not only control it but also feel it.

Most BMI studies today rely only on visual feedback: A monkey’s brain can form a motor memory for a given task because the animal can see how it is performing during the task and adjust its brain activity to improve its performance. But when we learn a task—riding a bicycle, playing tennis, typing on a keyboard—we’re not using just visual feedback; we’re also relying on our tactile senses and our proprioceptive system, which uses receptors on muscles and joints to tell you where a certain part of your body is in space. Could BMI systems do something similar? Could you use tactile and position information from a robotic gripper and stimulate the brain to allow a user to find a glass of water on a nightstand in the dark, for example?

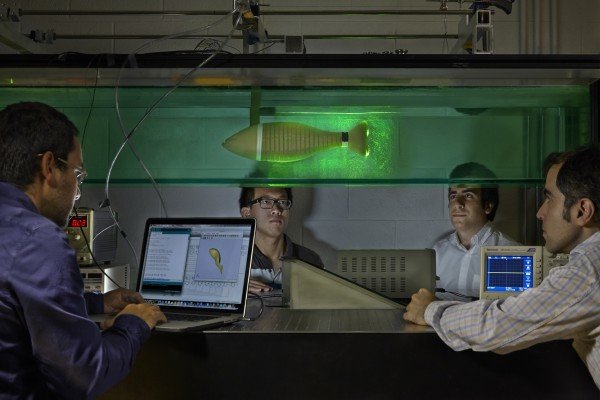

We’ve begun trying to answer that question in our lab, and we’ve had some promising results already. We’re using a technique known as intracortical electrical microstimulation to attempt to induce tactile sensations. In a recent study, Subramaniam Venkatraman, a graduate student in my lab, used rats with electrodes implanted in their sensory cortices. He placed the rats in a cage and used a motion-tracking system to precisely monitor the position of one of their whiskers in real time. When the whisker hit a virtual target—a line located at one of various possible positions—the BMI system delivered a precise pulse of stimulation into the rat’s cortex, giving the animal the illusion of touching an object. Whenever the animal was able to consecutively hit the correct target four times, it received a drop of fruit juice as a reward.
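The logic of that closed loop can be sketched in a few lines of Python. Everything specific here is hypothetical: the class and method names, the use of an angle to describe whisker position, the tolerance around the target, and the choice to reset the streak after a reward are assumptions for illustration, not details of the actual experiment.

```python
class VirtualTargetTask:
    """Toy model of a closed loop: stimulate on contact, reward after a streak."""

    def __init__(self, target_angle, tolerance=2.0, hits_for_reward=4):
        self.target_angle = target_angle
        self.tolerance = tolerance
        self.hits_for_reward = hits_for_reward
        self.streak = 0  # consecutive correct hits so far

    def should_stimulate(self, whisker_angle):
        """True when the tracked whisker is at the virtual target."""
        return abs(whisker_angle - self.target_angle) <= self.tolerance

    def record_trial(self, hit_correct_target):
        """Update the streak; return True when a reward is due."""
        if hit_correct_target:
            self.streak += 1
        else:
            self.streak = 0
        if self.streak >= self.hits_for_reward:
            self.streak = 0  # assumed: start counting again after each reward
            return True
        return False


task = VirtualTargetTask(target_angle=30.0)
stimulate_now = task.should_stimulate(31.0)
rewards = [task.record_trial(hit) for hit in [True, True, False, True, True, True, True]]
```

In this example a miss on the third trial resets the count, so the reward arrives only on the seventh trial, after four correct hits in a row.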

The study demonstrated that the rats were able to combine natural signals from their proprioceptive system with artificially delivered tactile stimuli to encode object location. This result suggests that in addition to readout functions, we can also perform write-in operations to the brain, a capability that will be useful in providing feedback for future users of neuroprostheses. In fact, Nicolelis and his team at Duke recently described an experiment in which monkeys could control a computer with their minds and also apparently “feel” the texture of virtual objects. The interface was both extracting and sending signals to the brain—a bidirectional BMI.

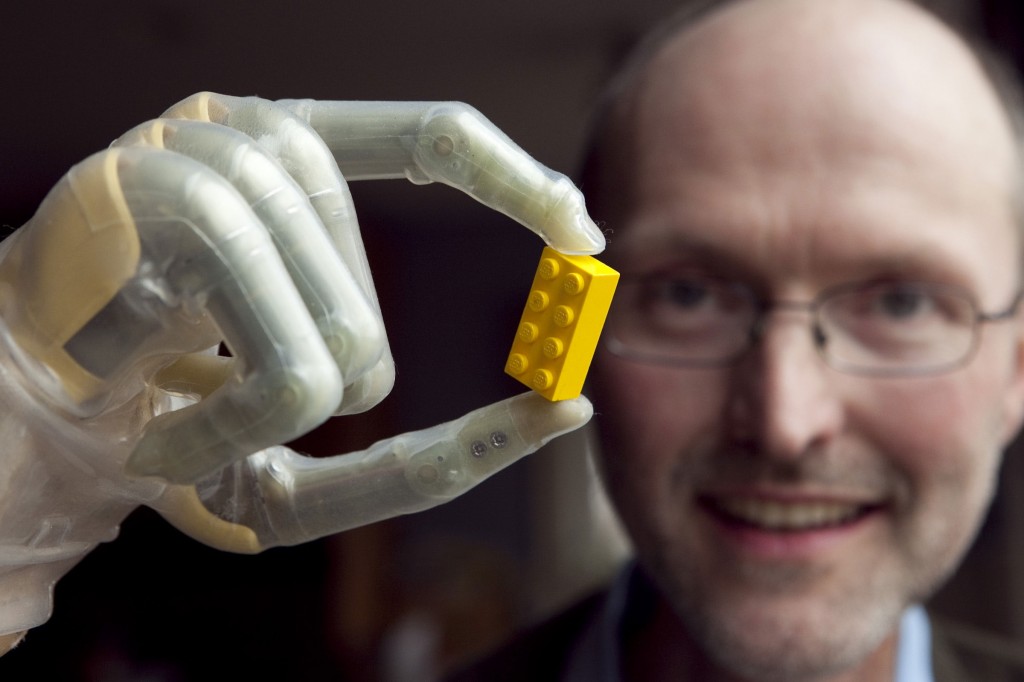

Finally, BMI research also needs advances in the prostheses themselves. Progress in biomechatronics, particularly in compact sensor, actuator, and energy-storage technologies, will play a big role in the development of these systems. The good news is we’re already seeing major progress in this realm: Take Dean Kamen’s Deka Research and Development Corp., which built a robotic arm so advanced that it was nicknamed the “Luke arm,” after the remarkably lifelike prosthetic worn by Luke Skywalker in Star Wars.

BMI research is entering a new phase—call it BMI 2.0—thanks to work at universities, companies, and medical centers all over the world. (These include a recently launched Center for Neural Engineering and Prostheses, which I codirect, based at Berkeley and at the University of California, San Francisco, and which will focus on motor and speech prostheses.) There is a palpable sense that we are quite close to cracking several of the fundamental problems standing in the way of clinical and commercial use of BMI systems.

The initial applications of BMI, in helping patients suffering from paralysis due to spinal cord injury or other neurological disorders, including amyotrophic lateral sclerosis and stroke, are still probably a decade or two away. But after this technology becomes mainstream in health care, other realms await in the augmentation of sensory, motor, and cognitive capabilities in healthy subjects—a fascinating possibility for sure, but one that promises to unleash a big ethical debate. The world where we’re able to do a Google search or drive a car just by thinking will be a very different place. But that’s a BMI 3.0 story.

Story Source:

The above story is reprinted from materials provided by IEEE Spectrum, Jose M. Carmena