Interdisciplinary consortium aims to develop intelligent adaptive storage systems

Credit: ill./©: ADMIRE project

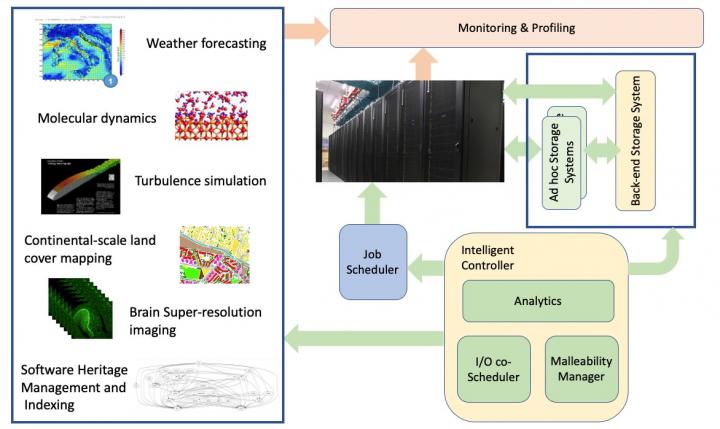

Fourteen institutions from six European countries are developing a new adaptive storage system for high-performance computing within the ADMIRE project. Their aim is to significantly improve the runtime of applications in fields such as weather forecasting, molecular dynamics, turbulence modeling, cartography, brain research, and software cataloging. Coordinated by the University Carlos III of Madrid (UC3M), the project is being funded by the European High-Performance Computing Joint Undertaking (EuroHPC JU) and the participating states.

Processing vast amounts of data, as is often required for artificial intelligence, is one of the driving forces behind the development of high-performance computers. This trend is calling into question the traditional design, which focused mainly on computationally intensive tasks. The flat storage hierarchies of traditional systems, built around a central parallel file system, are proving increasingly inadequate. New technologies providing fast, non-volatile, and at the same time energy-efficient bulk storage open up opportunities to meet the needs of data-intensive applications. However, the control mechanisms for the available resources and the specifically tailored file systems needed to realize this potential are currently lacking. Solving this challenge is the main goal of the European ADMIRE project.

The ADMIRE software stack and applications

Four German institutions are playing major roles in the project and will work on various components of the overall ADMIRE system over the next three years.

* Johannes Gutenberg University Mainz (JGU):

Researchers at JGU are focusing on so-called ad hoc storage systems, which the overall ADMIRE system will control when applications require them. Ad hoc storage systems reduce the load on the central parallel file system while allowing considerably higher data and metadata throughput, and can therefore help meet the I/O requirements of a wide variety of applications. JGU’s contribution will center around the GekkoFS file system, which was developed in previous projects. In the ADMIRE project, GekkoFS will be extended to meet the needs of modern high-performance computing applications, including their semantic and consistency requirements and their I/O access patterns. As a result, the file system will be able to provide the best possible I/O performance and react dynamically to decisions made by the ADMIRE system.

* Technical University of Darmstadt:

TU Darmstadt develops algorithms and tools that adjust the resources used by a program during runtime so that both the runtime of individual programs and the processing capacity of the overall system are optimized. Extra-P, a performance modeling tool created by TU Darmstadt during previous projects, will be extended as part of ADMIRE to support I/O modeling.

* Forschungszentrum Jülich:

The Jülich Supercomputing Center (JSC) at Forschungszentrum Jülich develops scalable and efficient processing workflows for Earth Observation (EO) applications based on machine learning and deep learning methods. As its contribution to ADMIRE, the JSC will optimize the I/O performance of entire processing pipelines, which will then be able to automatically update existing land use maps by analyzing series of multi-spectral satellite images captured in real time. The new ADMIRE software stack will speed up access to and analysis of data in all processing stages, from data ingestion to end products. The JSC aims to provide efficient EO processing workflows that can process huge amounts of remote sensing data from multiple sources in operational scenarios, giving decision-makers clear-cut, up-to-date, and useful information.

* The Max Planck Computing and Data Facility (MPCDF):

The MPCDF is developing in-situ methods by which data can be processed (e.g., compressed) and analyzed while a simulation is still running. This means that many tasks that are usually performed only after a simulation has finished and the data has been saved to the file system can instead be carried out on active data still in main memory, thereby significantly reducing the amount of data that is eventually stored. In-situ methods were originally developed for visualization applications but will be adapted for more general use in the course of the ADMIRE project, the objective being to facilitate the use of artificial intelligence and other data analysis techniques.
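
The in-situ idea described above can be illustrated with a minimal Python sketch. It is not ADMIRE or MPCDF code: the function names `simulate_step`, `in_situ_reduce`, and `run`, the synthetic sine-wave field, and the choice of subsampling plus zlib compression are all assumptions made purely for illustration. The sketch reduces each time step while it is still in memory and only counts the reduced bytes as "stored".

```python
import math
import struct
import zlib


def simulate_step(step, n=4096):
    """Hypothetical stand-in for one simulation time step: a smooth 1-D field."""
    return [math.sin(0.01 * i + 0.1 * step) for i in range(n)]


def in_situ_reduce(field, stride=8, digits=3):
    """Reduce a field while it is still in memory (lossy), then compress it.

    Returns a small statistical summary and the compressed bytes that
    would be written to storage instead of the full field.
    """
    summary = {
        "min": min(field),
        "max": max(field),
        "mean": sum(field) / len(field),
    }
    # Keep every `stride`-th value and round it: an assumed, lossy reduction.
    sampled = [round(v, digits) for v in field[::stride]]
    raw = struct.pack(f"<{len(sampled)}d", *sampled)
    return summary, zlib.compress(raw, 6)


def run(steps=5, n=4096):
    """Compare bytes a post-hoc workflow would store with the in-situ output."""
    raw_bytes = 0
    stored_bytes = 0
    summaries = []
    for step in range(steps):
        field = simulate_step(step, n)
        raw_bytes += 8 * len(field)  # full field as 64-bit floats
        summary, blob = in_situ_reduce(field)
        stored_bytes += len(blob)    # only the reduced data is kept
        summaries.append(summary)
    return raw_bytes, stored_bytes, summaries


if __name__ == "__main__":
    raw, stored, _ = run()
    print(f"full output: {raw} bytes, in-situ output: {stored} bytes")
```

In a real workflow the reduction step would be chosen to suit the science case; the sketch only shows where in the loop such processing takes place.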

About ADMIRE

ADMIRE is an EU-funded project. It was launched on April 1, 2021, and has been granted a budget of EUR 7.9 million over three years. Coordinated by the University Carlos III of Madrid (Spain), the multidisciplinary collaborative research team consists of members from the Barcelona Supercomputing Center (Spain), Johannes Gutenberg University Mainz (Germany), the Technical University of Darmstadt (Germany), the Max Planck Society (Germany), Forschungszentrum Jülich GmbH (Germany), DataDirect Networks (France), Paratools (France), Institut national de recherche en sciences et technologies du numérique (France), Consorzio Interuniversitario Nazionale per l’Informatica (Italy), CINECA Consorzio Interuniversitario (Italy), E4 Computer Engineering SpA (Italy), Instytut Chemii Bioorganicznej Polskiej Akademii Nauk (Poland), and Kungliga Tekniska högskolan (Sweden).

The ADMIRE project has received funding from the European High-Performance Computing Joint Undertaking (JU) under grant agreement No. 956748. The JU is supported by the European Union’s Horizon 2020 research and innovation program and the participating countries of Spain, Germany, France, Italy, Poland, and Sweden.

Related link:

https:/

###

Media Contact

Vera Lejsek


Original Source

https:/