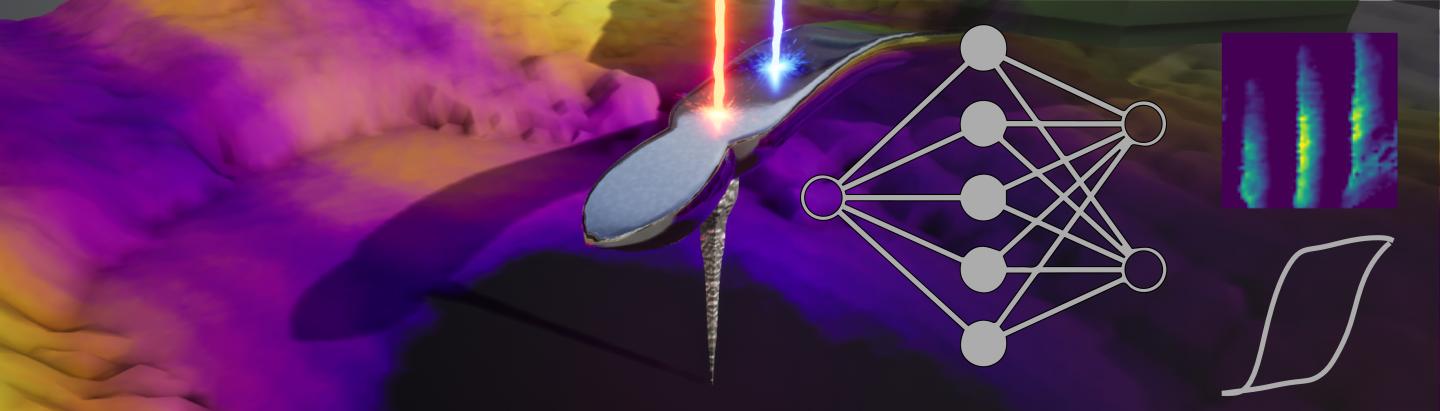

Credit: Joshua C. Agar and Joshua Willey

Innovations in materials science are as essential to modern life as indoor plumbing – and go about as unnoticed.

For example, innovations in semiconducting devices continue to enable the transmission of more information, faster and through smaller hardware – such as through a device that fits in the palms of our hands.

Improvements in imaging techniques have made it possible to collect mounds of data about the properties of the nanomaterials used in such devices. (One nanometer is one billionth of a meter. For scale, a strand of human hair is between 50,000 and 100,000 nanometers thick.)

“The challenge is that analytical approaches that produce human-interpretable data remain ill-equipped for the complexity and magnitude of the data,” says Joshua Agar, assistant professor of materials science at Lehigh University. “Only an infinitesimally small fraction of the data collected is translated into knowledge.”

Agar studies nanoscale ferroelectrics, which are materials that exhibit spontaneous electric polarization – as a result of small shifts in charged atoms – that can be reversed by the application of an external electric field. Despite promising applications in next-generation low-power information storage/computation, energy efficiency via harvesting waste energy, environmentally-friendly solid-state cooling and much more, a number of issues still need to be solved for ferroelectrics to reach their full potential.

Agar uses a multimodal hyperspectral imaging technique – available through the user program at the Center for Nanophase Materials Sciences at Oak Ridge National Laboratory – called band-excitation piezoresponse force microscopy, which measures the mechanical properties of the materials as they respond to electrical stimuli. These so-called in situ characterization techniques allow for the direct observation of nanoscale processes in action.

“Our experiments involve touching the material with a cantilever and measuring the material’s properties as we drive it with an electrical field,” says Agar. “Essentially, we go to every single pixel and measure the response of a very small region of the material as we drive it through transformations.”

The technique yields vast amounts of information about how the material is responding and the kinds of processes that are happening as it transitions between different states, explains Agar.

“You get this map for every pixel with many spectra and different responses,” says Agar. “All this information comes out at once with this technique. The problem is how do you actually figure out what’s going on because the data is not clean – it’s noisy.”

Agar and his colleagues have developed an artificial intelligence (AI) technique that uses deep neural networks to learn from the massive amounts of data generated by their experiments and extract useful information. Applying this method, he and his team have identified – and visualized for the first time – geometrically-driven differences in ferroelectric domain switching.

The technique, and how it was used to make this discovery, has been described in an article published today in Nature Communications called “Revealing Ferroelectric Switching Character Using Deep Recurrent Neural Networks.” Additional authors include researchers from the University of California, Berkeley; Lawrence Berkeley National Laboratory; the University of Texas at Arlington; Pennsylvania State University, University Park; and the Center for Nanophase Materials Sciences at Oak Ridge National Laboratory.

The team is among the first in the materials science field to publish a paper via open source software designed to enable interactive computing. The paper, along with the code, is available as a Jupyter Notebook, which runs on Google Colaboratory, a free cloud computing service. Any researcher can access the paper and the code, test out the method, modify parameters and even try it on their own data. By sharing data, analysis code and descriptions, Agar hopes the approach will be adopted by communities beyond those who use this hyperspectral characterization technique at the Center for Nanophase Materials Sciences at Oak Ridge National Laboratory.

According to Agar, the neural network approach could have broad applications: “It could be used in electron microscopy, in scanning tunneling microscopy and even in aerial photography,” says Agar. “It crosses boundaries.”

In fact, the neural network technique grew out of work Agar did with Joshua Bloom, Professor of Astronomy at Berkeley, which was previously published in Nature Astronomy. Agar adapted the technique and applied it to materials.

“My astronomy colleague was surveying the night sky, looking at different stars and trying to classify what type of star they are based on their light intensity profiles,” says Agar.

Using a neural network approach to analyze hyperspectral imaging data

Applying the neural network technique, which uses models drawn from natural language processing, Agar and his colleagues were able to directly image and visualize an important subtlety in the switching of a classical ferroelectric material, lead zirconium titanate, something that had never been done before.

When the material switches its polarization state under an external electrical field, explains Agar, it forms a domain wall, or a boundary between two different orientations of polarization. Depending on the geometry, charges can then accumulate at that boundary. The modular conductivity at these domain wall interfaces is key to the material’s strong potential for use in transistors and memory devices.

“What we are detecting here from a physics perspective is the formation of different types of domain walls that are either charged or uncharged, depending on the geometry,” says Agar.

According to Agar, this discovery would not have been possible using more primitive machine learning approaches, as those techniques tend to use linear models to identify linear correlations. Such models cannot efficiently deal with structured data or make the complex correlations needed to understand the data generated by hyperspectral imaging.
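The limitation of linear correlation can be illustrated with a toy example. The sketch below is not the team's code or data; the function name `pearson` and the synthetic signals are assumptions made purely for illustration. It computes the Pearson correlation coefficient, a standard measure of linear association, for a linear response and for a quadratic response to the same input.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient: measures linear association only."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

# Hypothetical input values, symmetric around zero (illustration only).
xs = [i / 10 for i in range(-50, 51)]

# A response that depends linearly on the input.
linear_response = [2 * x + 1 for x in xs]

# A response that is fully determined by the input, but nonlinearly.
quadratic_response = [x * x for x in xs]

r_linear = pearson(xs, linear_response)        # close to 1
r_quadratic = pearson(xs, quadratic_response)  # close to 0

print(f"linear response:    r = {r_linear:.3f}")
print(f"quadratic response: r = {r_quadratic:.3f}")
```

The quadratic response is completely determined by the input, yet a purely linear measure reports almost no relationship. This is the kind of structure that nonlinear models such as neural networks are better suited to capture.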

There is a black box nature to the type of neural network Agar has developed. The method works through a stacking of individual math components into complex architectures. The system then optimizes itself by “chugging through the data over and over again until it identifies what’s important.”

Agar then creates a simple, low dimensional representation of that model with fewer parameters.

“To interpret the output I might ask: ‘What 10 parameters are most important to define all the features in the dataset?’” says Agar. “And then I can visualize how those 10 parameters affect the response and, by using that information, identify important features.”

The nano-human interface

Agar’s work on this project was partially supported by a TRIPODS+X grant, a National Science Foundation award program supporting collaborative teams to bring new perspectives to bear on complex and entrenched data science problems.

The work is also part of Lehigh’s Nano/Human Interface Presidential Engineering Research Initiative. This multidisciplinary initiative, funded by a $3-million institutional investment, proposes to develop a human-machine interface that will improve the ability to visualize and interpret the vast amounts of data generated by scientific research. The initiative aims to change the way human beings harness and interact with data and with the instruments of scientific discovery, eventually creating representations that are easy for humans to interpret and visualize.

“This tool could be one approach because, once trained, a neural network system can evaluate a new piece of data very fast,” says Agar. “It could make it possible to take very large data streams and process them on the fly. Once processed, the data can be shared with someone in a way that is interpretable, turning that large data stream into actionable information.”

###

Media Contact

Lori Friedman

610-758-3224

Related Journal Article

http://dx.