Revealing the relationship between attentional states and emotions from human pupillary reactions

Credit: COPYRIGHT (C) TOYOHASHI UNIVERSITY OF TECHNOLOGY. ALL RIGHTS RESERVED.

Overview

A research team from the Department of Computer Science and Engineering and the Electronics-Inspired Interdisciplinary Research Institute at Toyohashi University of Technology has found that the relationship between attentional states in response to pictures and sounds and the emotions they elicit may differ between visual and auditory perception. The result was obtained by measuring pupillary reactions, which are related to human emotions. It suggests that visual perception elicits emotions in all attentional states, whereas auditory perception elicits emotions only when attention is paid to the sounds.

Details

In our daily lives, our emotions are often elicited by the information we receive through visual and auditory perception. Accordingly, many studies to date have investigated human emotional processing using emotional stimuli such as pictures and sounds. However, it has not been clear whether such emotional processing differs between visual and auditory perception.

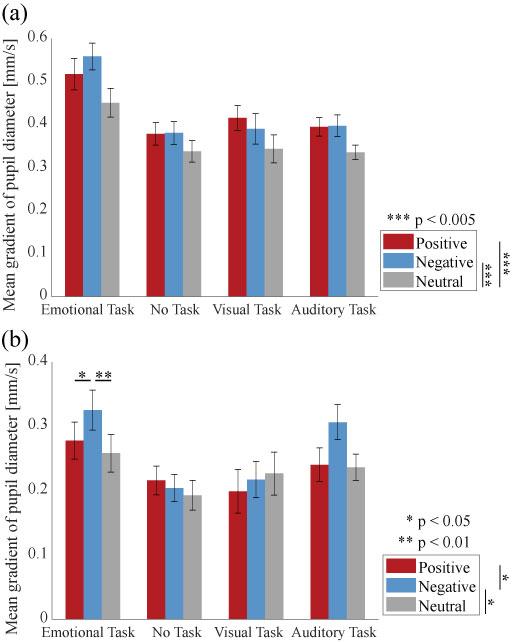

Our research team asked participants to perform four tasks designed to place them in various attentional states while they were presented with emotionally arousing pictures and sounds, in order to investigate how emotional responses differ between visual and auditory perception. We also compared pupillary responses, obtained from eye movement measurements, as a physiological indicator of emotional responses. The results showed that visual perception (pictures) elicited emotions during all tasks, whereas auditory perception (sounds) did so only during tasks in which attention was paid to the sounds. These results suggest that the relationship between attentional states and emotional responses differs between visual and auditory stimuli.

“Traditionally, subjective questionnaires have been the most common method for assessing emotional states. However, in this study, we wanted to extract emotional states while some kind of task was being performed. We therefore focused on pupillary response, which is receiving a lot of attention as one of the biological signals that reflect cognitive states. Although many studies have reported on attentional states during emotional arousal caused by visual and auditory perception, no previous study has compared these states across senses, and this is the first attempt,” explains the lead author, Satoshi Nakakoga, a Ph.D. student.

In addition, Professor Tetsuto Minami, the leader of the research team, said, “There are more opportunities to come into contact with various visual media via smartphones and other devices and to evoke emotions through that visual and auditory information. We will continue investigating sensory perception that elicits emotions, including the effects of elicited emotions on human behavior.”

Future Outlook

Based on the results of this research, our research team suggests the possibility of a new method of emotion regulation in which the emotional responses elicited by one sense are promoted or suppressed by stimuli from another sense. Ultimately, we hope to establish this new method of emotion regulation to help treat psychiatric disorders such as panic and mood disorders.

###

Reference

Nakakoga, S., Higashi, H., Muramatsu, J., Nakauchi, S., & Minami, T. (2020). Asymmetrical characteristics of emotional responses to pictures and sounds: Evidence from pupillometry. PLoS ONE, 15(4), e0230775.

doi: 10.1371/journal.pone.0230775

Media Contact

Yuko Ito


Related Journal Article

http://dx.