UNIGE scientists have tracked the eye movements of children to show how they make the link – spontaneously and without instructions – between a vocal emotion (happiness or anger) and the natural or virtual face that follows it.

Credit: UNIGE

Do children have to wait until age 8 to recognise – spontaneously and without instructions – the same emotion of happiness or anger, whether it is expressed by a voice or on a face? A team of scientists from the University of Geneva (UNIGE) and the Swiss Centre for Affective Sciences (CISA) has provided an initial response to this question. They compared the ability of children aged 5, 8 and 10 years and of adults to make a spontaneous link between a heard voice (expressing happiness or anger) and the corresponding emotional expression on a natural or virtual face (also expressing happiness or anger). The results, published in the journal Emotion, demonstrate that children from the age of 8 look at a happy face for longer if they have previously heard a happy voice. These visual preferences for congruent emotion reflect a child’s ability for the spontaneous amodal coding of emotions, i.e. coding that is independent of perceptual modality (auditory or visual).

Emotions are an integral part of our lives and influence our behaviour, perceptions and day-to-day decisions. The spontaneous amodal coding of emotions – i.e. independently of perceptual modalities and, therefore, the physical characteristics of faces or voices – is easy for adults, but how does the same capacity develop in children?

In an attempt to answer this question, researchers from the Faculty of Psychology and Educational Sciences – together with members of the Swiss Centre for Affective Sciences – led by Professor Edouard Gentaz, studied the development of the capacity to establish links between vocal emotion and the emotion conveyed by a natural or artificial face in children aged 5, 8 and 10 years, as well as in adults. Unlike more conventional studies that rely on instructions (generally verbal in nature), this research did not call on the language skills of children. It is a promising new method that could be used to assess capacities in children with disabilities or with language and communication disorders.

Exposed for 10 seconds to two emotional faces

The research team employed an experimental paradigm originally designed for use with babies, a task known as emotional intermodal transfer. The children were exposed to emotional voices and faces expressing happiness and anger. In the first phase, devoted to auditory familiarisation, each participant sat facing a black screen and listened to three voices – neutral, happy and angry – for 20 seconds. In the second phase, devoted to visual discrimination and lasting 10 seconds, the same individual was exposed to two emotional faces, one expressing happiness and the other anger, so that one facial expression corresponded to the voice and the other did not.

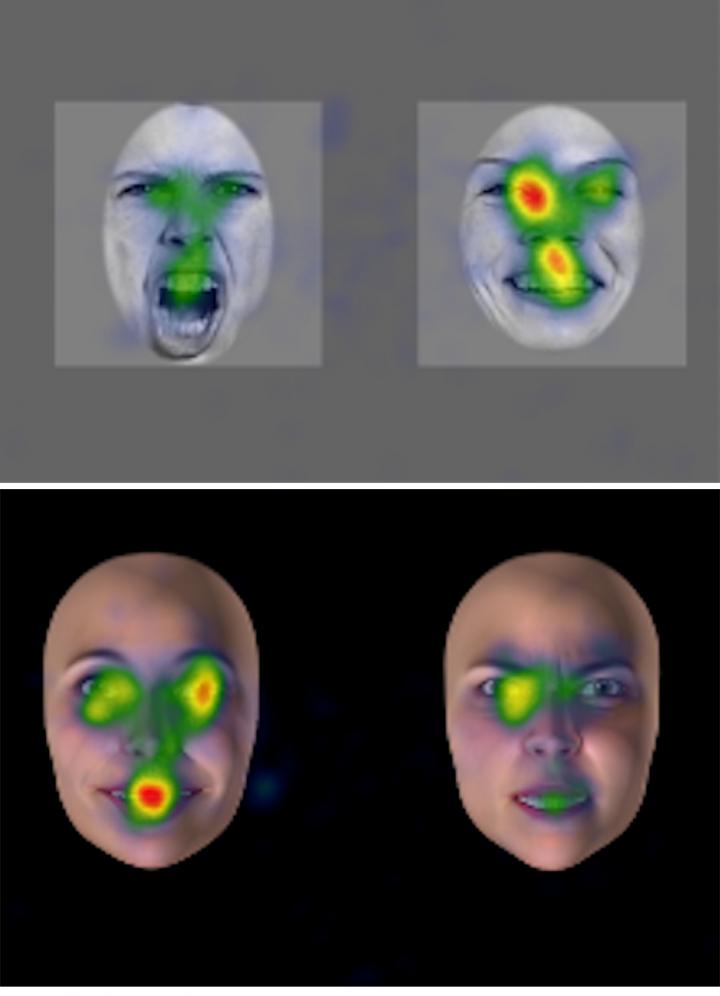

The scientists used eye-tracking technology to measure precisely the eye movements of 80 participants. They were then able to determine whether the time spent looking at one or other of the emotional faces – or at particular areas of the natural or virtual face (the mouth or eyes) – varied according to the voice heard. The use of a virtual face, produced with CISA’s FACSGen software, gave greater control over the emotional characteristics compared to a natural face. “If the participants made the connection between the emotion in the voice they heard and the emotion expressed by the face they saw, we can assume that they recognise and code the emotion in an amodal manner, i.e. independently of perceptual modalities”, explains Amaya Palama, a researcher in the Laboratory of Sensorimotor, Affective and Social Development in the Faculty of Psychology and Educational Sciences at UNIGE.

The results show that after a control phase (without a voice or with a neutral voice), there is no difference in visual preference between the happy and angry faces. After the emotional voices (happiness or anger), by contrast, participants looked for longer at the face (natural or virtual) congruent with the voice. More specifically, the results showed a spontaneous transfer of the emotional voice of joy, with a preference for the congruent face of joy, from the age of 8, and a spontaneous transfer of the emotional voice of anger, with a preference for the congruent face of anger, from the age of 10.

Revealing unsuspected abilities

These results suggest a spontaneous amodal coding of emotions. The research was part of a project designed to study the development of emotional discrimination capacities in childhood, for which Professor Gentaz obtained funding from the Swiss National Science Foundation (SNSF). Current and future research is seeking to establish whether this task is suitable for revealing unsuspected abilities to understand emotions in children with multiple disabilities, who are unable to understand verbal instructions or produce verbal responses.

###

Media Contact

Edouard Gentaz
