Researchers propose a novel auditory-perception-based feature for extracting emotions from human speech using neural networks

Credit: Masashi Unoki

Ishikawa, Japan – Human beings have the ability to recognize emotions in others, but the same cannot be said for robots. Although perfectly capable of communicating with humans through speech, robots and virtual agents are only good at processing logical instructions, which greatly restricts human-robot interaction (HRI). Consequently, a great deal of research in HRI is about emotion recognition from speech. But first, how do we describe emotions?

Categorical emotions such as happiness, sadness, and anger are well understood by humans but can be hard for robots to register. Researchers have instead focused on “dimensional emotions,” which describe the gradual emotional transitions found in natural speech. “Continuous dimensional emotion can help a robot capture the time dynamics of a speaker’s emotional state and accordingly adjust its manner of interaction and content in real time,” explains Prof. Masashi Unoki from Japan Advanced Institute of Science and Technology (JAIST), who works on speech recognition and processing.

Studies have shown that an auditory perception model simulating the workings of the human ear can generate what are called “temporal modulation cues,” which faithfully capture the time dynamics of dimensional emotions. Neural networks can then be employed to extract features from these cues that reflect these time dynamics. However, because auditory perception models are complex and varied, the feature extraction step turns out to be quite challenging.
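To make the idea of a temporal modulation cue more concrete, here is a minimal sketch in plain Python. It is not the auditory model used in the study: the rectify-and-smooth envelope, the 20 ms window, and the names `envelope` and `modulation_energy` are all illustrative assumptions. It builds a 500 Hz tone whose loudness rises and falls four times per second, recovers the slow envelope, and measures how much of that envelope varies at 4 Hz versus 16 Hz.

```python
import math

def envelope(signal, sample_rate, window_s=0.02):
    """Crude temporal envelope: full-wave rectification plus a moving average."""
    win = max(1, int(window_s * sample_rate))
    rectified = [abs(x) for x in signal]
    out, running = [], 0.0
    for i, x in enumerate(rectified):
        running += x
        if i >= win:
            running -= rectified[i - win]  # drop the sample leaving the window
        out.append(running / min(i + 1, win))
    return out

def modulation_energy(env, sample_rate, mod_freq):
    """Magnitude of the envelope's DFT component at one modulation frequency."""
    mean = sum(env) / len(env)
    re = sum((e - mean) * math.cos(2 * math.pi * mod_freq * n / sample_rate)
             for n, e in enumerate(env))
    im = sum((e - mean) * math.sin(2 * math.pi * mod_freq * n / sample_rate)
             for n, e in enumerate(env))
    return math.hypot(re, im) / len(env)

# Hypothetical test signal: a 500 Hz carrier, amplitude-modulated at 4 Hz.
sample_rate = 8000
carrier_hz, mod_hz = 500.0, 4.0
signal = [
    (1.0 + 0.8 * math.sin(2 * math.pi * mod_hz * n / sample_rate))
    * math.sin(2 * math.pi * carrier_hz * n / sample_rate)
    for n in range(sample_rate)  # one second of audio
]

env = envelope(signal, sample_rate)
energy_at_4hz = modulation_energy(env, sample_rate, 4.0)
energy_at_16hz = modulation_energy(env, sample_rate, 16.0)
```

In this toy signal the envelope energy concentrates at the 4 Hz modulation rate. An auditory model would apply this kind of analysis separately to each frequency channel of a cochlea-like filterbank.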

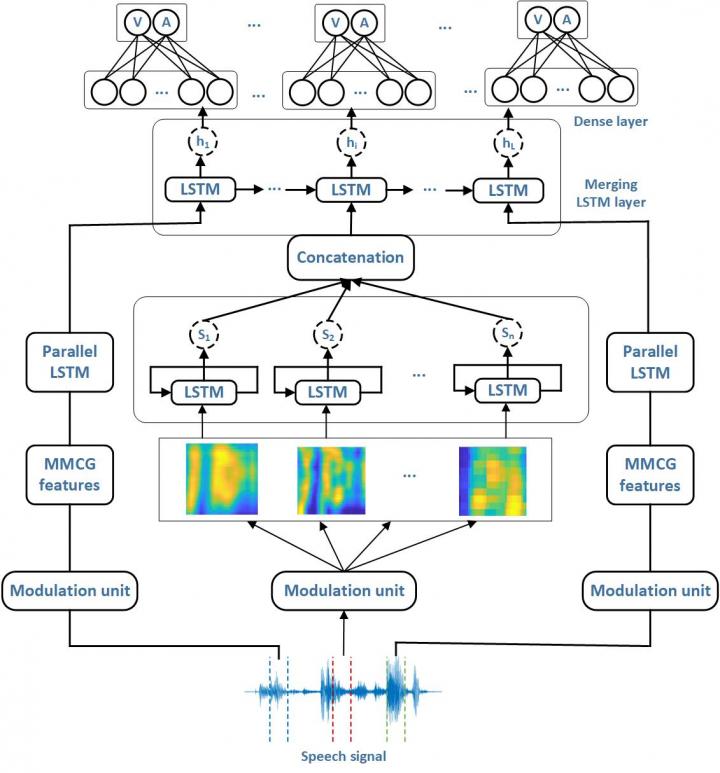

In a new study published in Neural Networks, Prof. Unoki and his colleagues, including Zhichao Peng from Tianjin University, China (who led the study), Jianwu Dang from Pengcheng Laboratory, China, and Prof. Masato Akagi from JAIST, have now taken inspiration from a recent finding in cognitive neuroscience suggesting that our brain forms multiple representations of natural sounds with different degrees of spectral (i.e., frequency) and temporal resolution through a combined analysis of spectral-temporal modulations. Accordingly, they have proposed a novel feature called the multi-resolution modulation-filtered cochleagram (MMCG), which combines four modulation-filtered cochleagrams (time-frequency representations of the input sound) at different resolutions to obtain the temporal and contextual modulation cues. To account for the diversity of the cochleagrams, the researchers designed a parallel neural network architecture based on “long short-term memory” (LSTM) networks to model the time variations of the multi-resolution signals from the cochleagrams. They then carried out extensive experiments on two datasets of spontaneous speech.
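The multi-resolution idea can be illustrated with a small sketch in plain Python. It is only an assumption-laden toy: the map below is synthetic rather than a real cochleagram, block averaging stands in for whatever filtering the study actually uses, and the four resolutions and the helper name `average_pool` are hypothetical. It shows how one time-frequency map can be turned into four views of decreasing temporal and spectral detail.

```python
def average_pool(tf_map, time_step, freq_step):
    """Average non-overlapping (time_step x freq_step) blocks of a time-frequency map."""
    n_frames, n_channels = len(tf_map), len(tf_map[0])
    pooled = []
    for t in range(0, n_frames - time_step + 1, time_step):
        row = []
        for f in range(0, n_channels - freq_step + 1, freq_step):
            block = [tf_map[t + dt][f + df]
                     for dt in range(time_step)
                     for df in range(freq_step)]
            row.append(sum(block) / len(block))
        pooled.append(row)
    return pooled

# Synthetic stand-in for a cochleagram: 16 time frames x 8 frequency channels.
tf_map = [[float(t + f) for f in range(8)] for t in range(16)]

# Four illustrative (time_step, freq_step) resolutions; not the study's settings.
resolutions = [(1, 1), (2, 1), (4, 2), (8, 4)]
views = [average_pool(tf_map, ts, fs) for ts, fs in resolutions]
shapes = [(len(v), len(v[0])) for v in views]
```

In a parallel architecture of the kind described above, each view would presumably feed its own recurrent branch, with the branch outputs merged before the final emotion prediction.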

The results were encouraging. The researchers found that MMCG showed significantly better emotion recognition performance than traditional acoustic-based features and other auditory-based features on both datasets. Furthermore, the parallel LSTM network predicted dimensional emotions better than a plain LSTM-based approach.

Prof. Unoki is thrilled and contemplates improving upon the MMCG feature in future research. “Our next goal is to analyze the robustness of environmental noise sources and investigate our feature for other tasks, such as categorical emotion recognition, speech separation, and voice activity detection,” he concludes.

Looks like it may not be too long before emotionally intelligent robots become a reality!

###

Reference

Title of original paper: “Multi-resolution modulation-filtered cochleagram feature for LSTM-based dimensional emotion recognition from speech”

Journal: Neural Networks

DOI: 10.1016/j.neunet.2021.03.027

About Japan Advanced Institute of Science and Technology, Japan

Founded in 1990 in Ishikawa prefecture, the Japan Advanced Institute of Science and Technology (JAIST) was the first independent national graduate school in Japan. Now, after 30 years of steady progress, JAIST has become one of Japan’s top-ranking universities. JAIST has multiple satellite campuses and strives to foster capable leaders with a state-of-the-art education system where diversity is key; about 40% of its alumni are international students. The university has a unique style of graduate education based on a carefully designed coursework-oriented curriculum to ensure that its students have a solid foundation on which to carry out cutting-edge research. JAIST also works closely with both local and overseas communities by promoting industry-academia collaborative research.

About Professor Masashi Unoki from Japan Advanced Institute of Science and Technology, Japan

Masashi Unoki is a Professor at the School of Information Science at Japan Advanced Institute of Science and Technology (JAIST), where he received his M.S. and Ph.D. degrees in 1996 and 1999, respectively. His main research interests lie in auditory-motivated signal processing and the modeling of auditory systems. Prof. Unoki received the Sato Prize from the Acoustical Society of Japan (ASJ) in 1999, 2010, and 2013 for an Outstanding Paper and the Yamashita Taro “Young Researcher” Prize from the Yamashita Taro Research Foundation in 2005. As a senior researcher, he has 420 publications to his credit, with over 2000 citations.

Funding information

The study was funded by a Grant-in-Aid for Innovative Areas (no. 18H05004) from MEXT, Japan, and was partially supported by the Research Foundation of Education Bureau of Hunan Province, China (grant no. 18A414).

Media Contact

Zhichao PENG


Related Journal Article

http://dx.