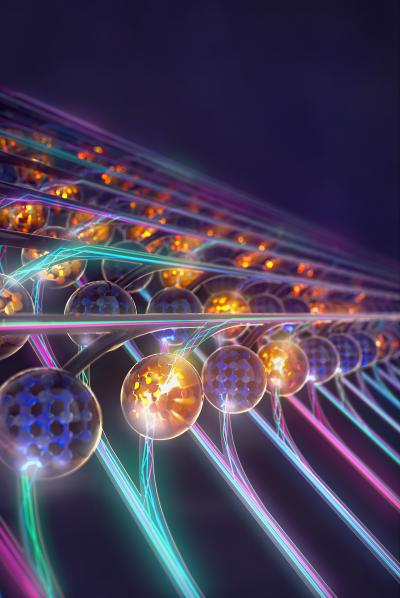

Credit: University of Oxford

The exponential growth of data traffic in our digital age poses real challenges for processing power. With the advent of machine learning and AI in, for example, self-driving vehicles and speech recognition, the upward trend is set to continue. All this places a heavy burden on the ability of current computer processors to keep up with demand.

Now, an international team of scientists has turned to light to tackle the problem. The researchers developed a new approach and architecture that combines processing and data storage on a single chip using light-based, or “photonic”, processors. These are shown to surpass conventional electronic chips by processing information much more rapidly and in parallel.

The scientists developed a hardware accelerator for so-called matrix-vector multiplications, which are the backbone of neural networks (algorithms inspired by the human brain) used in machine learning. Since different light wavelengths (colors) don’t interfere with each other, the researchers could use multiple wavelengths of light for parallel calculations. To do this, they used another innovative technology developed at EPFL as a light source: a chip-based “frequency comb”.
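As a rough numerical analogy for the idea above, the following sketch applies one weight matrix to several input vectors, each labeled as its own wavelength channel. It is only an illustration in plain Python, not the researchers' implementation: the function names, the channel labels, and the assumption that each wavelength carries its own input vector through the same matrix are all hypothetical.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def multiplexed_matvec(matrix, channels):
    """Apply the same matrix to every channel independently.

    `channels` maps a hypothetical wavelength label to an input vector.
    No channel depends on another, which is why the products could in
    principle be computed at the same time rather than one after another.
    """
    return {label: matvec(matrix, vec) for label, vec in channels.items()}


# Hypothetical 2x3 weight matrix shared by all channels.
weights = [
    [1, 0, -1],
    [2, 1, 0],
]

# Three illustrative input vectors, one per assumed wavelength.
channels = {
    "lambda_1": [1, 2, 3],
    "lambda_2": [0, 1, 0],
    "lambda_3": [4, 0, 1],
}

outputs = multiplexed_matvec(weights, channels)
for label, result in outputs.items():
    print(label, result)
```

In software the loop over channels still runs sequentially; the point of the photonic approach described here is that independent wavelengths allow those products to be carried out in parallel on the same chip.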

“Our study is the first to apply frequency combs in the field of artificial neural networks,” says Professor Tobias Kippenberg at EPFL, one of the study’s leads. Professor Kippenberg’s research has pioneered the development of frequency combs. “The frequency comb provides a variety of optical wavelengths that are processed independently of one another in the same photonic chip.”

“Light-based processors for speeding up tasks in the field of machine learning enable complex mathematical tasks to be processed at high speeds and throughputs,” says senior co-author Wolfram Pernice at Münster University, one of the professors who led the research. “This is much faster than conventional chips which rely on electronic data transfer, such as graphics cards or specialized hardware like TPUs (Tensor Processing Units).”

After designing and fabricating the photonic chips, the researchers tested them on a neural network that recognizes handwritten numbers. Inspired by biology, these networks are a concept in the field of machine learning and are used primarily in the processing of image or audio data. “The convolution operation between input data and one or more filters, which can identify edges in an image, for example, is well suited to our matrix architecture,” says Johannes Feldmann, now based at the University of Oxford Department of Materials. Nathan Youngblood (Oxford University) adds: “Exploiting wavelength multiplexing permits higher data rates and computing densities, i.e. operations per area of processor, not previously attained.”
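To make the link between convolution and matrix operations concrete, here is a minimal sketch in plain Python. It flattens each image patch into a vector and takes its dot product with a flattened filter, so the whole convolution becomes a matrix-vector product. The image, the edge filter, and the function names are illustrative assumptions, not taken from the study.

```python
def extract_patches(image, k):
    """Return every k-by-k patch of `image`, each flattened into one row."""
    rows, cols = len(image), len(image[0])
    patches = []
    for r in range(rows - k + 1):
        for c in range(cols - k + 1):
            patches.append(
                [image[r + i][c + j] for i in range(k) for j in range(k)]
            )
    return patches


def convolve_as_matvec(image, kernel):
    """Slide `kernel` over `image` by multiplying a patch matrix by the kernel.

    The kernel is applied without flipping, as is common in neural networks
    (strictly speaking, a cross-correlation).
    """
    k = len(kernel)
    flat_kernel = [v for row in kernel for v in row]
    out_cols = len(image[0]) - k + 1
    flat = [
        sum(p * w for p, w in zip(patch, flat_kernel))
        for patch in extract_patches(image, k)
    ]
    # Reshape the flat result back into a 2D feature map.
    return [flat[i:i + out_cols] for i in range(0, len(flat), out_cols)]


# Hypothetical 4x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Illustrative 2x2 filter that responds to a dark-to-bright vertical edge.
edge_kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve_as_matvec(image, edge_kernel)
for row in feature_map:
    print(row)
```

The feature map is nonzero only in the middle column, where the patch straddles the boundary between dark and bright pixels, which is the sense in which such a filter "identifies edges".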

“This work is a real showcase of European collaborative research,” says David Wright at the University of Exeter, who leads the EU project FunComp, which funded the work. “Whilst every research group involved is world-leading in their own way, it was bringing all these parts together that made this work truly possible.”

The study is published in Nature this week and has far-reaching applications: more simultaneous (and energy-saving) processing of data in artificial intelligence, larger neural networks for more accurate forecasts and more precise data analysis, processing of large amounts of clinical data for diagnoses, faster evaluation of sensor data in self-driving vehicles, and expanded cloud computing infrastructures with more storage space, computing power, and application software.

###

Reference

J. Feldmann, N. Youngblood, M. Karpov, H. Gehring, X. Li, M. Stappers, M. Le Gallo, X. Fu, A. Lukashchuk, A.S. Raja, J. Liu, C.D. Wright, A. Sebastian, T.J. Kippenberg, W.H.P. Pernice, H. Bhaskaran. Parallel convolution processing using an integrated photonic tensor core. Nature 07 January 2021. DOI: 10.1038/s41586-020-03070-1

Media Contact

Nik Papageorgiou

[email protected]

Related Journal Article

http://dx.