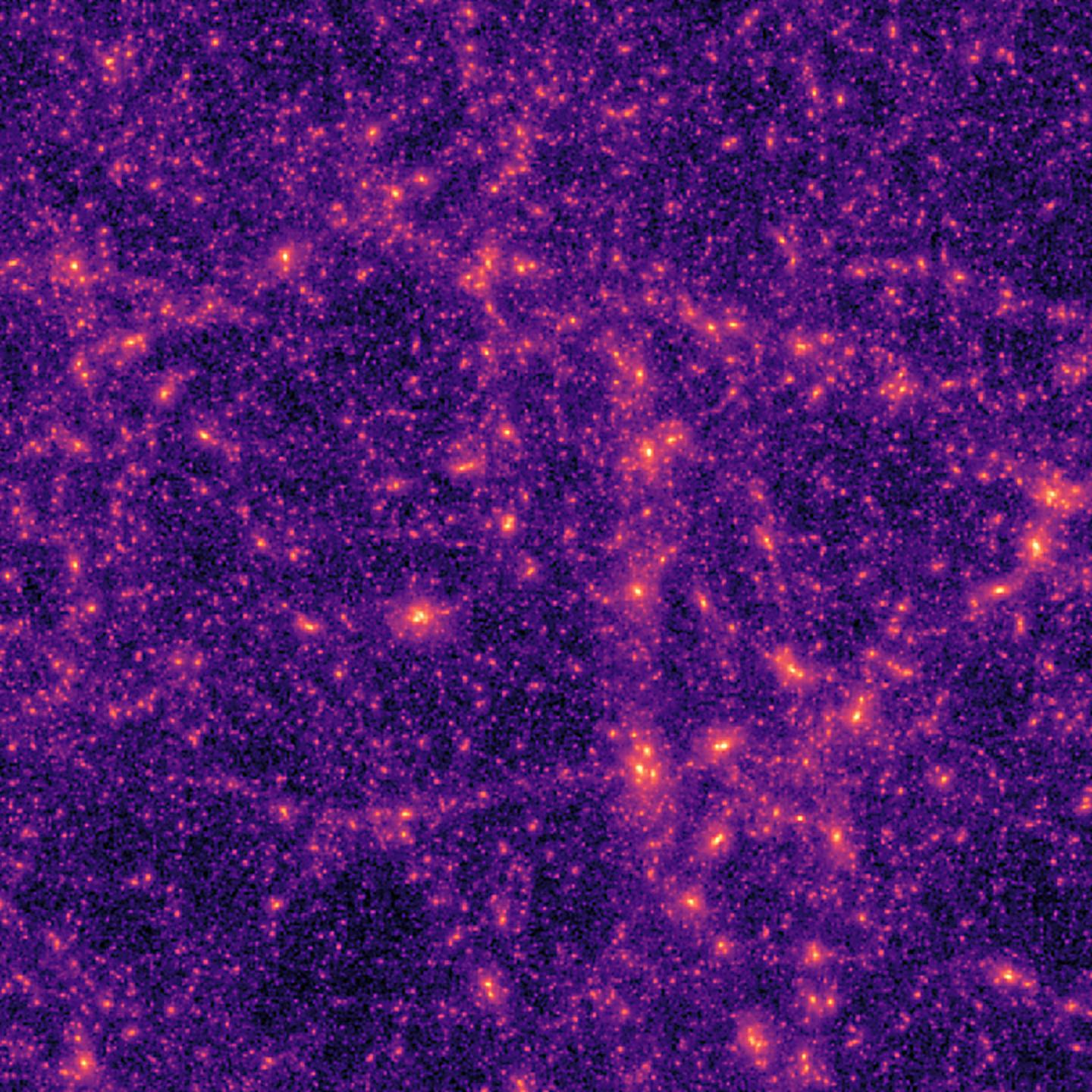

Credit: ETH Zurich

Understanding how our universe came to be what it is today, and what its final destiny will be, is one of the biggest challenges in science. The awe-inspiring display of countless stars on a clear night gives us some idea of the magnitude of the problem, and yet that is only part of the story. The deeper riddle lies in what we cannot see, at least not directly: dark matter and dark energy. With dark matter pulling the universe together and dark energy causing it to expand ever faster, cosmologists need to know exactly how much of each is out there in order to refine their models.

At ETH Zurich, scientists from the Department of Physics and the Department of Computer Science have now joined forces to apply artificial intelligence to this problem, improving on standard methods for estimating the dark matter content of the universe. For their cosmological data analysis they used cutting-edge machine learning algorithms that have a lot in common with those used for facial recognition by Facebook and other social media. Their results were recently published in the scientific journal Physical Review D.

Facial recognition for cosmology

While there are no faces to be recognized in pictures taken of the night sky, cosmologists still look for something rather similar, as Tomasz Kacprzak, a researcher in the group of Alexandre Refregier at the Institute of Particle Physics and Astrophysics, explains: “Facebook uses its algorithms to find eyes, mouths or ears in images; we use ours to look for the tell-tale signs of dark matter and dark energy.” As dark matter cannot be seen directly in telescope images, physicists rely on the fact that all matter – including the dark variety – slightly bends the path of light rays arriving at the Earth from distant galaxies. This effect, known as “weak gravitational lensing”, distorts the images of those galaxies very subtly, much like far-away objects appear blurred on a hot day as light passes through layers of air at different temperatures.
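The distortion can be made concrete with the standard weak-lensing distortion (Jacobian) matrix, which comes from textbook lensing theory rather than from this article. The minimal sketch below assumes a circular source galaxy and hypothetical values for the convergence κ (the magnification caused by matter along the line of sight) and the shear components γ1, γ2 (the stretching). The lensed image comes out slightly elliptical, and its ellipticity (a - b)/(a + b) equals the so-called reduced shear |γ|/(1 - κ).

```python
import numpy as np

def lensed_ellipticity(kappa: float, gamma1: float, gamma2: float) -> float:
    """Ellipticity (a - b) / (a + b) of the lensed image of a circular source.

    A maps image-plane positions back to the source plane (weak-lensing
    Jacobian), so the image of a unit circle is the ellipse traced by A^-1,
    whose semi-axes a >= b are the singular values of A^-1.
    """
    A = np.array([[1 - kappa - gamma1, -gamma2],
                  [-gamma2, 1 - kappa + gamma1]])
    a, b = np.linalg.svd(np.linalg.inv(A), compute_uv=False)  # largest first
    return (a - b) / (a + b)

# Hypothetical percent-level values, as expected in the "weak" lensing regime
kappa, gamma1, gamma2 = 0.02, 0.03, 0.0
e = lensed_ellipticity(kappa, gamma1, gamma2)
print(f"image ellipticity: {e:.4f}")                          # 0.0306
print(f"reduced shear:     {abs(gamma1) / (1 - kappa):.4f}")  # 0.0306
```

A distortion of about 3% is far too small to see in any single galaxy, whose intrinsic shape is unknown; it only becomes measurable statistically, by averaging over many galaxies in the same patch of sky.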

Cosmologists can use that distortion to work backwards and create mass maps of the sky showing where dark matter is located. Next, they compare those dark matter maps to theoretical predictions in order to find which cosmological model most closely matches the data. Traditionally, this is done using human-designed statistics such as so-called correlation functions, which describe how different parts of the maps are related to each other. Such statistics, however, are limited in how well they can capture complex patterns in the matter maps.
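For readers unfamiliar with correlation functions, here is a minimal sketch of one such human-designed statistic. It assumes a mass map stored as a square array with periodic edges and measures the two-point correlation ξ(r), the average product of map values separated by r pixels along the grid axes. It then picks whichever of two hypothetical model predictions lies closest to the measurement. The toy "data" map and both "predictions" are invented for illustration; real analyses work with angular separations on the sky, survey masks and covariance-weighted comparisons.

```python
import numpy as np

def correlation_function(kappa: np.ndarray, max_lag: int) -> np.ndarray:
    """Two-point correlation xi(r) of a periodic 2D map for pixel lags
    r = 0..max_lag, averaged over the two grid axes."""
    delta = kappa - kappa.mean()  # work with fluctuations about the mean
    xi = np.empty(max_lag + 1)
    for r in range(max_lag + 1):
        along_x = np.mean(delta * np.roll(delta, r, axis=0))
        along_y = np.mean(delta * np.roll(delta, r, axis=1))
        xi[r] = 0.5 * (along_x + along_y)
    return xi

def closest_model(xi_data: np.ndarray, predictions: dict) -> str:
    """Name of the model whose predicted xi(r) is nearest the data
    (plain sum of squared differences, for simplicity)."""
    return min(predictions, key=lambda name: np.sum((predictions[name] - xi_data) ** 2))

# Toy "data": unit white noise smoothed by a 5-point average (hypothetical mass map)
rng = np.random.default_rng(42)
noise = rng.normal(size=(256, 256))
kappa = (noise + np.roll(noise, 1, 0) + np.roll(noise, -1, 0)
         + np.roll(noise, 1, 1) + np.roll(noise, -1, 1)) / 5.0
xi = correlation_function(kappa, max_lag=3)

# Exact xi(r) for two hypothetical "models" of how the map was made
predictions = {
    "unsmoothed": np.array([0.2, 0.0, 0.0, 0.0]),
    "5-point smoothed": np.array([0.2, 0.08, 0.04, 0.0]),
}
print(closest_model(xi, predictions))  # → 5-point smoothed
```

Because ξ(r) only looks at pairs of points, maps with visibly different structures can share the same correlation function, which is one way to see why such statistics miss some of the information in the maps.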

Neural networks teach themselves

“In our recent work, we have used a completely new methodology”, says Alexandre Refregier. “Instead of inventing the appropriate statistical analysis ourselves, we let computers do the job.” This is where Aurelien Lucchi and his colleagues from the Data Analytics Lab at the Department of Computer Science come in. Together with Janis Fluri, a PhD student in Refregier’s group and lead author of the study, they used machine learning algorithms called deep artificial neural networks and taught them to extract the largest possible amount of information from the dark matter maps.

In a first step, the scientists trained the neural networks by feeding them computer-generated data that simulates the universe. That way, they knew what the correct answer for a given cosmological parameter – for instance, the ratio between the total amount of dark matter and dark energy – should be for each simulated dark matter map. By repeatedly analysing the dark matter maps, the neural network taught itself to look for the right kind of features in them and to extract more and more of the desired information. In the Facebook analogy, it got better at distinguishing random oval shapes from eyes or mouths.
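The training step can be illustrated with a deliberately tiny stand-in; this is not the authors' network. The sketch assumes a hypothetical "simulator" that produces 16×16 white-noise maps whose amplitude θ plays the role of the cosmological parameter, and a toy network (shared per-pixel filters, a ReLU, global average pooling and a linear read-out) trained by plain gradient descent on the mean squared error. Because every simulated map comes with its known θ, each prediction can be compared with the right answer and the network's weights adjusted accordingly.

```python
import numpy as np

SIDE = 16  # hypothetical toy map size (pixels per side)

def simulate_maps(n, rng):
    """Hypothetical toy simulator: white-noise maps whose amplitude theta stands
    in for a cosmological parameter. Returns flattened maps and their true theta."""
    theta = rng.uniform(0.5, 1.5, size=n)
    maps = rng.normal(size=(n, SIDE * SIDE)) * theta[:, None]
    return maps, theta

def forward(params, x):
    a, b, c, d = params
    z = x[:, :, None] * a + b   # k shared per-pixel (1x1) filters: shape (n, pixels, k)
    h = np.maximum(z, 0.0)      # ReLU non-linearity
    f = h.mean(axis=1)          # global average pooling over pixels: shape (n, k)
    return z, f, f @ c + d      # linear read-out of the parameter estimate

def train(x, y, k=4, steps=1000, lr=0.1, seed=1):
    """Full-batch gradient descent on the mean squared error against known labels."""
    rng = np.random.default_rng(seed)
    params = [rng.normal(size=k), np.zeros(k), np.zeros(k), float(y.mean())]
    n, p = x.shape
    for _ in range(steps):
        a, b, c, d = params
        z, f, y_hat = forward(params, x)
        dy = 2.0 * (y_hat - y) / n                          # dLoss/dy_hat
        dc, dd = f.T @ dy, dy.sum()
        dz = (dy[:, None] * c)[:, None, :] / p * (z > 0)    # back through pooling and ReLU
        da = (dz * x[:, :, None]).sum(axis=(0, 1))
        db = dz.sum(axis=(0, 1))
        params = [a - lr * da, b - lr * db, c - lr * dc, d - lr * dd]
    return params

rng = np.random.default_rng(0)
x_train, y_train = simulate_maps(200, rng)   # simulations with known answers
x_test, y_test = simulate_maps(100, rng)     # fresh simulations for checking
params = train(x_train, y_train)
_, _, predictions = forward(params, x_test)
mae = np.mean(np.abs(predictions - y_test))
baseline = np.mean(np.abs(y_train.mean() - y_test))  # always guess the average theta
print(f"network MAE: {mae:.3f}, constant-guess MAE: {baseline:.3f}")
```

A real analysis would use deep convolutional layers, realistic simulations including noise, and far more training maps; the sketch only shows the loop of simulating, predicting, comparing with the known answer and adjusting.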

More accurate than human-made analysis

The results of that training were encouraging: the neural networks came up with values that were 30% more accurate than those obtained by traditional methods based on human-made statistical analysis. For cosmologists, that is a huge improvement: reaching the same accuracy by increasing the number of telescope images would require twice as much observation time, which is expensive.
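The "twice as much observation time" figure follows from a simple scaling argument, sketched here under two assumptions the article does not spell out: that "30% more accurate" means the uncertainty σ shrinks to 70% of its former value, and that statistical uncertainty falls as the inverse square root of the observing time T (that is, of the number of independent measurements).

$$
\sigma \propto \frac{1}{\sqrt{T}}
\quad\Longrightarrow\quad
\frac{T_{\text{equivalent}}}{T}
= \left(\frac{\sigma_{\text{traditional}}}{\sigma_{\text{network}}}\right)^{2}
= \left(\frac{1}{0.7}\right)^{2}
\approx 2.04
$$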

Finally, the scientists used their fully trained neural network to analyse actual dark matter maps from the KiDS-450 dataset. “This is the first time such machine learning tools have been used in this context,” says Fluri, “and we found that the deep artificial neural network enables us to extract more information from the data than previous approaches. We believe that this usage of machine learning in cosmology will have many future applications.”

As a next step, he and his colleagues plan to apply their method to bigger image sets such as the Dark Energy Survey. They also intend to feed the neural networks more cosmological parameters and refinements, such as details about the nature of dark energy.

###

Reference

Fluri J, Kacprzak T, Lucchi A, Refregier A, Amara A, Hofmann T, Schneider A: Cosmological constraints with deep learning from KiDS-450 weak lensing maps. Physical Review D. 100: 063514, doi: 10.1103/PhysRevD.100.063514

Media Contact

Janis Fluri
