What a perception system doesn’t see can help it understand what it sees

Credit: Carnegie Mellon University

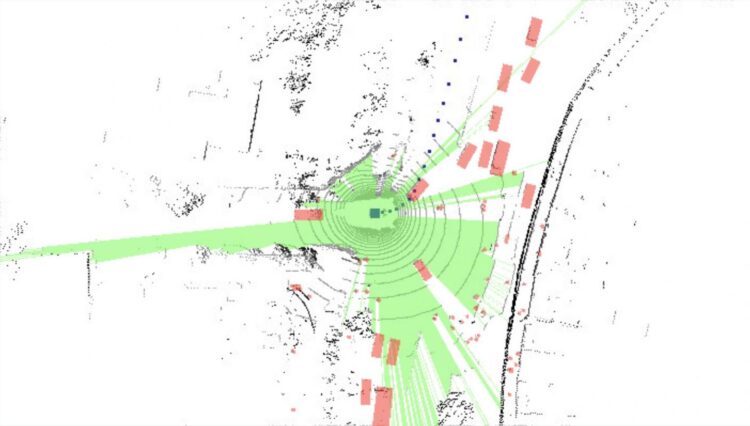

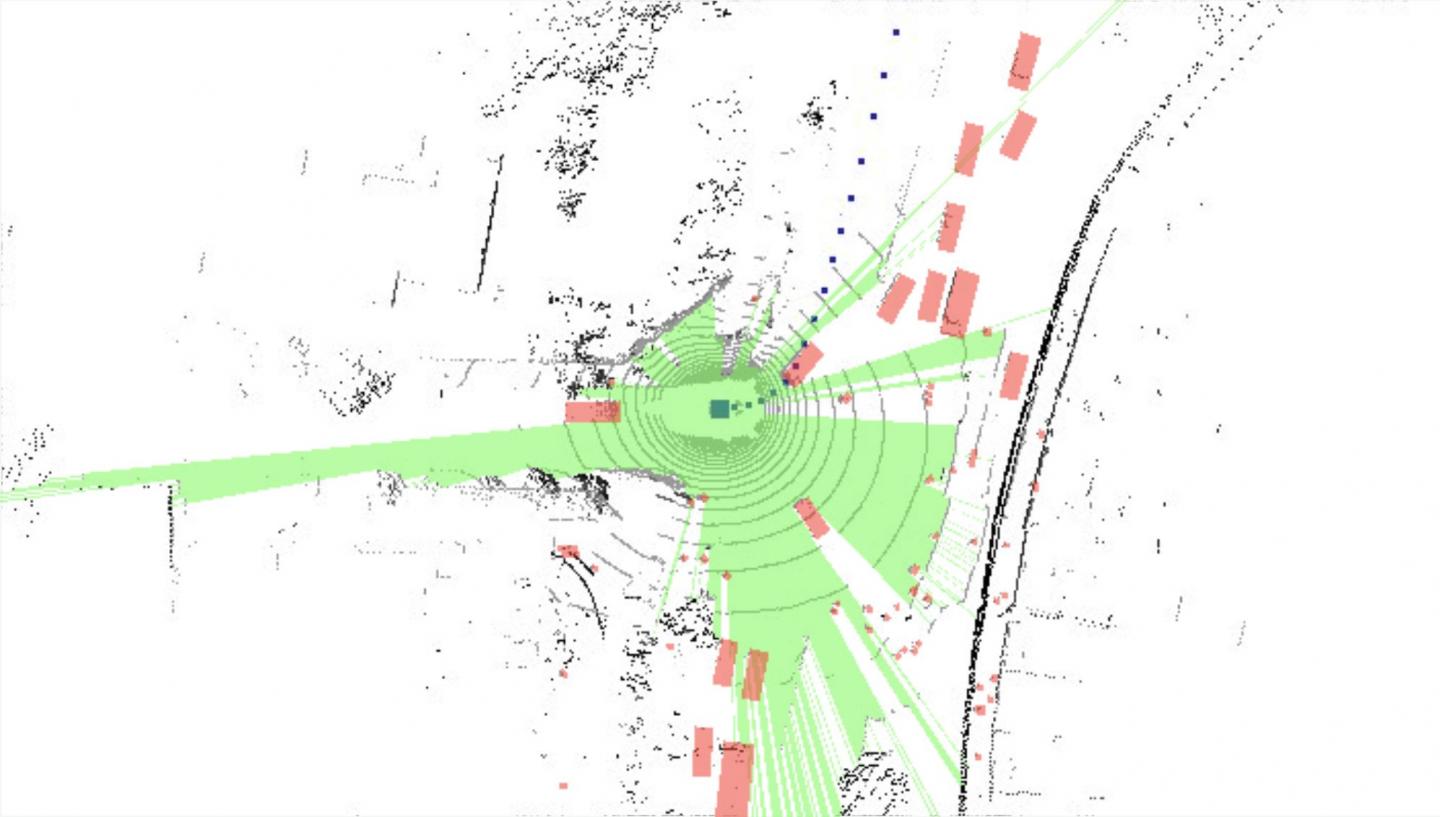

PITTSBURGH–It’s important that self-driving cars quickly detect other cars or pedestrians sharing the road. Researchers at Carnegie Mellon University have shown that they can significantly improve detection accuracy by helping the vehicle also recognize what it doesn’t see.

Empty space, that is.

The fact that objects in your line of sight can obscure things that lie farther ahead is blindingly obvious to people. But Peiyun Hu, a Ph.D. student in CMU’s Robotics Institute, said that’s not how self-driving cars typically reason about objects around them.

Rather, they use 3D data from lidar to represent objects as a point cloud and then try to match those point clouds to a library of 3D representations of objects. The problem, Hu said, is that the 3D data from the vehicle’s lidar isn’t really 3D — the sensor can’t see the occluded parts of an object, and current algorithms don’t reason about such occlusions.

“Perception systems need to know their unknowns,” Hu observed.

Hu’s work enables a self-driving car’s perception systems to consider visibility as it reasons about what its sensors are seeing. In fact, reasoning about visibility is already used when companies build digital maps.

“Map-building fundamentally reasons about what’s empty space and what’s occupied,” said Deva Ramanan, an associate professor of robotics and director of the CMU Argo AI Center for Autonomous Vehicle Research. “But that doesn’t always occur for live, on-the-fly processing of obstacles moving at traffic speeds.”

In research to be presented at the Computer Vision and Pattern Recognition (CVPR) conference, which will be held virtually June 13-19, Hu and his colleagues borrow techniques from map-making to help the system reason about visibility when trying to recognize objects.
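To make the map-making idea concrete, here is a minimal sketch of how casting a ray from the sensor to each lidar return can separate known-empty space from space the sensor never saw. It is an illustration only, not the CMU team's implementation: it works on a small 2D grid rather than 3D data, and the function name `visibility_grid`, the grid size, and the cell size are all assumptions made for this example.

```python
import math

# Cell states: the sensor either never saw the cell, saw through it, or hit something in it.
UNKNOWN, FREE, OCCUPIED = 0, 1, 2


def visibility_grid(points, size=21, cell=1.0):
    """Classify cells of a square 2D grid centered on the sensor.

    points: iterable of (x, y) lidar returns in meters, sensor at (0, 0).
    size and cell are hypothetical parameters chosen for this sketch.
    """
    grid = [[UNKNOWN] * size for _ in range(size)]
    origin = size // 2

    def to_cell(x, y):
        # Map metric coordinates to the nearest grid cell index.
        return (origin + math.floor(x / cell + 0.5),
                origin + math.floor(y / cell + 0.5))

    def in_bounds(cx, cy):
        return 0 <= cx < size and 0 <= cy < size

    for px, py in points:
        end = to_cell(px, py)
        dist = math.hypot(px, py)
        # Sample the ray at half-cell spacing so no cell along it is skipped.
        steps = max(1, int(dist / (cell * 0.5)))
        for i in range(steps):
            t = i / steps
            cx, cy = to_cell(px * t, py * t)
            if (cx, cy) == end:
                continue  # the return's own cell is handled below
            if in_bounds(cx, cy) and grid[cy][cx] != OCCUPIED:
                grid[cy][cx] = FREE  # the beam passed through, so this is empty
        if in_bounds(*end):
            grid[end[1]][end[0]] = OCCUPIED  # the beam stopped here

    return grid


# One return 5 m straight ahead of the sensor.
grid = visibility_grid([(5.0, 0.0)])
```

Cells between the sensor and the return come out as `FREE`, the return's cell is `OCCUPIED`, and everything behind it stays `UNKNOWN`. That last state is the "what it doesn't see" part: space the beam never reached.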

When tested against a standard benchmark, the CMU method outperformed the previous top-performing technique, improving detection by 10.7% for cars, 5.3% for pedestrians, 7.4% for trucks, 18.4% for buses and 16.7% for trailers.

One reason previous systems may not have taken visibility into account is a concern about computation time. But Hu said his team found that was not a problem: their method takes just 24 milliseconds to run. (For comparison, each lidar sweep takes 100 milliseconds, so the method uses roughly a quarter of the time between sweeps.)

###

In addition to Hu and Ramanan, the research team included Jason Ziglar of Argo AI and David Held, assistant professor of robotics. The Argo AI Center supported this research.

Media Contact

Byron Spice

[email protected]