A multi-institution team from Australia and Germany simulates turbulence happening on both sides of the so-called “sonic scale,” opening the door for more detailed and realistic galaxy formation simulations

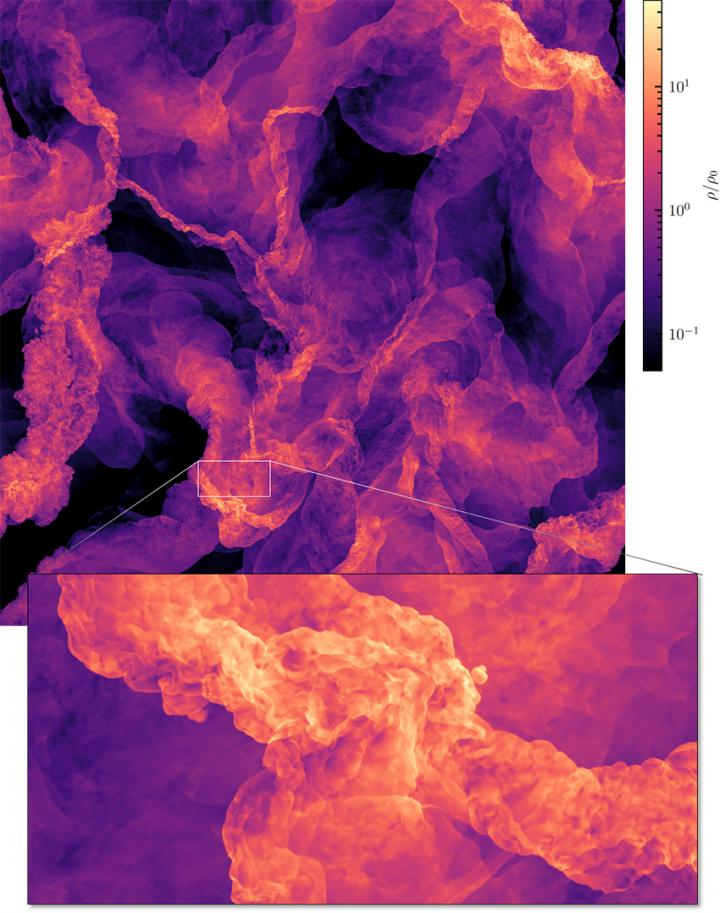

Credit: Federrath et al. Nature Astronomy. DOI: 10.1038/s41550-020-01282-z

Through the centuries, scientists and non-scientists alike have looked at the night sky and felt excitement, intrigue, and overwhelming mystery while pondering questions about how our universe came to be, and how humanity developed and thrived in this exact place and time. Early astronomers painstakingly studied stars’ subtle movements in the night sky to try to determine how our planet moves in relation to other celestial bodies. As technology has advanced, so too has our understanding of how the universe works and our relative position within it.

What remains elusive, however, is a more detailed understanding of how stars and planets formed in the first place. Astrophysicists and cosmologists understand that the movement of material across the interstellar medium (ISM) helped form planets and stars, but how this complex mixture of gas and dust, the fuel for star formation, moves across the universe is even more mysterious.

To better understand this mystery, researchers have turned to the power of high-performance computing (HPC) to develop high-resolution recreations of phenomena in the galaxy. Much like researchers tackling terrestrial challenges in engineering and fluid dynamics, astrophysicists are focused on developing a better understanding of the role turbulence plays in shaping our universe.

Over the last several years, a multi-institution collaboration led by Australian National University Associate Professor Christoph Federrath and Heidelberg University Professor Ralf Klessen has been using HPC resources at the Leibniz Supercomputing Centre (LRZ) in Garching near Munich to study turbulence’s influence on galaxy formation. The team recently revealed the so-called “sonic scale” of astrophysical turbulence, which marks the transition from supersonic to subsonic speeds (faster or slower than the speed of sound, respectively), and created the largest-ever simulation of supersonic turbulence in the process. The team published its research in Nature Astronomy.

Many scales in a simulation

To simulate turbulence in their research, Federrath and his collaborators needed to solve the complex equations of gas dynamics representing a wide variety of scales. Specifically, the team needed to simulate turbulent dynamics on both sides of the sonic scale in the complex, gaseous mixture travelling across the ISM. This meant having a sufficiently large simulation to capture these large-scale phenomena happening faster than the speed of sound, while also advancing the simulation slowly and with enough detail to accurately model the smaller, slower dynamics taking place at subsonic speeds.

“Turbulent flows only occur on scales far away from the energy source that drives on large scales, and also far away from the so-called dissipation (where the kinetic energy of the turbulence turns into heat) on small scales,” Federrath said. “For our particular simulation, in which we want to resolve both the supersonic and the subsonic cascade of turbulence with the sonic scale in between, this requires at least 4 orders of magnitude in spatial scales to be resolved.”

Beyond scale, the complexity of the simulations poses another major computational challenge. While turbulence on Earth is one of the last major unsolved mysteries of physics, researchers who study terrestrial turbulence have one major advantage: the majority of these fluids are incompressible or only mildly compressible, meaning that their density stays close to constant. In the ISM, though, the gaseous mix of elements is highly compressible, meaning researchers not only have to account for the large range of scales that influences turbulence, they also have to solve equations throughout the simulation to know the gases’ density before proceeding.

Understanding the role that density near the sonic scale plays in star formation is important for Federrath and his collaborators, because modern theories of star formation suggest that the sonic scale itself serves as a “Goldilocks zone” for star formation. Astrophysicists have long used similar terms to discuss how a planet’s proximity to a star determines its ability to host life, but for star formation itself, the sonic scale strikes a balance between the forces of turbulence and gravity, creating the conditions for stars to form more easily. Scales larger than the sonic scale tend to have too much turbulence, leading to sparse star formation, while in smaller, subsonic regions, gravity wins the day and leads to localized clusters of stars forming.

In order to accurately simulate the sonic scale and the supersonic and subsonic scales on either side, the team worked with LRZ to scale its application to more than 65,000 compute cores on the SuperMUC HPC system. Having so many compute cores available allowed the team to create a simulation with more than 1 trillion resolution elements, making it the largest-ever simulation of its kind.
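As a rough consistency check, the resolution figure can be related to the range of scales Federrath says is required. The sketch below assumes a uniform cubic grid with exactly 10^12 resolution elements; both are illustrative assumptions, since the text only states "more than 1 trillion" and does not give the grid dimensions.

```python
import math

# Assumption: exactly 1e12 elements on a uniform cubic grid.
# The article only says "more than 1 trillion resolution elements".
TOTAL_ELEMENTS = 1e12


def elements_per_side(total_elements: float) -> float:
    """Number of elements along one edge of a cubic grid."""
    return total_elements ** (1.0 / 3.0)


def orders_of_magnitude(ratio: float) -> float:
    """Base-10 logarithm of a largest-to-smallest scale ratio."""
    return math.log10(ratio)


side = elements_per_side(TOTAL_ELEMENTS)
scale_range = orders_of_magnitude(side)

print(f"Elements per side: about {side:,.0f}")
print(f"Range of spatial scales: about {scale_range:.1f} orders of magnitude")
```

Under these assumptions, each edge of the grid holds roughly 10,000 elements, so the ratio between the largest and smallest representable scale is about four orders of magnitude, in line with the requirement quoted earlier.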

“With this simulation, we were able to resolve the sonic scale for the first time,” Federrath said. “We found its location was close to theoretical predictions, but with certain modifications that will hopefully lead to more refined star formation models and more accurate predictions of star formation rates of molecular clouds in the universe. The formation of stars powers the evolution of galaxies on large scales and sets the initial conditions for planet formation on small scales, and turbulence is playing a big role in all of this. We ultimately hope that this simulation advances our understanding of the different types of turbulence on Earth and in space.”

Cosmological collaborations and computational advancements

While the team is proud of its record-breaking simulation, it is already turning its attention to adding more detail, working toward an even more accurate picture of star formation. Federrath indicated that the team plans to start incorporating the effects of magnetic fields, which would substantially increase the memory requirements of a simulation that already demands significant memory and computing power as well as multiple petabytes of storage. The current simulation requires 131 terabytes of memory and 23 terabytes of disk space per snapshot, and the whole simulation consists of more than 100 snapshots.
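The storage figures quoted above can be checked with simple arithmetic. This sketch assumes decimal units (1 petabyte = 1,000 terabytes), exactly 100 snapshots, and exactly 10^12 resolution elements; the text gives the last two only as lower bounds, so the total storage is a minimum and the per-element memory is a maximum.

```python
# Figures quoted in the article.
MEMORY_TB = 131.0        # memory needed by the running simulation
SNAPSHOT_TB = 23.0       # disk space per snapshot

# Assumptions for illustration (the article says "more than" for both).
NUM_SNAPSHOTS = 100
NUM_ELEMENTS = 1e12

TB_PER_PB = 1000.0       # decimal units assumed
BYTES_PER_TB = 1e12


def total_storage_pb(snapshot_tb: float, num_snapshots: int) -> float:
    """Total disk space for all snapshots, in petabytes."""
    return snapshot_tb * num_snapshots / TB_PER_PB


def bytes_per_element(memory_tb: float, num_elements: float) -> float:
    """Average memory footprint of one resolution element, in bytes."""
    return memory_tb * BYTES_PER_TB / num_elements


storage_pb = total_storage_pb(SNAPSHOT_TB, NUM_SNAPSHOTS)
per_element = bytes_per_element(MEMORY_TB, NUM_ELEMENTS)

print(f"Snapshot storage: at least {storage_pb:.1f} PB")
print(f"Memory per element: at most about {per_element:.0f} bytes")
```

With these assumptions, the snapshots alone occupy at least 2.3 petabytes, consistent with the "multiple petabytes" mentioned in the text, and each resolution element accounts for at most about 131 bytes of memory.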

Since he was working on his doctoral degree at the University of Heidelberg, Federrath has collaborated with staff at LRZ’s AstroLab to help scale his simulations to take full advantage of modern HPC systems. Running the largest-ever simulation of its type validates the merits of this long-running collaboration. During this period, Federrath has worked closely with Dr. Luigi Iapichino, head of LRZ’s AstroLab, who was a co-author on the Nature Astronomy publication.

“I see our mission as being the interface between the ever-increasing complexity of the HPC architectures, which is a burden on the application developers, and the scientists, which don’t always have the right skill set for using HPC resource in the most effective way,” Iapichino said. “From this viewpoint, collaborating with Christoph was quite simple because he is very skilled in programming for HPC performance. I am glad that in this kind of collaborations, application specialists are often full-fledged partners of researchers, because it stresses the key role centres’ staffs play in the evolving HPC framework.”

###

-Eric Gedenk
