Current artificial intelligence models use billions of trainable parameters to accomplish challenging tasks. However, this large number of parameters comes at a hefty cost. Training and deploying these huge models require immense memory and computing capability that only hangar-sized data centers can provide, in processes that consume as much energy as the electricity needs of midsized cities. The research community is now working to rethink both the computing hardware and the machine learning algorithms so that artificial intelligence can keep developing at its current pace sustainably.

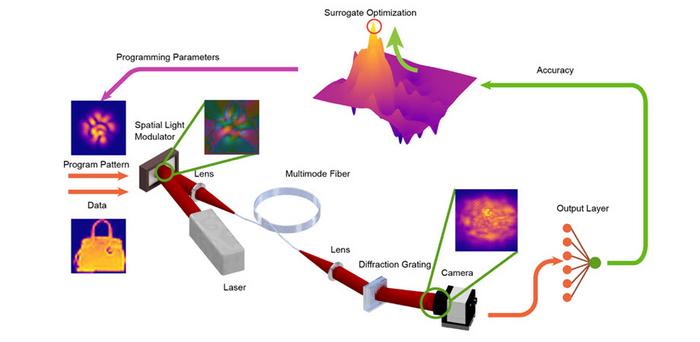

Credit: Oguz et al., doi 10.1117/1.AP.6.1.016002.

Optical implementation of neural network architectures is a promising avenue because the connections between units can be implemented at low power. New research reported in Advanced Photonics combines light propagation inside multimode fibers with a small number of digitally programmable parameters and matches the image classification performance of fully digital systems that have more than 100 times as many programmable parameters. This computational framework reduces memory requirements and the need for energy-intensive digital processes, while achieving the same level of accuracy in a variety of machine learning tasks.

The heart of this work, led by Professors Demetri Psaltis and Christophe Moser of EPFL (Swiss Federal Institute of Technology in Lausanne), lies in the precise control of ultrashort pulses within multimode fibers through a technique known as wavefront shaping. This allows nonlinear optical computations to be implemented with microwatts of average optical power, a crucial step toward realizing the potential of optical neural networks. “In this study, we found out that with a small group of parameters, we can select a specific set of model weights from the weight bank that optics provides and employ it for the aimed computing task. This way, we used naturally occurring phenomena as a computing hardware without going into the trouble of manufacturing and operating a device specialized for this purpose,” states Ilker Oguz, lead co-author of the work.
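The idea of steering a fixed physical system with only a few programmable values can be illustrated with a toy numerical analogy. The sketch below is not the authors' method or code: the random complex matrix standing in for the fiber, the phase encoding, the random search, and all names and sizes (`MIXING`, `propagate`, `N_PROG`, and so on) are assumptions chosen for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
N_IN, N_PROG, N_OUT = 8, 4, 32   # illustrative sizes, not taken from the paper

# Fixed, never-trained complex mixing matrix: a crude stand-in for the fiber.
MIXING = (rng.normal(size=(N_IN + N_PROG, N_OUT))
          + 1j * rng.normal(size=(N_IN + N_PROG, N_OUT)))

def propagate(x, program):
    """Phase-encode data and program values, mix them, detect intensity."""
    prog = np.broadcast_to(program, (x.shape[0], N_PROG))
    field = np.exp(1j * np.hstack([x, prog]))
    return np.abs(field @ MIXING) ** 2   # intensity detection is nonlinear

def readout_accuracy(features, y):
    """Fit a small linear readout by least squares; return training accuracy."""
    design = np.hstack([features, np.ones((features.shape[0], 1))])
    weights, *_ = np.linalg.lstsq(design, y, rcond=None)
    return float(np.mean(np.sign(design @ weights) == y))

# Toy two-class problem (hypothetical data, labels are +1 or -1).
x = rng.uniform(-np.pi, np.pi, size=(200, N_IN))
y = np.sign(np.sin(x[:, 0]) * np.sin(x[:, 1]))

# Random search over the few programmable values; the mixing matrix stays fixed.
candidates = rng.uniform(-np.pi, np.pi, size=(20, N_PROG))
scores = [readout_accuracy(propagate(x, p), y) for p in candidates]
best_program = candidates[int(np.argmax(scores))]
best_score = max(scores)
```

Only the `N_PROG` program values and the small linear readout are adjusted here; the mixing itself is never trained, which loosely mirrors the quote's picture of selecting a useful configuration from what the physical system already provides.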

This result marks a significant stride toward addressing the challenges posed by the escalating demand for larger machine learning models. By harnessing the computational power of light propagation through multimode fibers, the researchers have paved the way for low-energy, highly efficient hardware solutions in artificial intelligence. As the reported nonlinear optics experiment shows, this computational framework can also be used to efficiently program other high-dimensional, nonlinear phenomena to perform machine learning tasks, and it can offer a transformative solution to the resource-intensive nature of current AI models.

For details, see the Gold Open Access article by Oguz et al., “Programming nonlinear propagation for efficient optical learning machines,” Adv. Photon. 6(1) 016002 (2024), doi 10.1117/1.AP.6.1.016002.

Journal: Advanced Photonics

DOI: 10.1117/1.AP.6.1.016002

Article Title: Programming nonlinear propagation for efficient optical learning machines

Article Publication Date: 25-Jan-2024