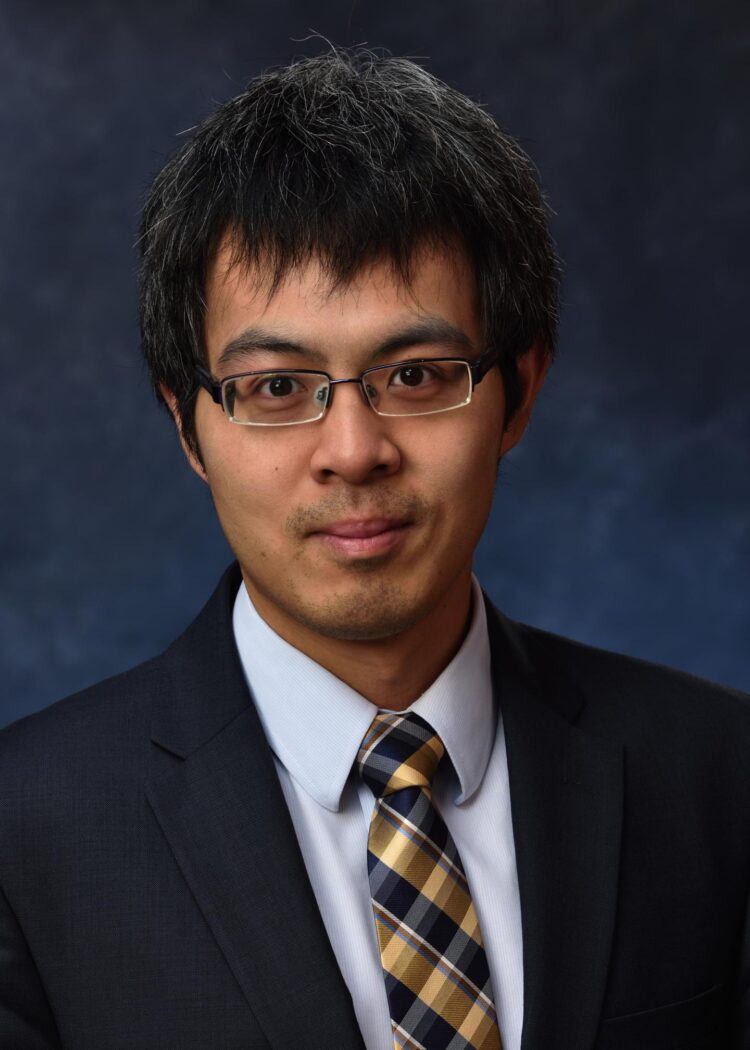

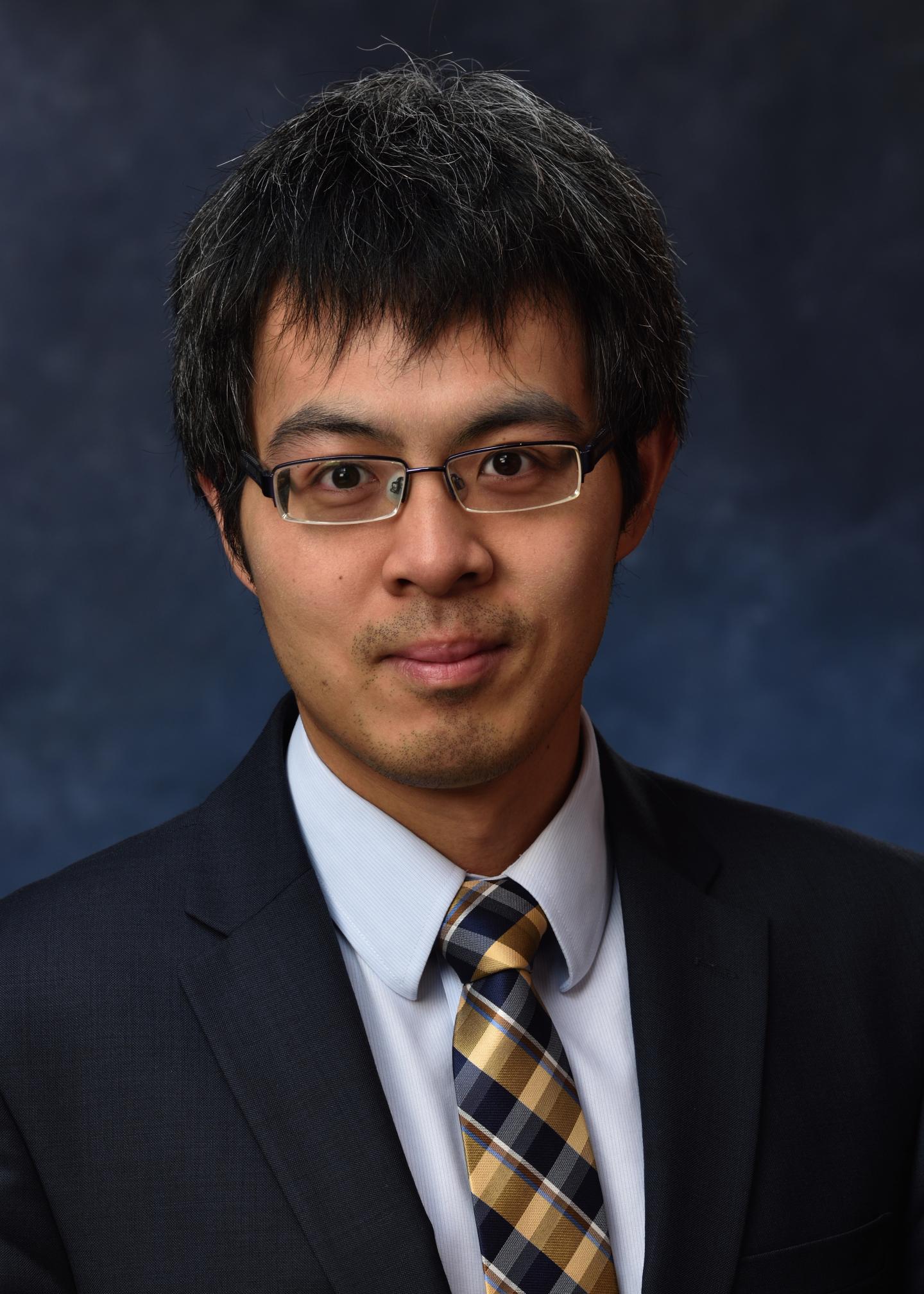

Credit: University of Pittsburgh

PITTSBURGH (June 10, 2020) — The world runs on data. Self-driving cars, security, healthcare and automated manufacturing are all part of a “big data revolution,” which has created a critical need for more efficient ways to sift through vast datasets and extract valuable insights.

When it comes to the level of efficiency needed for these tasks, however, the human brain is unparalleled. Taking inspiration from the brain, Feng Xiong, assistant professor of electrical and computer engineering at the University of Pittsburgh’s Swanson School of Engineering, is collaborating with Duke University’s Yiran Chen to develop a two-dimensional synaptic array that will allow computers to do this work with less power and greater speed. Xiong has received a $300,000 award from the National Science Foundation for this project.

“Deep neural networks (DNN) work by training a neural network with massive datasets for applications like pattern recognition, image reconstruction or video and language processing,” said Xiong. “For example, if airport security wanted to create a program that could identify firearms, they would need to input thousands of pictures of different firearms in different situations to teach the program what it should look for. It’s not unlike how we as humans learn to identify different objects.”

To do this, supercomputing systems constantly shuttle data back and forth between separate computation and memory units, which makes DNNs computationally intensive and power hungry. This inefficiency makes it impractical to scale them up to the complexity needed for true artificial intelligence (AI). In the human brain, by contrast, computation and memory rely on a closely and densely connected network of neurons and synapses, which is why the brain consumes only about 20 W.

“The way our brains learn is gradual. For example, say you’re learning what an apple is. Each time you see the apple, it might be in a different context: on the ground, on a table, in a hand. Your brain learns to recognize that it’s still an apple,” said Xiong. “Each time you see it, the neural connection changes a bit. In computing we want this high-precision synapse to mimic that, so that over time, the connections strengthen. The finer the adjustments we can make, the more powerful the program can be, and the more memory it can have.”

Existing consumer electronic devices struggle to make this kind of gradual, slight adjustment because they rely on binary states, which are essentially limited to on or off, yes or no. The proposed artificial synapse would instead offer 1,000 distinct states, with precise control over moving between them.
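Why the number of states matters can be shown with a toy model. The sketch below is a hypothetical illustration, not the team's device model: it assumes a synapse whose weight can only sit on evenly spaced levels between 0 and 1, and a simple rule that rounds each requested small change to the nearest whole level. The names `DiscreteSynapse`, `train`, `target` and `lr` are invented for this example.

```python
from dataclasses import dataclass


@dataclass
class DiscreteSynapse:
    """Hypothetical synapse whose weight can only take one of `levels`
    evenly spaced values between w_min and w_max (levels must be >= 2)."""
    levels: int
    w_min: float = 0.0
    w_max: float = 1.0
    state: int = 0  # index of the current level, 0 .. levels - 1

    @property
    def step(self) -> float:
        # Weight change produced by moving up or down one level.
        return (self.w_max - self.w_min) / (self.levels - 1)

    @property
    def weight(self) -> float:
        return self.w_min + self.state * self.step

    def nudge(self, delta: float) -> None:
        # Assumption: the device can only move by whole levels, so the
        # requested change is rounded to the nearest number of steps,
        # then clamped to the device's range.
        n_steps = round(delta / self.step)
        self.state = max(0, min(self.levels - 1, self.state + n_steps))


def train(levels: int, target: float, lr: float = 0.05, iters: int = 200) -> float:
    """Repeatedly nudge the weight a little toward `target`, the way each
    new example of an apple adjusts a connection slightly."""
    syn = DiscreteSynapse(levels=levels)
    for _ in range(iters):
        error = target - syn.weight
        syn.nudge(lr * error)  # small, gradual update
    return syn.weight


if __name__ == "__main__":
    print("2 states:    ", train(levels=2, target=0.37))
    print("1,000 states:", train(levels=1000, target=0.37))
```

With only two states, each small update (here at most 5% of the remaining error) rounds to zero steps, so the binary synapse never moves from 0.0. With 1,000 states the same updates are large enough to register, and the weight settles within about 0.01 of the target.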

Additionally, smaller devices, such as sensors and other embedded systems, must send their data to a larger computer for processing. The proposed device’s small size, flexibility and low power consumption could allow those calculations to run on the smaller devices themselves, letting sensors process information on-site.

“What we’re proposing is that, theoretically, we could lower the energy needed to run these algorithms, hopefully by 1,000 times or more. This way, it can make power requirements more reasonable, so a flexible or wearable electronic device could run it with a very small power supply,” said Xiong.

The project, titled “Collaborative Research: Two-dimensional Synaptic Array for Advanced Hardware Acceleration of Deep Neural Networks,” is expected to last three years, beginning on Sept. 1, 2020.

###

Media Contact

Maggie Pavlick


Original Source

https:/