In an advance at the intersection of artificial intelligence and pediatric orthodontics, researchers have unveiled an AI-powered system for bone age assessment based on analysis of the cervical vertebrae. The model, called the Attend-and-Refine Network version 2 (ARNet-v2), locates anatomical keypoints on the cervical vertebrae in routine lateral X-rays of the head and neck, and uses them to accurately estimate when a child's pubertal growth spurt will peak. The researchers expect it to improve the timing of orthodontic treatment, reduce clinicians' manual workload, and lower radiation exposure in young patients.

Traditional methods for estimating the growth spurt, a critical factor in orthodontic treatment planning, rely on manual annotation of lateral cephalometric radiographs. Clinicians painstakingly mark specific landmarks on the cervical vertebrae and relate these features to skeletal maturity. However, this process is labor-intensive and subject to significant intra- and interobserver variability, which limits its consistency and efficiency. Clinicians have long needed an automated, reliable alternative.
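Once the landmarks are marked, quantitative cervical vertebral analysis reduces them to a few geometric measures. As a minimal sketch, assuming pixel coordinates and a hypothetical layout of three points on a vertebra's inferior border plus the four corners of its body, the Python below computes two measures commonly used in quantitative cervical vertebral maturation studies: the depth of the inferior-border concavity and the height-to-width ratio of the vertebral body. The function names and landmark layout are illustrative assumptions, not code from the ARNet-v2 paper.

```python
import math
from typing import Tuple

# (x, y) in pixels; this landmark layout is a hypothetical example
Point = Tuple[float, float]


def concavity_depth(anterior: Point, deepest: Point, posterior: Point) -> float:
    """Perpendicular distance from the deepest point of the inferior border
    to the straight line joining its anterior and posterior corners."""
    (x1, y1), (x0, y0), (x2, y2) = anterior, deepest, posterior
    # |cross product| / base length = triangle height = point-to-line distance
    cross = (x2 - x1) * (y1 - y0) - (x1 - x0) * (y2 - y1)
    return abs(cross) / math.hypot(x2 - x1, y2 - y1)


def height_width_ratio(ant_sup: Point, post_sup: Point,
                       ant_inf: Point, post_inf: Point) -> float:
    """Mean body height divided by mean body width, from the four corners."""
    height = (math.dist(ant_sup, ant_inf) + math.dist(post_sup, post_inf)) / 2
    width = (math.dist(ant_sup, post_sup) + math.dist(ant_inf, post_inf)) / 2
    return height / width


print(height_width_ratio((0, 0), (10, 0), (0, 8), (10, 8)))  # 0.8
print(concavity_depth((0, 8), (5, 9), (10, 8)))  # 1.0
```

In this toy example the body is wider than it is tall (ratio 0.8), and the lower border dips 1 pixel below the line between its corners. In cervical vertebral maturation staging, a deepening lower-border concavity and increasingly tall vertebral bodies are associated with more advanced skeletal maturity.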

Addressing this challenge, a multidisciplinary team from Korea University Anam Hospital, KAIST, and the University of Ulsan developed ARNet-v2, an interactive deep learning framework that automates landmark detection on the cervical vertebrae. Their approach goes beyond previous AI models by letting a clinician make a single manual correction, which the system then propagates automatically to related anatomical points. This innovative interactive mechanism greatly reduces the need for repeated manual refinement, speeding up the workflow and improving accuracy.
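ARNet-v2 learns how a correction should affect the other landmarks, and the article does not describe that mechanism in detail. To illustrate the general idea of propagating one click, here is a deliberately simple, hand-written stand-in that is not the paper's method: the corrected landmark moves to the clicked position, and every other landmark shifts by the same displacement scaled by a Gaussian weight that decays with its distance from the corrected point. The function name and the `sigma` parameter (in pixels) are assumptions for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in pixels


def propagate_correction(landmarks: List[Point], idx: int, corrected: Point,
                         sigma: float = 20.0) -> List[Point]:
    """Hypothetical propagation rule: move landmark `idx` to `corrected` and
    shift all other landmarks by the same displacement, attenuated by a
    Gaussian of their distance to the corrected landmark."""
    cx, cy = landmarks[idx]
    dx, dy = corrected[0] - cx, corrected[1] - cy
    updated = []
    for x, y in landmarks:
        d2 = (x - cx) ** 2 + (y - cy) ** 2
        weight = math.exp(-d2 / (2 * sigma ** 2))  # weight is 1 at the corrected landmark
        updated.append((x + weight * dx, y + weight * dy))
    return updated


print(propagate_correction([(0, 0), (10, 0), (100, 0)], idx=0, corrected=(0, 5)))
```

With `sigma=20`, a neighboring landmark 10 pixels away moves about 88% as far as the corrected one, while a landmark 100 pixels away barely moves. A learned model can instead exploit anatomical regularities, such as the consistent arrangement of adjacent vertebrae, which a fixed distance kernel cannot capture.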

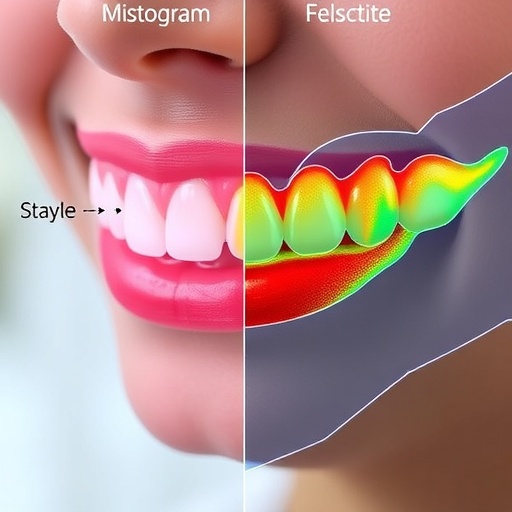

The architecture of ARNet-v2 is centered on its Attend-and-Refine mechanism, which iteratively focuses attention on challenging regions of the image and refines landmark locations over successive stages. This progressive refinement lets the network converge quickly on high-confidence keypoints while requiring minimal user input. This interactivity distinguishes ARNet-v2 from conventional one-shot detection algorithms, which often falter when anatomy is complex or image quality varies.
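The article describes Attend-and-Refine only at a high level. As a rough analogue, a generic coarse-to-fine keypoint localizer (a common technique, not ARNet-v2's learned attention modules) can be sketched with NumPy. It takes the heatmap's global maximum as a first guess, then repeatedly re-centers a shrinking window on the current estimate and replaces the estimate with the heatmap-weighted centroid inside that window. The function name and the window radii are illustrative assumptions.

```python
import numpy as np


def refine_keypoint(heatmap: np.ndarray, radii=(6, 3)) -> tuple:
    """Coarse-to-fine localization of one keypoint on a 2-D heatmap.
    Returns a sub-pixel (row, col) estimate."""
    h, w = heatmap.shape
    # Coarse stage: the global maximum gives an integer-pixel first guess.
    row, col = np.unravel_index(np.argmax(heatmap), heatmap.shape)
    est = np.array([row, col], dtype=float)
    # Refinement stages: weighted centroid inside progressively smaller windows.
    for r in radii:
        cy, cx = int(round(est[0])), int(round(est[1]))
        y0, y1 = max(cy - r, 0), min(cy + r + 1, h)
        x0, x1 = max(cx - r, 0), min(cx + r + 1, w)
        patch = np.clip(heatmap[y0:y1, x0:x1], 0, None)  # ignore negative responses
        total = patch.sum()
        if total == 0:
            break  # nothing to refine against; keep the current estimate
        ys, xs = np.mgrid[y0:y1, x0:x1]
        est = np.array([(ys * patch).sum() / total, (xs * patch).sum() / total])
    return float(est[0]), float(est[1])


# Synthetic heatmap: a Gaussian peak at the sub-pixel position (20.3, 30.6).
ys, xs = np.mgrid[0:64, 0:64]
hm = np.exp(-((ys - 20.3) ** 2 + (xs - 30.6) ** 2) / (2 * 1.5 ** 2))
print(refine_keypoint(hm))
```

Averaging over a local window recovers sub-pixel positions that a plain argmax, limited to whole pixels, cannot; shrinking the window keeps distant responses, such as a neighboring vertebra, from pulling the estimate away.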

The model was trained on more than 5,700 radiographs covering diverse anatomical presentations and image qualities, to make it robust. To confirm that it generalizes, the team also evaluated it on four publicly available medical imaging datasets. In comparative tests, ARNet-v2 reduced landmark prediction failures by up to 67% relative to state-of-the-art baselines and cut the number of required manual corrections by nearly 50%, supporting the model's improved precision and efficiency.
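The article does not define what counts as a landmark "failure". A common convention in landmark detection is to count a prediction as failed when its Euclidean distance from the ground truth exceeds a fixed threshold. Under that assumption, the Python below computes a failure rate and the relative reduction used to express figures such as "67% fewer failures". The threshold and the example numbers are hypothetical, not results from the study.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def failure_rate(pred: Sequence[Point], truth: Sequence[Point],
                 threshold: float) -> float:
    """Fraction of landmarks whose Euclidean error exceeds `threshold`
    (same units as the coordinates, e.g. pixels or millimeters)."""
    if len(pred) != len(truth) or not pred:
        raise ValueError("pred and truth must be non-empty and equally long")
    failures = sum(math.dist(p, t) > threshold for p, t in zip(pred, truth))
    return failures / len(pred)


def relative_reduction(baseline: float, new: float) -> float:
    """Relative drop from `baseline` to `new`; 0.67 means 67% lower."""
    return (baseline - new) / baseline


print(round(relative_reduction(0.15, 0.05), 2))  # 0.67
print(relative_reduction(4, 2))  # 0.5
```

For example, a drop from a 15% failure rate to 5% is a relative reduction of about 67%, and going from four corrections per image to two is a 50% reduction; these inputs are illustrative only.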

Clinically, the implications extend beyond the algorithm itself. Because ARNet-v2 extracts skeletal maturity information from a single lateral cephalometric X-ray, it removes the need for the additional hand-wrist radiographs traditionally used in bone age estimation. This directly reduces radiation exposure, lowers healthcare costs, and streamlines diagnostic workflows, allowing orthodontists to make more timely, better-informed treatment decisions while easing the burden on healthcare infrastructure.

Professor In-Seok Song, a leading expert in oral and maxillofacial surgery, emphasized the system’s potential to transform clinical standards. He highlighted that ARNet-v2’s ability to pinpoint precise cervical vertebra keypoints “enables accurate estimation of a child’s pubertal growth peak, a key factor in determining the timing of orthodontic treatment.” By mitigating the dependency on additional radiographic procedures, this technology promises safer, cost-effective patient management.

Importantly, the framework’s versatility suggests applicability beyond orthodontics. The underlying Attend-and-Refine methodology shows promise for enhancing annotation precision in other complex medical imaging tasks, including brain MRI segmentation, retinal imaging diagnostics, and cardiac ultrasound analysis. The iterative refinement paradigm could be adapted to diverse anatomical landmarks and imaging modalities, unlocking broader clinical utility.

Moreover, the algorithm’s capacity for interactive correction holds promise outside medicine. Fields requiring rapid, accurate annotation of visual data, such as robotics, autonomous vehicle navigation, and augmented reality, could benefit immensely from this technology. The model’s ability to integrate minimal human feedback for high-fidelity automated labeling represents a leap forward in human-AI collaboration paradigms.

From a workflow perspective, integrating ARNet-v2 into clinical environments can significantly reduce practitioner workload. Its interactive design supports real-time adjustment, making it well suited to busy hospitals, resource-limited clinics, and remote telemedicine services. By streamlining bone age and growth peak assessment, the system gives clinicians actionable insights while minimizing tedious manual labor.

Looking ahead, the success of ARNet-v2 suggests a future where AI-assisted skeletal maturity and orthodontic growth analysis become routine clinical procedures. The fusion of automated radiographic interpretation with personalized growth prediction marks a paradigm shift in pediatric care. Dr. Jinhee Kim, one of the project’s lead researchers, remarked that “together, these aspects position our work as a significant step forward in AI-assisted bone-age assessment and pediatric orthodontics.”

In summary, ARNet-v2’s introduction heralds a new era of precision, efficiency, and safety in pediatric orthodontic diagnostics. By harnessing deep learning and interactive refinement, this system offers tangible benefits—reducing unnecessary imaging, lowering costs, enhancing diagnostic fidelity, and ultimately improving patient outcomes. As the technology matures, it holds the promise of reshaping clinical standards in bone age assessment and beyond, ushering in a future where AI augments human expertise seamlessly.

Subject of Research: Not applicable

Article Title: Attend-and-Refine: Interactive keypoint estimation and quantitative cervical vertebrae analysis for bone age assessment

News Publication Date: 29-Jul-2025

References: DOI: 10.1016/j.media.2025.103715

Image Credits: Korea University College of Medicine

Keywords: Orthodontics, Dentistry, Medical specialties, Human health, Health and medicine

Tags: AI in orthodontics, AI-powered healthcare solutions, automated growth prediction system, bone age assessment technology, cervical vertebrae analysis, deep learning in healthcare, intra-observer variability in growth assessment, manual annotation in orthodontics, minimizing radiation exposure in children, orthodontic treatment planning advancements, pediatric orthodontics innovation, X-ray landmark detection