Music, often called the universal language, is found in every culture. Could a ‘musical instinct’, then, be shared to some degree across cultures despite their very different environments?

Credit: KAIST Complex Systems and Statistical Physics Lab

On January 16, a KAIST research team led by Professor Hawoong Jung of the Department of Physics announced that it had used an artificial neural network model to identify the principle by which musical instincts emerge in the human brain without special learning.

Many researchers have previously tried to identify the similarities and differences between the music of different cultures and to understand the origin of its universality. A paper published in Science in 2019 showed that music is produced in all ethnographically distinct cultures and that similar forms of beats and tunes are used. Neuroscientists have also found that a specific part of the human brain, the auditory cortex, is responsible for processing musical information.

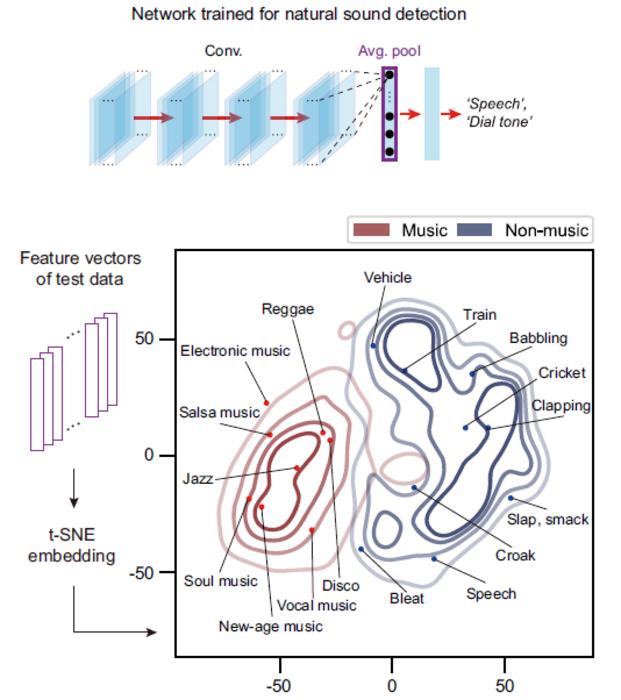

Professor Jung’s team used an artificial neural network model to show that cognitive functions for music form spontaneously as a result of processing auditory information received from nature, without the network being taught music. The research team used AudioSet, a large-scale collection of sound data provided by Google, and trained the artificial neural network on its various sounds. Interestingly, the team discovered that certain neurons within the network model responded selectively to music. In other words, they observed the spontaneous emergence of neurons that reacted minimally to other sounds, such as those of animals, nature, or machines, but responded strongly to various forms of music, both instrumental and vocal.
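As a rough illustration of what “responding selectively to music” could mean in practice, the Python sketch below scores each unit by a normalized difference between its mean response to music and its mean response to other sounds. The function names, the index formula, and the 0.5 threshold are assumptions made for this example, and the published study may quantify selectivity differently.

```python
from statistics import mean


def selectivity_index(music_responses, other_responses):
    """Normalized difference of mean responses, in [-1, 1].

    Assumes non-negative activations (for example, outputs of a ReLU layer).
    This index is an illustrative choice, not the measure used in the paper.
    """
    m_music = mean(music_responses)
    m_other = mean(other_responses)
    total = m_music + m_other
    if total == 0:
        return 0.0  # a silent unit is treated as non-selective
    return (m_music - m_other) / total


def music_selective_units(responses_music, responses_other, threshold=0.5):
    """Return indices of units whose index exceeds a hypothetical threshold.

    Each argument is a list with one entry per unit; each entry is that
    unit's list of responses to the corresponding sound category.
    """
    return [
        unit
        for unit, (music, other) in enumerate(zip(responses_music, responses_other))
        if selectivity_index(music, other) > threshold
    ]
```

With made-up responses in which one unit fires about ten times more strongly for music than for other sounds and a second unit fires equally for both, only the first unit would be returned.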

The neurons in the artificial neural network model behaved similarly to those in the auditory cortex of a real brain. For example, the artificial neurons responded less to music that had been cut into short segments and rearranged. This indicates that the spontaneously generated music-selective neurons encode the temporal structure of music. This property was not limited to a specific genre, but emerged across 25 genres, including classical, pop, rock, jazz, and electronic.
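The rearrangement test described above can be sketched in a few lines of Python: a clip is cut into fixed-length segments, and the segments are shuffled, so the same audio samples remain but their long-range order is lost. The function name, the segment length, and the seeded shuffle are assumptions for illustration only, not the team’s actual procedure.

```python
import random


def scramble_segments(samples, segment_len, seed=0):
    """Cut a sequence of audio samples into segments and shuffle their order.

    The content inside each segment is untouched; only the order of the
    segments changes, which disrupts temporal structure beyond segment_len.
    """
    if segment_len <= 0:
        raise ValueError("segment_len must be positive")
    segments = [
        samples[start:start + segment_len]
        for start in range(0, len(samples), segment_len)
    ]
    rng = random.Random(seed)  # seeded so that the example is reproducible
    rng.shuffle(segments)
    return [sample for segment in segments for sample in segment]
```

A unit that encodes temporal structure would be expected to respond less to `scramble_segments(clip, segment_len)` than to the original `clip`, even though both contain exactly the same samples.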

< Figure 1. Illustration of the musicality of the brain and artificial neural network (created with DALL·E3 AI based on the paper content) >

Furthermore, suppressing the activity of the music-selective neurons was found to greatly reduce the network’s accuracy in recognizing other natural sounds. That is to say, the neural function that processes musical information also helps process other sounds, and ‘musical ability’ may be an instinct formed through evolutionary adaptation to better process sounds from nature.
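The suppression experiment can be pictured with a toy Python example: selected units are silenced by setting their activations to zero, and recognition accuracy is measured again. The linear readout, the function names, and the data layout below are hypothetical simplifications and are not taken from the study’s network.

```python
def ablate(activations, units):
    """Return a copy of the activations with the given unit indices set to zero."""
    silenced = set(units)
    return [0.0 if i in silenced else a for i, a in enumerate(activations)]


def classify(activations, weights):
    """Toy linear readout: return the index of the class with the highest score."""
    scores = [sum(w * a for w, a in zip(row, activations)) for row in weights]
    return max(range(len(scores)), key=scores.__getitem__)


def accuracy(samples, labels, weights, ablated_units=()):
    """Fraction of samples classified correctly after silencing ablated_units."""
    correct = sum(
        classify(ablate(sample, ablated_units), weights) == label
        for sample, label in zip(samples, labels)
    )
    return correct / len(samples)
```

Comparing `accuracy(samples, labels, weights)` with `accuracy(samples, labels, weights, ablated_units=selected)` shows how much the readout depends on the silenced units. A large drop would correspond to the kind of impairment reported above.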

Professor Hawoong Jung, who advised the research, said, “The results of our study imply that evolutionary pressure has contributed to forming the universal basis for processing musical information in various cultures.” As for the significance of the research, he explained, “We look forward to this artificially built model with human-like musicality becoming an original model for various applications, including AI music generation, musical therapy, and research in musical cognition.” He also commented on its limitations, adding, “This research, however, does not take into consideration the developmental process that follows the learning of music, and it must be noted that this is a study on the foundation of processing musical information in early development.”

< Figure 2. The artificial neural network, trained to recognize non-musical natural sounds in cyberspace, distinguishes between music and non-music. >

This research, conducted by first author Dr. Gwangsu Kim of the KAIST Department of Physics (current affiliation: MIT Department of Brain and Cognitive Sciences) and Dr. Dong-Kyum Kim (current affiliation: IBS), was published in Nature Communications under the title “Spontaneous emergence of rudimentary music detectors in deep neural networks”.

This research was supported by the National Research Foundation of Korea.

Journal

Nature Communications

DOI

10.1038/s41467-023-44516-0

Method of Research

Meta-analysis

Subject of Research

Not applicable

Article Title

Spontaneous emergence of rudimentary music detectors in deep neural networks

Article Publication Date

2-Jan-2024

COI Statement

N/A