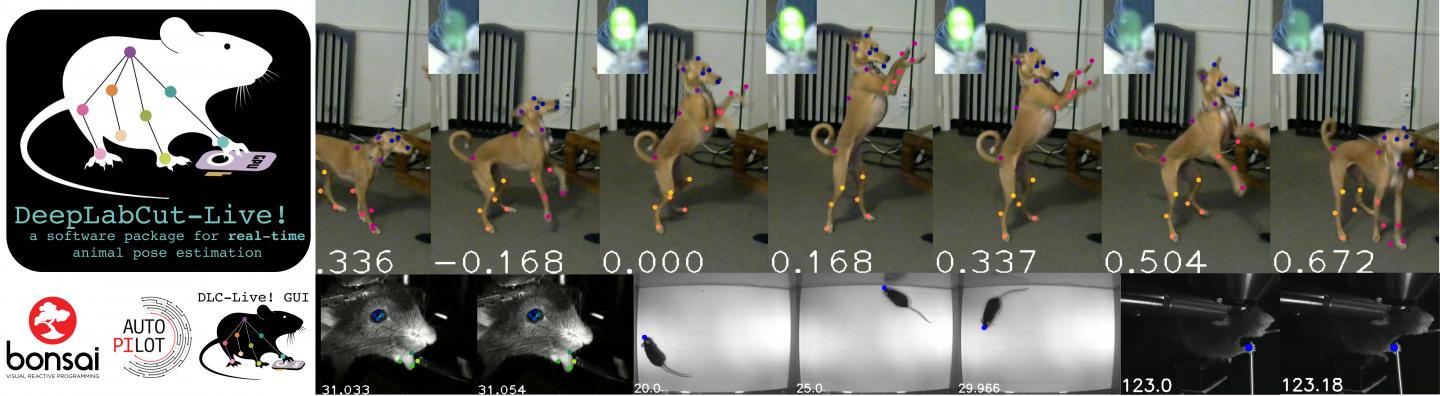

Credit: Kane et al., eLife 2020

Gollum in “The Lord of the Rings”, Thanos in the “Avengers”, Snoke in “Star Wars”, the Na’vi in “Avatar”: we have all experienced the wonders of motion-capture, a cinema technique that tracks an actor’s movements and “translates” them into computer animation to create a moving, emoting – and maybe one day Oscar-winning – digital character.

But what many might not realize is that motion capture isn’t limited to the big screen: it also extends into science. Behavioral scientists have been developing and using similar tools to study and analyze the posture and movement of animals under a variety of conditions. However, motion-capture approaches require the subject to wear a complex suit with markers that let the computer “know” where each part of the body is in three-dimensional space. That might be okay for a professional actor, but animals tend to resist dressing up.

To solve the problem, scientists have begun combining motion capture with deep learning, a method that lets a computer essentially teach itself how to perform a task, such as recognizing a specific “key-point” in videos. The idea is to teach the computer to track and even predict the movements or posture of an animal without the need for motion-capture markers.

But to be of meaningful use to behavioral science, “marker-less” tracking tools must also allow scientists to control or stimulate the animal’s neural activity quickly, literally in real time. This is particularly important in experiments that try to work out which part of the nervous system underlies a specific movement or posture.

DeepLabCut: deep-learning, marker-less posture tracking

One of the scientists spearheading the marker-less approach is Mackenzie Mathis, who recently joined EPFL’s School of Life Sciences from Harvard. Mathis’ lab has been developing a deep-learning software toolbox named DeepLabCut that can track and identify animal movements in real time directly from video. Now, in a paper published in eLife, Mathis and her Harvard post-doctoral fellow Gary Kane present a new version named DeepLabCut-Live! (DLC-Live!), which features low latency (within 15 msec at over 100 FPS) and can be integrated into other software packages. With an included module that forward-predicts posture, it can even achieve zero-latency feedback.
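To give a feel for what forward-predicting posture means, here is a minimal sketch. It assumes the simplest possible model, constant-velocity extrapolation from the two most recent frames, which is not necessarily the method the DLC-Live! module uses. The function name `forward_predict` and the pose layout (a list of x, y pairs) are hypothetical and are not part of the DLC-Live! API.

```python
def forward_predict(prev_pose, curr_pose, dt, latency):
    """Extrapolate each keypoint `latency` seconds past the current frame.

    prev_pose, curr_pose: lists of (x, y) keypoint positions in pixels
    dt: time between the two frames, in seconds
    latency: processing delay to compensate for, in seconds
    Assumption: each keypoint keeps moving at its most recent velocity.
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    predicted = []
    for (x0, y0), (x1, y1) in zip(prev_pose, curr_pose):
        vx = (x1 - x0) / dt  # pixels per second along x
        vy = (y1 - y0) / dt  # pixels per second along y
        predicted.append((x1 + vx * latency, y1 + vy * latency))
    return predicted


# Two frames 10 ms apart (100 FPS), compensating for a 15 ms delay.
prev_pose = [(100.0, 50.0), (200.0, 80.0)]
curr_pose = [(102.0, 50.0), (200.0, 79.0)]
predicted = forward_predict(prev_pose, curr_pose, dt=0.010, latency=0.015)
```

If the prediction horizon matches the processing delay, the feedback is effectively aligned with where the animal is now rather than where it was when the frame was captured. The price is prediction error whenever the movement changes abruptly.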

DeepLabCut was originally developed in order to study and analyze the way animals adapt their posture in response to changes in their environment. “We’re interested in how neural circuits control behavior and in particular in how animals adapt to quick changes in their environment,” says Mathis.

“For example, you pour coffee in a mug and when it’s full it has a particular weight. But, as you drink it the weight changes, yet you don’t need to actively think about changing your grip force or how much you have to lift your arm to reach your mouth. This is a very natural thing we do and we can adapt to these changes very quickly. But this actually involves a massive amount of interconnected neurocircuitry, from the cortex all the way to the spinal cord.”

DLC-Live! is the new update to a state-of-the-art “animal pose estimation package” that uses tailored networks to predict the posture of animals based on video frames and that, offline, allows for up to 2,500 FPS on a standard GPU. Its high-throughput analysis makes it invaluable for studying and probing the neural mechanisms of behavior. Now, with this new package, its low latency allows researchers to give animals feedback in real time and test the behavioral functions of specific neural circuits. More importantly, it can interface with hardware used in posture studies to provide feedback to the animals.

“This is important for things in our own research program where you want to be able to manipulate the behavior of an animal,” says Mathis. “For example, in one behavioral study we do, we train a mouse to play a video game in the lab, and we want to shut down particular neurons or brain circuits in a really specific time window, i.e., trigger a laser to do optogenetics or trigger an external reward.”

“We wanted to make DLC-Live! super user-friendly and make it work for any species in any setting,” she adds. “It’s really modular and can be used in a lot of different contexts; the person who’s running the experiments can set up kind of the conditions and what they want to trigger quite easily with our graphical user interface. And we’ve also built in the ability to use it with other common neuroscience platforms.” Two of those commonly used platforms are Bonsai and Autopilot, and in the paper, Mathis and her colleagues who developed those software packages show how DLC-Live! can easily work with them.
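The kind of trigger condition an experimenter might set up can be sketched in a few lines. This is an illustration only: the class `ThresholdTrigger`, the pose layout (keypoint name mapped to x, y and a confidence value) and the threshold values are all assumptions, not the DLC-Live! interface, and the hardware call is replaced by a simple event log.

```python
class ThresholdTrigger:
    """Fire once each time a keypoint crosses an x-position threshold.

    Hypothetical sketch: a real setup would call laser or reward hardware
    where this class appends to `events`.
    """

    def __init__(self, keypoint, x_threshold, min_likelihood=0.9):
        self.keypoint = keypoint
        self.x_threshold = x_threshold
        self.min_likelihood = min_likelihood
        self.was_above = False  # state from the previous frame
        self.events = []        # frame indices at which the trigger fired

    def process(self, pose, frame_index):
        x, _y, likelihood = pose[self.keypoint]
        # Ignore low-confidence detections so tracking noise cannot fire.
        above = likelihood >= self.min_likelihood and x > self.x_threshold
        if above and not self.was_above:
            self.events.append(frame_index)  # stand-in for a hardware call
        self.was_above = above
        return pose


# Simulated paw positions over six frames: (x, y, likelihood).
frames = [
    {"paw": (10.0, 5.0, 0.99)},
    {"paw": (20.0, 5.0, 0.99)},
    {"paw": (35.0, 5.0, 0.99)},  # crosses the threshold: fires
    {"paw": (40.0, 5.0, 0.50)},  # low confidence: treated as not above
    {"paw": (15.0, 5.0, 0.99)},
    {"paw": (38.0, 5.0, 0.95)},  # crosses again: fires
]
trigger = ThresholdTrigger("paw", x_threshold=30.0)
for index, pose in enumerate(frames):
    trigger.process(pose, index)
events = trigger.events
```

Firing only on the crossing, rather than on every frame above the threshold, keeps a single movement from triggering the stimulus repeatedly.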

“It’s economical, it’s scalable, and we hope it’s a technical advance that allows even more questions to be asked about how the brain controls behavior,” says Mathis.

###

Other contributors

Harvard University

NeuroGEARS

Institute of Neuroscience

University of Oregon

Funding

Rowland Institute at Harvard University

Chan Zuckerberg Initiative DAF

Silicon Valley Community Foundation

Harvard Mind, Brain, Behavior Award

National Science Foundation

Reference

Gary Kane, Gonçalo Lopes, Jonny L. Saunders, Alexander Mathis, Mackenzie W. Mathis. Real-time, low-latency closed-loop feedback using markerless posture tracking. eLife 08 December 2020;9:e61909 DOI: 10.7554/eLife.61909

Media Contact

Nik Papageorgiou

