For the last decade, scientists have deployed increasingly capable underwater robots to map and monitor pockets of the ocean, track the health of fisheries, and survey marine habitats and species. In general, such robots are effective at carrying out low-level tasks that human engineers specifically assign to them, a tedious and time-consuming process for the engineers.

Researchers watch underwater footage taken by various AUVs exploring Australia’s Scott Reef. Courtesy of the researchers

When deploying autonomous underwater vehicles (AUVs), much of an engineer’s time is spent writing scripts, or low-level commands, to direct a robot through a mission plan. Now a new programming approach developed by MIT engineers gives robots more “cognitive” capabilities: humans specify high-level goals, and the robot performs the high-level decision-making needed to figure out how to achieve them.

For example, an engineer may give a robot a list of goal locations to explore, along with any time constraints, as well as physical directions, such as staying a certain distance above the seafloor. Using the system devised by the MIT team, the robot can then plan out a mission, choosing which locations to explore, in what order, within a given timeframe. If an unforeseen event prevents the robot from completing a task, it can choose to drop that task, or reconfigure the hardware to recover from a failure, on the fly.
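The behavior described above can be illustrated with a small sketch. This is not the MIT team's planner: it is a simple greedy stand-in, assuming each goal has a position, a priority, and an on-site survey time, and that the vehicle travels in straight lines at a constant speed. The names `Goal`, `plan_mission`, `budget_s`, and `speed_mps` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class Goal:
    """A hypothetical science goal; all fields are illustrative assumptions."""
    name: str
    x: float         # metres east of the start point
    y: float         # metres north of the start point
    priority: int    # higher means more important
    survey_s: float  # time needed on site, in seconds


def plan_mission(goals, budget_s, speed_mps=0.5, start=(0.0, 0.0)):
    """Greedy sketch: take goals from most to least important and keep
    each one only if travel plus survey time still fits in the budget."""
    plan, dropped = [], []
    pos, remaining = start, budget_s
    # Ties in priority are broken by name so the result is deterministic.
    for goal in sorted(goals, key=lambda g: (-g.priority, g.name)):
        travel_s = hypot(goal.x - pos[0], goal.y - pos[1]) / speed_mps
        cost_s = travel_s + goal.survey_s
        if cost_s <= remaining:
            plan.append(goal.name)
            remaining -= cost_s
            pos = (goal.x, goal.y)
        else:
            # The goal does not fit in the remaining time, so it is dropped.
            dropped.append(goal.name)
    return plan, dropped, remaining


goals = [
    Goal("reef_north", 0, 100, priority=3, survey_s=600),
    Goal("trench", 300, 0, priority=1, survey_s=900),
    Goal("lagoon", 0, 200, priority=2, survey_s=300),
]
plan, dropped, remaining = plan_mission(goals, budget_s=1800)
print(plan, dropped, remaining)
```

With a 30-minute budget, the two higher-priority sites fit and the lowest-priority one is dropped. Re-running `plan_mission` with a smaller budget or a shorter goal list is one simple way to mimic re-planning after an unforeseen event.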

In March, the team tested the autonomous mission-planning system during a research cruise off the western coast of Australia. Over three weeks, the MIT engineers, along with groups from Woods Hole Oceanographic Institution, the Australian Centre for Field Robotics, the University of Rhode Island, and elsewhere, tested several classes of AUVs and their ability to work cooperatively to map the ocean environment.

The MIT researchers tested their system on an autonomous underwater glider, and demonstrated that the robot was able to operate safely among a number of other autonomous vehicles, while receiving higher-level commands. The glider, using the system, was able to adapt its mission plan to avoid getting in the way of other vehicles, while still achieving its most important scientific objectives. If another vehicle was taking longer than expected to explore a particular area, the glider, using the MIT system, would reshuffle its priorities, and choose to stay in its current location longer, in order to avoid potential collisions.

“We wanted to show that these vehicles could plan their own missions, and execute, adapt, and re-plan them alone, without human support,” says Brian Williams, a professor of aeronautics and astronautics at MIT, and principal developer of the mission-planning system. “With this system, we were showing we could safely zigzag all the way around the reef, like an obstacle course.”

Williams and his colleagues will present the mission-planning system in June at the International Conference on Automated Planning and Scheduling, in Israel.

All systems go

When developing the autonomous mission-planning system, Williams’ group took inspiration from the “Star Trek” franchise and the top-down command center of the fictional starship Enterprise, after which Williams modeled and named the system.

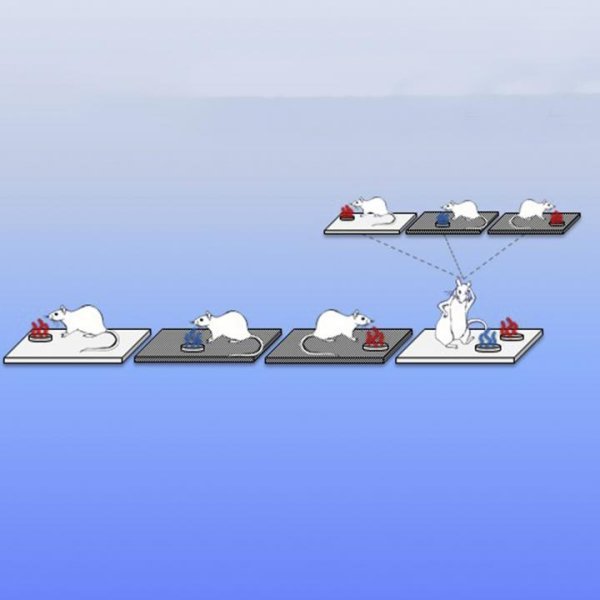

Just as a hierarchical crew runs the fictional starship, Williams’ Enterprise system incorporates levels of decision-makers. For instance, one component of the system acts as a “captain,” making higher-level decisions to plan out the overall mission, deciding where and when to explore. Another component functions as a “navigator,” planning out a route to meet mission goals. The last component works as a “doctor,” or “engineer,” diagnosing and repairing problems autonomously.

“We can give the system choices, like, ‘Go to either this or that science location and map it out,’ or ‘Communicate via an acoustic modem, or a satellite link,’” Williams explains. “What the system does is, it makes those choices, but makes sure it satisfies all the timing constraints and doesn’t collide with anything along the way. So it has the ability to adapt to its environment.”
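The layered division of labor described above, along with the kinds of choices Williams mentions, can be sketched roughly as follows. This is an illustrative toy, not the Enterprise system itself: the class names `Captain`, `Navigator`, `Engineer`, and `Vehicle`, the one-dimensional positions, and the device names are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Vehicle:
    """Hypothetical hardware model: each function lists its candidate
    devices in order of preference."""
    devices: dict = field(
        default_factory=lambda: {"comms": ["acoustic_modem", "satellite_link"]}
    )
    failed: set = field(default_factory=set)


class Captain:
    """Decides which goals to pursue (here: the highest priorities first)."""

    def choose_goals(self, goals, max_goals):
        ranked = sorted(goals, key=lambda g: -g[1])
        return [name for name, _ in ranked[:max_goals]]


class Navigator:
    """Orders the chosen goals into a route (here: by position along a
    one-dimensional transect, an assumption made to keep the sketch short)."""

    def route(self, chosen, positions):
        return sorted(chosen, key=lambda name: positions[name])


class Engineer:
    """Picks a working device for each function, skipping failed ones."""

    def repair(self, vehicle):
        config = {}
        for function, candidates in vehicle.devices.items():
            working = [d for d in candidates if d not in vehicle.failed]
            config[function] = working[0] if working else None
        return config


goals = [("site_a", 2), ("site_b", 5), ("site_c", 1)]
positions = {"site_a": 40, "site_b": 90, "site_c": 10}

chosen = Captain().choose_goals(goals, max_goals=2)
route = Navigator().route(chosen, positions)

vehicle = Vehicle()
vehicle.failed.add("acoustic_modem")  # simulate a fault
config = Engineer().repair(vehicle)
print(route, config)
```

Here the "captain" keeps the two most important sites, the "navigator" orders them along the transect, and the "engineer" falls back to the satellite link once the acoustic modem is marked as failed. A real system would also have to check timing constraints and collision avoidance, which this sketch leaves out.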

Autonomy in the sea

The system is similar to one that Williams developed for NASA following the loss of the Mars Observer, a spacecraft that, days before its scheduled insertion into Mars’ orbit in 1993, lost contact with NASA.

“There were human operators on Earth who were experts in diagnosis and repair, and were ready to save the spacecraft, but couldn’t communicate with it,” Williams recalls. “Subsequently, NASA realized they needed systems that could reason at the cognitive level like engineers, but that were onboard the spacecraft.”

Williams, who at the time was working at NASA’s Ames Research Center, was tasked with developing an autonomous system that would enable spacecraft to diagnose and repair problems without human assistance. The system was successfully tested on NASA’s Deep Space 1 probe, which performed an asteroid flyby in 1999.

“That was the first chance to demonstrate goal-directed autonomy in deep space,” Williams says. “This was a chance to do the same thing under the sea.”

By giving robots control of higher-level decision-making, Williams says such a system would free engineers to think about overall strategy, while AUVs determine for themselves a specific mission plan. Such a system could also reduce the size of the operational team needed on research cruises. And, most significantly from a scientific standpoint, an autonomous planning system could enable robots to explore places that otherwise would not be traversable. For instance, with an autonomous system, robots may not have to be in continuous contact with engineers, freeing the vehicles to explore more remote recesses of the sea.

“If you look at the ocean right now, we can use Earth-orbiting satellites, but they don’t penetrate much below the surface,” Williams says. “You could send sea vessels which send one autonomous vehicle, but that doesn’t show you a lot. This technology can offer a whole new way to observe the ocean, which is exciting.”

Story Source:

The above story is based on materials provided by the MIT News Office, written by Jennifer Chu.