To help people suffering paralysis from injury, stroke or disease, scientists have invented brain-machine interfaces that record electrical signals of neurons in the brain and translate them to movement. Usually, that means the neural signals direct a device, like a robotic arm.

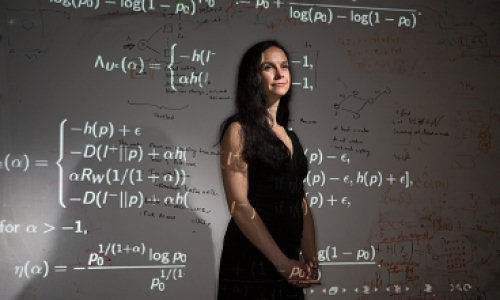

Cornell University researcher Maryam Shanechi, assistant professor of electrical and computer engineering, working with Ziv Williams, assistant professor of neurosurgery at Harvard Medical School, is bringing brain-machine interfaces to the next level: Instead of signals directing a device, she hopes to help paralyzed people move their own limbs, just by thinking about it.

When paralyzed patients imagine or plan a movement, neurons in the brain’s motor cortical areas still activate even though the communication link between the brain and muscles is broken. With sensors implanted in these brain areas, neural activity can be recorded and translated into the patient’s desired movement using a mathematical transform called the decoder. These interfaces allow patients to generate movements directly with their thoughts.

In a paper published online Feb. 18 in Nature Communications, Shanechi, Williams and colleagues describe a cortical-spinal prosthesis that directs “targeted movement” in paralyzed limbs. The research team developed and tested a prosthesis that connects two subjects: one subject sends its recorded neural activity to control limb movements in a different subject that is temporarily sedated. The demonstration is a step toward brain-machine interfaces that let paralyzed humans control their own limbs using their brain activity alone.

The brain-machine interface is based on a set of real-time decoding algorithms that process neural signals to predict the targeted movement. In the experiment, one animal acted as the controller of the movement, or the “master”: it “decided” which target location to move to and generated the neural activity that was decoded into this intended movement. The decoded movement was used to directly control the limb of the other animal by electrically stimulating its spinal cord.

“The problem here is not only that of decoding the recorded neural activity into the intended movement, but also that of properly stimulating the spinal cord to move the paralyzed limb according to the decoded movement,” Shanechi said.

The scientists focused on decoding the target endpoint of the movement as opposed to its detailed kinematics. This allowed them to match the decoded target with a set of spinal stimulation parameters that generated limb movement toward that target. They demonstrated that the alert animal could produce two-dimensional movement in the sedated animal’s limb – a breakthrough in brain-machine interface research.

“By focusing on the target end point of movement as opposed to its detailed kinematics, we could reduce the complexity of solving for the appropriate spinal stimulation parameters, which helped us achieve this 2-D movement,” Williams said.
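As a rough illustration of the target-based idea described above, the sketch below decodes a discrete target from spike counts and then looks up a matching set of stimulation settings. It is not the study's algorithm: the Poisson maximum-likelihood decoder, the target names, the spike-count table, and the stimulation fields (`electrode`, `current_uA`) are all assumptions made up for this example.

```python
import math

# Hypothetical tuning table: expected spike counts per neuron for each target.
# These numbers are invented for illustration, not taken from the study.
MEAN_COUNTS = {
    "left":  [12.0, 3.0, 7.0],
    "right": [4.0, 11.0, 6.0],
    "up":    [6.0, 6.0, 14.0],
}

# Hypothetical lookup from a decoded target to spinal stimulation settings.
STIM_PARAMS = {
    "left":  {"electrode": 0, "current_uA": 40},
    "right": {"electrode": 1, "current_uA": 55},
    "up":    {"electrode": 2, "current_uA": 35},
}


def poisson_log_likelihood(counts, means):
    """Log-probability of the observed counts, assuming independent Poisson neurons."""
    return sum(
        k * math.log(m) - m - math.lgamma(k + 1)
        for k, m in zip(counts, means)
    )


def decode_target(counts):
    """Return the target whose expected counts best explain the observation."""
    return max(
        MEAN_COUNTS,
        key=lambda target: poisson_log_likelihood(counts, MEAN_COUNTS[target]),
    )


def stimulation_for(counts):
    """Decode the target, then look up its (assumed) stimulation settings."""
    target = decode_target(counts)
    return target, STIM_PARAMS[target]
```

Because only the endpoint is decoded, the stimulation side reduces to one table lookup per target instead of a continuous mapping from detailed kinematics, which is the kind of simplification the researchers describe.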

Part of the experimental setup’s novelty was using two different animals, rather than just one with a temporarily paralyzed limb. The scientists contend that this gives them a true model of paralysis, since the master animal’s brain and the sedated animal’s limb had no physiological connection, as is the case for a paralyzed patient.

Shanechi’s lab will continue developing more sophisticated brain-machine interface architectures with principled algorithmic designs and use them to construct high-performance prosthetics. These architectures could be used to control an external device or the native limb.

“The next step is to advance the development of brain-machine interface algorithms using the principles of control theory and statistical signal processing,” Shanechi said. “Such brain-machine interface architectures could enable patients to generate complex movements using robotic arms or paralyzed limbs.”

Story Source:

The above story is based on materials provided by Cornell University.

Photo: eecs.mit.edu