In a test of the examinations system of the University of Reading in the UK, artificial intelligence (AI)-generated submissions went almost entirely undetected, and these fake answers tended to receive higher grades than those achieved by real students. Peter Scarfe of the University of Reading and colleagues present these findings in the open-access journal PLOS ONE on June 26.

Credit: Scarfe et al., 2024, PLOS ONE, CC-BY 4.0 (https://creativecommons.org/licenses/by/4.0/)

In recent years, AI tools such as ChatGPT have become more advanced and widespread, leading to concerns about students using them to cheat by submitting AI-generated work as their own. Such concerns are heightened by the fact that many universities and schools transitioned from supervised in-person exams to unsupervised take-home exams during the COVID-19 pandemic, with many now continuing such models. Tools for detecting AI-generated written text have so far not proven very successful.

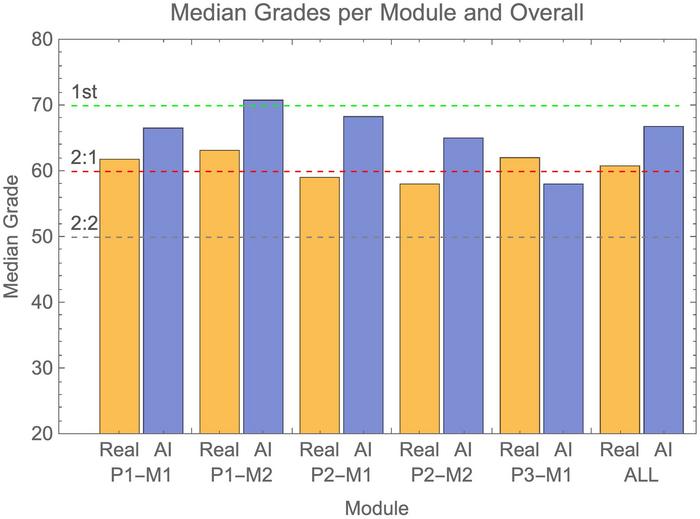

To better understand these issues, Scarfe and colleagues generated answers written entirely by the AI chatbot GPT-4 and submitted them on behalf of 33 fake students to the examinations system of the School of Psychology and Clinical Language Sciences at the University of Reading. Exam graders were unaware of the study.

The researchers found that 94% of their AI submissions went undetected. On average, the fake answers earned higher grades than real students’ answers. In 83.4% of cases, the AI submissions received higher grades than a randomly selected group of the same number of submissions from real students.
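For readers who want to see how a figure like the 83.4% above can be computed, the following is a minimal sketch of a random-draw comparison. It is an illustration only, not the authors' analysis code: the function name `fraction_ai_outscores`, the use of medians as the comparison statistic, the number of draws, and all grade values are assumptions made for this example, and the grades are made up rather than taken from the study.

```python
import random
from statistics import median


def fraction_ai_outscores(ai_grades, real_grades, n_draws=10_000, seed=0):
    """Share of random draws in which the AI median grade exceeds the
    median of an equally sized random sample of real-student grades.

    Assumption: medians are compared; the release does not state which
    summary statistic the researchers used.
    """
    rng = random.Random(seed)          # fixed seed so runs are repeatable
    ai_median = median(ai_grades)
    k = len(ai_grades)                 # sample as many real grades as AI grades
    wins = 0
    for _ in range(n_draws):
        sample = rng.sample(real_grades, k)   # draw without replacement
        if ai_median > median(sample):
            wins += 1
    return wins / n_draws


# Hypothetical grades for illustration only (not data from the study).
ai_grades = [68, 72, 65, 70, 74]
real_grades = [55, 62, 58, 71, 66, 49, 60, 64, 73, 57, 61, 59]

share = fraction_ai_outscores(ai_grades, real_grades)
print(f"AI submissions outscored the random real-student sample in {share:.1%} of draws")
```

The idea is that a single average comparison can hide a lot of variation, whereas repeating the comparison over many random groups of real students shows how often the AI submissions would come out ahead.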

These results suggest that students could not only get away with using AI to cheat but could also achieve better grades than their peers who do not cheat. The researchers also contemplate the possibility that a number of real students may have gotten away with AI-generated submissions in the course of this study.

From an academic integrity standpoint, the researchers remark, these findings are of extreme concern. They note that a return to supervised, in-person exams could help address this issue, but as AI tools continue to advance and infiltrate professional workplaces, universities might focus on working out how to embrace the “new normal” of AI in order to enhance education.

The authors add: “A rigorous blind test of a real-life university examinations system shows that exam submissions generated by artificial intelligence were virtually undetectable and robustly gained higher grades than real students. The results of the ‘Examinations Turing Test’ invite the global education sector to accept a new normal and this is exactly what we are doing at the University of Reading. New policies and advice to our staff and students acknowledge both the risks and the opportunities afforded by tools that employ artificial intelligence.”

#####

In your coverage please use this URL to provide access to the freely available article in PLOS ONE: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0305354

Citation: Scarfe P, Watcham K, Clarke A, Roesch E (2024) A real-world test of artificial intelligence infiltration of a university examinations system: A “Turing Test” case study. PLoS ONE 19(6): e0305354. https://doi.org/10.1371/journal.pone.0305354

Author Countries: UK

Funding: This work was supported by the project award “CHAI: Cyber Hygiene in AI enabled domestic life” from the Engineering and Physical Sciences Research Council (EPSRC EP/T026820/1). There was no additional external funding received for this study.

Journal: PLoS ONE

DOI: 10.1371/journal.pone.0305354

Method of Research: Experimental study

Subject of Research: People

Article Title: A real-world test of artificial intelligence infiltration of a university examinations system: A “Turing Test” case study

Article Publication Date: 26-Jun-2024

COI Statement: The authors have declared that no competing interests exist.