In the intricate realm of chemistry and molecular science, understanding the subtle forces that govern interactions between molecules remains one of the most challenging yet rewarding pursuits. Molecular interactions, ranging from solute-solvent dissolution to intricate drug-drug interplays and protein complex formations, form the backbone of countless biochemical and chemical phenomena. These interactions dictate how molecules assemble, react, and function, offering unparalleled insights that pave the way for advances in drug design, materials science, and beyond.

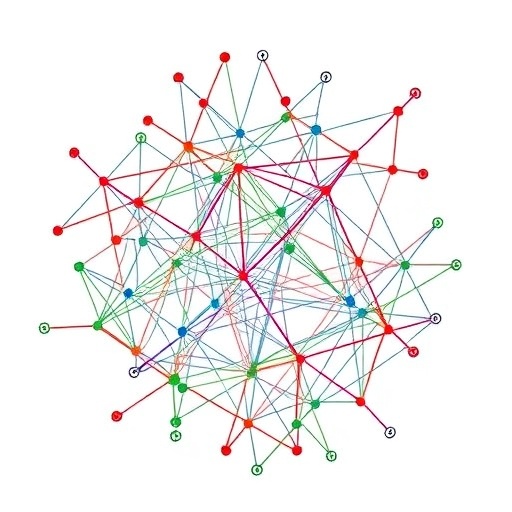

Historically, the scientific community has sought computational methods that predict these interactions both accurately and efficiently. Contemporary machine learning approaches generally fall into three paradigms. The first, known as Embedding Merging, encodes each molecule independently and then merges the resulting representations. Feature Fusion methods instead blend molecular features, often using attention mechanisms to pinpoint key attributes. Perhaps the most physically explicit strategy is the Merged Molecular Graph, in which solute and solvent atoms are combined into a single graph so that atom-to-atom interactions can be modeled directly.

Among these approaches, the Merged Molecular Graph Neural Network (MMGNN) once stood as a beacon of interpretability and performance. By connecting every possible atom pair in the fused graph and applying attention mechanisms, MMGNN showed a remarkable ability to identify and prioritize crucial intermolecular interactions. This explicit encoding gave researchers a transparent window into the molecular interactions behind each prediction, improving not just accuracy but understanding. However, this virtue came at a steep cost: because linking every atom pair makes the number of edges scale with the square of the atom count, MMGNN's computational cost grew quadratically with molecular size, limiting its scalability and making it impractical for larger or more diverse molecular systems.
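To make the all-pairs idea concrete, here is a minimal sketch of attention over every solute-solvent atom pair. It assumes plain scaled dot-product attention and toy per-atom feature vectors; the function name `cross_pair_attention` is hypothetical, and MMGNN's actual attention layer may be defined differently. What matters is the shape of the computation: one score per atom pair, so an n-atom solute and an m-atom solvent yield an n × m weight matrix.

```python
import math


def cross_pair_attention(solute_feats, solvent_feats):
    """Score every solute-solvent atom pair with scaled dot-product attention.

    Hypothetical helper for illustration. Returns a len(solute_feats) x
    len(solvent_feats) matrix; each row is a softmax over solvent atoms.
    """
    dim = len(solute_feats[0])
    scale = 1.0 / math.sqrt(dim)
    weights = []
    for u in solute_feats:
        # One raw score per (solute atom, solvent atom) pair.
        scores = [scale * sum(a * b for a, b in zip(u, v)) for v in solvent_feats]
        # Subtract the row maximum before exponentiating for numerical stability.
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Toy 2-dimensional features: 3 solute atoms and 2 solvent atoms.
solute = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
solvent = [[1.0, 1.0], [0.0, 2.0]]
attn = cross_pair_attention(solute, solvent)
print(len(attn), "x", len(attn[0]), "pair weights")  # 3 x 2 pair weights
```

Because every solute row covers every solvent atom, the work grows with the product n × m, which is why fully connected merged graphs become expensive as molecules get larger.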


Addressing this pressing bottleneck, a new neural network architecture known as the Molecular Merged Hypergraph Neural Network (MMHNN) has recently emerged, promising to revolutionize molecular interaction modeling. Rather than relying on fully connected atom-to-atom graphs, MMHNN uses hypergraphs (structures in which a single edge can connect multiple nodes at once) to encapsulate molecular subgraphs as cohesive entities, or “supernodes.” Because the network reasons over fewer, larger units instead of every atom pair, its computational overhead drops sharply, allowing it to scale to larger or more structurally complex molecules without sacrificing the richness of its interaction representation.
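A hypergraph can be stored as an incidence matrix H, where H[i][j] = 1 if atom i belongs to hyperedge j. The minimal sketch below builds H and mean-pools atom features into one vector per hyperedge, which is one plausible way to form supernodes. The grouping is supplied by hand and the helper names are hypothetical; the article does not say how MMHNN chooses its subgraphs or how it pools their features.

```python
def incidence_matrix(num_atoms, hyperedges):
    """Build an atoms-by-hyperedges 0/1 incidence matrix (hypothetical helper)."""
    H = [[0] * len(hyperedges) for _ in range(num_atoms)]
    for j, members in enumerate(hyperedges):
        for i in members:
            H[i][j] = 1
    return H


def pool_supernodes(atom_feats, hyperedges):
    """Mean-pool member-atom features into one feature vector per hyperedge."""
    dim = len(atom_feats[0])
    supernodes = []
    for members in hyperedges:
        supernodes.append(
            [sum(atom_feats[i][k] for i in members) / len(members) for k in range(dim)]
        )
    return supernodes


# Four atoms with 2-dimensional features, grouped into two hyperedges.
atom_feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 2.0]]
hyperedges = [[0, 1, 2], [3]]  # illustrative grouping, not MMHNN's rule
H = incidence_matrix(len(atom_feats), hyperedges)
supernodes = pool_supernodes(atom_feats, hyperedges)
print(H)  # [[1, 0], [1, 0], [1, 0], [0, 1]]
print(supernodes)
```

A single hyperedge may hold any number of atoms, and an atom may belong to several hyperedges; the incidence matrix represents both cases without special handling.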

Beyond efficiency gains, MMHNN stands out for incorporating Graph Information Bottleneck (GIB) theory to explicitly model repulsive or non-interacting atomic pairs. Networks that attend only to interacting pairs risk overlooking the contextual information carried by non-binding regions. By introducing mechanisms grounded in GIB, MMHNN clarifies what each node and edge in the fused molecular hypergraph represents, which makes its solvation free energy predictions more transparent.
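In the GIB literature the objective is commonly written as minimizing -I(Y; Z) + β·I(G; Z): keep what predicts the target Y while compressing away the rest of the input graph G. A frequently used variational surrogate pairs a task loss with a KL penalty that pulls learned per-pair "keep" probabilities toward a small Bernoulli prior. The sketch below applies that surrogate to hypothetical interaction probabilities for atom or supernode pairs. It is an assumption about how such a term could look, not MMHNN's published loss, and `beta` and `prior` are illustrative values.

```python
import math


def bernoulli_kl(p, r, eps=1e-12):
    """KL divergence KL(Bern(p) || Bern(r)), with p clipped away from 0 and 1."""
    p = min(max(p, eps), 1.0 - eps)
    return p * math.log(p / r) + (1.0 - p) * math.log((1.0 - p) / (1.0 - r))


def gib_style_loss(pred, target, pair_probs, beta=0.1, prior=0.3):
    """Squared-error task loss plus a compression penalty on pair probabilities.

    pair_probs: hypothetical learned probabilities that each pair is kept as
    interacting. The KL term pulls every probability toward `prior`, so only
    pairs that help the prediction can afford to stay near 1.
    """
    task = (pred - target) ** 2
    compression = sum(bernoulli_kl(p, prior) for p in pair_probs) / len(pair_probs)
    return task + beta * compression


# Toy predicted and reference values plus three pair probabilities.
loss = gib_style_loss(pred=-5.2, target=-5.0, pair_probs=[0.9, 0.2, 0.1])
print(round(loss, 4))
```

Raising `beta` trades prediction accuracy for sparser, more selective interaction maps, which is the knob an information-bottleneck term exposes.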

The predictive prowess of MMHNN has been validated through experiments on multiple benchmark solute-solvent datasets. The results showed not only superior prediction accuracy but also substantial gains in computational efficiency over existing methods such as MMGNN and other graph-based neural networks. This is particularly notable because, unlike models bogged down by the rapidly growing number of atom pairs, MMHNN maintains a streamlined yet expressive representation suited to high-throughput prediction.

Crucially, MMHNN’s architecture also lends itself to enhanced interpretability. Researchers can visualize and dissect the contributions of distinct molecular subgraphs and their interconnections, unveiling the underlying physicochemical forces governing solvation Gibbs free energy (ΔG_solv). This clarity addresses a long-standing challenge in molecular machine learning—balancing model complexity and transparency—offering new pathways to rational molecular design grounded in mechanistic understanding.
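Once a model produces interaction weights between solute and solvent subgraphs, a first interpretive pass can be as simple as ranking those weights. The sketch below assumes a supernode-level weight matrix is available and lists the strongest pairs; the group labels and weights are made-up toy inputs, and `top_interactions` is a hypothetical helper rather than part of any MMHNN release.

```python
def top_interactions(weights, solute_groups, solvent_groups, k=3):
    """Return the k largest (weight, solute group, solvent group) triples."""
    pairs = [
        (weights[i][j], solute_groups[i], solvent_groups[j])
        for i in range(len(solute_groups))
        for j in range(len(solvent_groups))
    ]
    # Sort all supernode pairs by weight, strongest first.
    pairs.sort(key=lambda t: t[0], reverse=True)
    return pairs[:k]


# Toy inputs: two solute supernodes, two solvent supernodes.
solute_groups = ["hydroxyl", "phenyl ring"]
solvent_groups = ["solvent O-H", "solvent alkyl chain"]
weights = [[0.7, 0.1], [0.05, 0.15]]
for w, s, v in top_interactions(weights, solute_groups, solvent_groups, k=2):
    print(f"{s} <-> {v}: {w:.2f}")
```

Working at the supernode level keeps such a ranking short enough to read, whereas an atom-level matrix for the same pair of molecules would contain many more entries.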

Beyond predictive performance, MMHNN generalizes robustly across diverse solvent environments and solute scaffolds. Analyses show that the model maintains high accuracy under distribution shifts, a critical prerequisite for practical deployment in drug discovery and materials research, where chemical space is vast and often underexplored. Closer investigation also shows that prediction errors tend to increase with molecular size and for certain element types, pointing to opportunities for refinement and domain-specific tuning.

A particularly illuminating finding concerns the impact of distributional differences between training and test datasets. Variations in solute scaffolds, for instance, show up as fluctuations in performance, indicating that generalization depends on how well the training set represents the molecules encountered at test time. These insights point to dataset curation and augmentation strategies that could further strengthen MMHNN’s robustness in real-world applications.

From a computational perspective, MMHNN’s hypergraph-based fusion framework epitomizes the synergy between algorithmic innovation and practical necessity. By abstracting molecular substructures into supernodes and avoiding exhaustive pairwise atom connections, the model slashes memory consumption and speeds up processing times. These practical benefits translate into unprecedented scalability, enabling simulations and predictions once deemed computationally prohibitive.
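A back-of-the-envelope count shows where the savings come from. The sketch assumes that every solute unit is linked to every solvent unit at both levels and that each supernode groups about four atoms; neither assumption comes from the article, which does not report MMHNN's grouping sizes or connectivity. `cross_pair_counts` is a hypothetical helper.

```python
import math


def cross_pair_counts(n_solute, n_solvent, atoms_per_group=4):
    """Count cross-molecule links for atom-level vs. supernode-level graphs.

    atoms_per_group is an illustrative assumption, not an MMHNN setting.
    """
    atom_pairs = n_solute * n_solvent
    group_pairs = math.ceil(n_solute / atoms_per_group) * math.ceil(n_solvent / atoms_per_group)
    return atom_pairs, group_pairs


for n in (10, 20, 40, 80):
    atoms, groups = cross_pair_counts(n, n)
    print(f"{n:>3} atoms each: {atoms:>5} atom pairs vs {groups:>4} supernode pairs")
```

Under these assumptions the supernode count still grows with molecular size, but the number of links shrinks by roughly `atoms_per_group` squared (about 16 here), which illustrates why grouping atoms reduces memory use and processing time.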

The combination of interpretability, scalability, and predictive accuracy seen in MMHNN positions it as a trailblazer in molecular machine learning. By integrating structural hierarchy, information theory, and graph neural network advances, MMHNN is poised to catalyze breakthroughs in numerous fields, from drug solubility prediction to the elucidation of solvent effects and beyond.

This pioneering study represents a vital step toward unraveling molecular interactions at scale, pairing technical sophistication with domain-specific needs. As we continue to grapple with the complexity of molecular systems, innovations like MMHNN point to the future of predictive chemistry, where deep learning models not only forecast outcomes but illuminate the molecular stories behind them.

In summary, the Molecular Merged Hypergraph Neural Network delivers a compelling new paradigm for solvation free energy prediction, amalgamating computational efficiency with interpretability and robustness. Its hypergraph-centric view, augmented by Graph Information Bottleneck theory, underscores a shift in molecular modeling that values both accuracy and understanding. As research trends increasingly prioritize explainability alongside performance, MMHNN exemplifies the transformative potential of hybrid methodologies that strategically refine data representation to unlock deeper insights into molecular interactions.

Given the accelerating role of machine learning in chemistry and the life sciences, MMHNN’s successful demonstration heralds a future in which AI-driven molecular modeling adapts to complexity without sacrificing clarity. The model’s design philosophy, validated through extensive benchmarking and interpretive analysis, sets a new standard and invites further exploration, optimization, and adoption across scientific domains.

Subject of Research: Not applicable

Article Title: Molecular Merged Hypergraph Neural Network for Explainable Solvation Gibbs Free Energy Prediction

News Publication Date: 15-Aug-2025

Web References: http://dx.doi.org/10.34133/research.0740

Image Credits: Copyright © 2025 Wenjie Du et al.

Keywords

Molecular interactions, solvation free energy, hypergraph neural network, MMHNN, Graph Information Bottleneck, solute-solvent modeling, molecular machine learning, interpretability, computational chemistry, molecular graphs, machine learning scalability, explainable AI

Tags: Advances in materials science and drug development, Atom-to-atom interaction modeling, Attention mechanisms in neural networks, Computational methods in chemistry, Drug design and molecular interactions, Explainable AI in molecular science, Feature fusion in molecular graphs, Hypergraph neural networks in chemistry, Machine learning for molecular predictions, Merged molecular graph models, Molecular interactions in chemistry, Solvation Gibbs free energy predictions