Credit: Nicola Marzari

Over the past 20 years, first-principles simulations have become powerful and widely used tools in many diverse fields of science and engineering. From nanotechnology to planetary science, and from metallurgy to quantum materials, they have greatly accelerated the identification, characterization, and optimization of materials. They have also led to astonishing predictions, from ultrafast thermal transport to electron-phonon-mediated superconductivity in hydrides to the emergence of flat bands in twisted-bilayer graphene, that have gone on to inspire remarkable experiments.

Several trends make it clear that these methods will become ever more relevant. These include the push to complement experiments with simulations, the continued rapid growth in computing capacity, the ability of machine learning and artificial intelligence to accelerate materials discovery, and the promise of disruptive accelerators such as quantum computers for exponentially expensive tasks. It is therefore an appropriate time to review both the capabilities and the limitations of the electronic-structure methods underlying these simulations. Marzari, Ferretti and Wolverton address this task in the paper “Electronic-structure methods for materials design,” just published in Nature Materials.

“Simulations do not fail in spectacular ways but can subtly shift from being invaluable to barely good enough to just useless,” the authors said in the paper. “The reasons for failure are manifold, from stretching the capabilities of the methods to forsaking the complexity of real materials. But simulations are also irreplaceable: they can assess materials at conditions of pressure and temperature so extreme that no experiment on earth is able to replicate, they can explore with ever-increasing nimbleness the vast space of materials phases and compositions in the search for that elusive materials breakthrough, and they can directly identify the microscopic causes and origin of a macroscopic property. Last, they share with all branches of computational science a key element of research: they can be made reproducible and open and shareable in ways that no physical infrastructure will ever be.”

The authors first look at the framework of density-functional theory (DFT) and give an overview of the increasingly complex approaches that can improve accuracy or extend the scope of simulations. They then discuss the capabilities that computational materials science has developed to exploit this toolbox and deliver predictions for the properties of materials under realistic conditions of ever-increasing complexity. Finally, they highlight how physics- or data-driven approaches can provide rational, high-throughput, or artificial-intelligence avenues to materials discovery, and explain how such efforts are changing the entire research ecosystem.

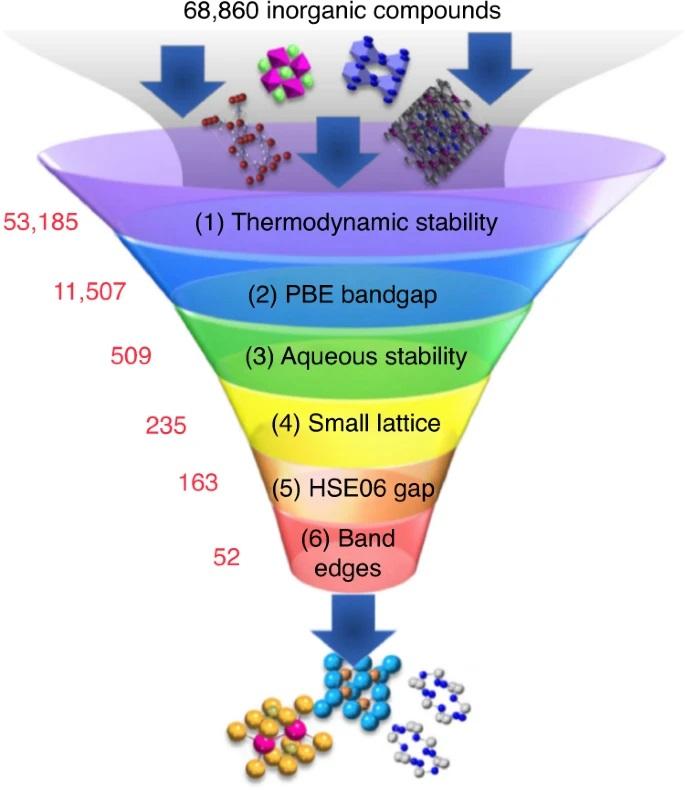

Looking ahead, the authors say that a significant challenge will be developing methods that can assess the thermodynamic stability, synthesis conditions, and manufacturability of novel materials, as well as how tolerant their predicted properties are to intrinsic and extrinsic defects. Researchers may need to augment DFT estimates with more advanced electronic-structure methods or machine-learning algorithms to improve accuracy, and to use computational methods that account for realistic conditions, including vibrational entropies, defect concentrations, and applied electrochemical potentials.

Finally, given the expanding role that such methods are likely to play in the coming decades, the authors note that support and planning for the computational infrastructure they require are only just beginning to emerge. This infrastructure includes widely used scientific software, the verification of codes and validation of theories, the dissemination and curation of computational data, tools and workflows, and the career models that all of these entail and require.

Media Contact

Carey Sargent
