Artificial mechanism of “attention” through deep learning

Credit: Nobuhiko Wagatsuma

This discovery was made possible by applying to artificial neural networks a research method originally designed for comparing brain activity between monkeys and humans. The finding may help not only in understanding the cortical mechanism of attentional selection but also in developing artificial intelligence.

Deep neural networks (DNNs), which are used in the development of artificial intelligence, are mathematical models that acquire mechanisms suited to solving specific problems through training on large-scale datasets. However, the detailed mechanisms that DNNs acquire through this learning process have not yet been clarified.

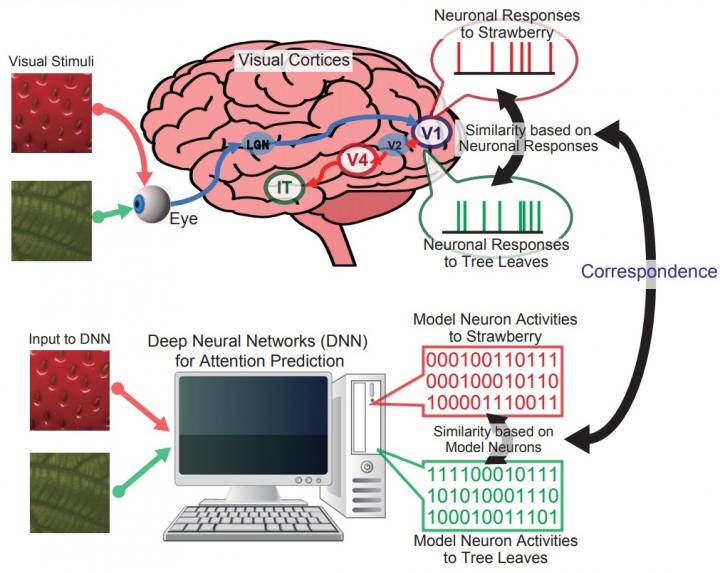

A research group led by Nobuhiko Wagatsuma (Lecturer, Faculty of Science, Toho University), Akinori Hidaka (Associate Professor, Faculty of Science and Engineering, Tokyo Denki University), and Hiroshi Tamura (Associate Professor, Graduate School of Frontier Biosciences, Osaka University) found that the response characteristics of DNNs that predict attention to the most important location in images were consistent with those of the neural representation in the primary visual cortex (V1) of primates. The discovery was made possible by applying to DNNs an analysis method designed for comparing the characteristics of neuronal activity in monkeys with those in humans.

The results of this study provide important insight into the neural mechanism of attention. Additionally, applying the attentional mechanism of primates, including humans, may accelerate the development of artificial intelligence.

Key Points:

- The correspondence between primate visual cortices and deep neural networks was revealed by applying to artificial neural networks a research method for comparing neural activity between different species, such as humans and monkeys.

- Deep neural networks have recently become the main method for developing artificial intelligence. Wagatsuma et al. reported similar properties between deep neural networks for attention prediction and the primary visual cortex (V1) of primates. Their findings also imply that the mechanism of deep neural networks for attention prediction might be distinct from that of networks for object classification, such as VGG16.

- Attention, a function that enables us to attend to the most important information at a given moment, is the most critical keyword in the recent development of artificial intelligence. The results of this research may contribute not only to understanding the neural mechanisms of attentional selection in primates, including humans, but also to developing artificial intelligence.

###

These results were published on November 24, 2020, in eNeuro, an open-access journal of the Society for Neuroscience (SfN), which is headquartered in the United States.

Media Contact

Nobuhiko Wagatsuma

[email protected]

Related Journal Article

http://dx.