Credit: Caltech

In his quest to bring ever-faster cameras to the world, Caltech’s Lihong Wang has developed technology that can reach blistering speeds of 70 trillion frames per second, fast enough to see light travel. Just like the camera in your cell phone, though, it can only produce flat images.

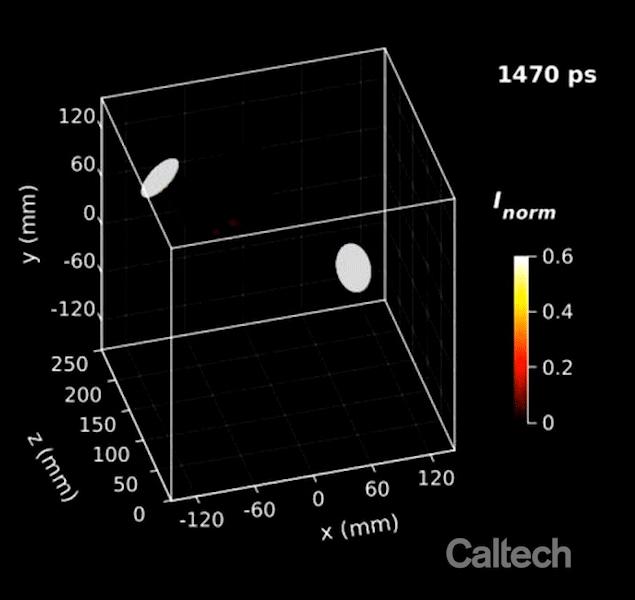

Now, Wang’s lab has gone a step further to create a camera that not only records video at incredibly fast speeds but does so in three dimensions. Wang, Bren Professor of Medical Engineering and Electrical Engineering in the Andrew and Peggy Cherng Department of Medical Engineering, describes the device in a new paper in the journal Nature Communications.

The new camera, which uses the same underlying technology as Wang’s other compressed ultrafast photography (CUP) cameras, is capable of taking up to 100 billion frames per second. That is fast enough to take 10 billion pictures, more images than there are people on Earth, in the blink of an eye.
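The figures above follow from simple arithmetic. The short sketch below assumes that a quick blink lasts about a tenth of a second (blink durations vary, so this is an assumption rather than a measured value) and also works out the time between consecutive frames and how far light travels in that time. The function names are illustrative only.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the metre


def frames_in_interval(frames_per_second: float, seconds: float) -> int:
    """Frames recorded during an interval, rounded to the nearest whole frame."""
    return round(frames_per_second * seconds)


def frame_interval_s(frames_per_second: float) -> float:
    """Time between consecutive frames, in seconds."""
    return 1.0 / frames_per_second


def light_travel_per_frame_m(frames_per_second: float) -> float:
    """Distance light covers in a vacuum between two consecutive frames."""
    return SPEED_OF_LIGHT_M_PER_S * frame_interval_s(frames_per_second)


SP_CUP_FPS = 100e9  # 100 billion frames per second, as stated in the article
BLINK_S = 0.1       # assumption: a quick blink takes roughly a tenth of a second

print(f"frames per blink: {frames_in_interval(SP_CUP_FPS, BLINK_S):,}")
print(f"time per frame:   {frame_interval_s(SP_CUP_FPS) * 1e12:.0f} ps")
print(f"light per frame:  {light_travel_per_frame_m(SP_CUP_FPS) * 1e3:.2f} mm")
```

Under that assumption the camera records ten billion frames per blink, one frame every 10 picoseconds, and light moves only about 3 millimeters between frames.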

Wang calls the new iteration “single-shot stereo-polarimetric compressed ultrafast photography,” or SP-CUP.

In CUP technology, all of the frames of a video are captured in a single exposure, without the event having to be repeated. This is what makes a CUP camera so fast; by comparison, a good cell-phone camera takes about 60 frames per second. Wang added a third dimension to this ultrafast imagery by making the camera “see” more like humans do.
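Published CUP systems achieve single-shot capture by encoding the scene with a pseudorandom binary pattern, shearing it in time with a streak camera so that each instant lands on a different row of the detector, and integrating everything on a 2-D sensor in one exposure. The toy sketch below models only that forward step at the pixel level. The function name `cup_forward`, the one-row-per-frame shear, and the tiny example scene are hypothetical simplifications, and the hard part, recovering the individual frames from the snapshot with an iterative compressed-sensing solver, is not shown.

```python
from typing import List

Frame = List[List[float]]


def cup_forward(frames: List[Frame], mask: List[List[int]]) -> Frame:
    """Toy CUP forward model (hypothetical): encode, shear, integrate.

    frames: T frames, each H x W, ordered in time.
    mask:   H x W binary pattern applied identically to every frame.
    Returns one (H + T - 1) x W snapshot, i.e. a single "exposure".
    """
    n_frames = len(frames)
    height, width = len(mask), len(mask[0])
    # The sensor is taller than one frame so that every sheared frame fits.
    snapshot = [[0.0] * width for _ in range(height + n_frames - 1)]
    for t, frame in enumerate(frames):
        for y in range(height):
            for x in range(width):
                # Spatial encoding by the mask, a temporal shear of t rows,
                # then accumulation on the sensor.
                snapshot[y + t][x] += mask[y][x] * frame[y][x]
    return snapshot


if __name__ == "__main__":
    # A single bright pixel moving around a 2 x 2 scene over three time steps.
    scene = [
        [[1, 0], [0, 0]],
        [[0, 1], [0, 0]],
        [[0, 0], [1, 0]],
    ]
    mask = [[1, 0], [1, 1]]  # blocks the top-right pixel
    for row in cup_forward(scene, mask):
        print(row)
```

Because each frame is shifted before it is summed, events at different times land on different sensor rows; together with the known mask, that structure is what gives a reconstruction algorithm a chance to separate the frames again.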

When a person looks at the world around them, they perceive that some objects are closer to them, and some objects are farther away. Such depth perception is possible because of our two eyes, each of which observes objects and their surroundings from a slightly different angle. The information from these two images is combined by the brain into a single 3-D image.

The SP-CUP camera works in essentially the same way, Wang says.

“The camera is stereo now,” he says. “We have one lens, but it functions as two halves that provide two views with an offset. Two channels mimic our eyes.”

Just as our brain does with the signals it receives from our eyes, the computer that runs the SP-CUP camera processes data from these two channels into one three-dimensional movie.
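Both our eyes and SP-CUP’s two channels rely on the same geometric idea: a nearby object shifts more between two offset views than a distant one. The sketch below illustrates this with the textbook rectified pinhole-stereo relation `Z = f * B / d` (depth equals focal length times baseline divided by disparity) and a very simple 1-D matching step. It is a generic illustration, not SP-CUP’s actual reconstruction method, and the function names, focal length, baseline, and pixel values are all hypothetical.

```python
from typing import Sequence


def find_disparity(left: Sequence[float], right: Sequence[float], max_shift: int) -> int:
    """Return the shift d (pixels) for which right[i] best matches left[i + d].

    Cost is the mean absolute difference over the overlapping pixels, a very
    simple matching criterion chosen for illustration.
    """
    best_shift, best_cost = 0, float("inf")
    for d in range(max_shift + 1):
        overlap = min(len(left) - d, len(right))
        if overlap <= 0:
            break
        cost = sum(abs(left[i + d] - right[i]) for i in range(overlap)) / overlap
        if cost < best_cost:
            best_shift, best_cost = d, cost
    return best_shift


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Rectified pinhole stereo: depth Z = f * B / d (same length unit as B)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means infinitely far)")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # One image row from each view; the bright feature sits 2 pixels further
    # left in the right-hand view.
    left_row = [0, 0, 0, 5, 9, 5, 0, 0, 0, 0]
    right_row = [0, 5, 9, 5, 0, 0, 0, 0, 0, 0]
    d = find_disparity(left_row, right_row, max_shift=4)
    # Hypothetical optics: 800-pixel focal length, 1 cm baseline.
    print(d, depth_from_disparity(800.0, 0.01, d))
```

For these made-up rows the sketch finds a 2-pixel disparity and, with the hypothetical optics, a depth of 4 meters. A larger disparity would mean a closer object, which is the cue the brain exploits as well.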

SP-CUP also features another innovation that no human possesses: the ability to see the polarization of light waves.

The polarization of light refers to the direction in which light waves vibrate as they travel. Consider a guitar string. If the string is pulled upward (say, by a finger) and then released, it will vibrate vertically. If the finger plucks it sideways, the string will vibrate horizontally. Ordinary, unpolarized light is made up of waves vibrating in all directions. In polarized light, by contrast, the waves all vibrate in the same direction. Light can become polarized naturally, such as when it reflects off a surface, or artificially, as happens when it passes through a polarizing filter.
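Because polarization cannot be seen directly, instruments typically infer it from intensities measured through polarizing filters set at several angles. The sketch below illustrates that generic idea with Malus’s law and the linear Stokes parameters computed from analyzer readings at 0°, 45°, 90°, and 135°. It is a textbook illustration with hypothetical function names, not a description of how SP-CUP itself records polarization.

```python
import math
from typing import Tuple


def malus(intensity_in: float, angle_rad: float) -> float:
    """Malus's law: intensity passed by an ideal linear polarizer whose axis
    is at angle_rad to the light's (linear) polarization direction."""
    return intensity_in * math.cos(angle_rad) ** 2


def linear_polarization(i0: float, i45: float, i90: float, i135: float) -> Tuple[float, float]:
    """Degree (0..1) and angle (radians) of linear polarization from four
    analyzer readings taken at 0, 45, 90 and 135 degrees."""
    s0 = i0 + i90    # total intensity
    s1 = i0 - i90    # horizontal vs. vertical preference
    s2 = i45 - i135  # diagonal vs. anti-diagonal preference
    if s0 <= 0:
        raise ValueError("total intensity must be positive")
    degree = math.hypot(s1, s2) / s0
    angle = 0.5 * math.atan2(s2, s1)
    return degree, angle


if __name__ == "__main__":
    analyzer_angles_deg = (0, 45, 90, 135)
    # Fully polarized light vibrating at 30 degrees, like a string plucked at a slant.
    readings = [malus(1.0, math.radians(a - 30)) for a in analyzer_angles_deg]
    dop, aop = linear_polarization(*readings)
    print(f"polarized:   degree={dop:.3f}, angle={math.degrees(aop):.1f} deg")
    # Unpolarized light: every analyzer passes half the intensity.
    dop, _ = linear_polarization(0.5, 0.5, 0.5, 0.5)
    print(f"unpolarized: degree={dop:.3f}")
```

The polarized case reports a degree of linear polarization of 1 at an angle of 30°, while the unpolarized case reports a degree of 0, matching the guitar-string picture of one fixed vibration direction versus all directions at once.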

Though our eyes cannot detect the polarization of light directly, the phenomenon is exploited in a wide range of technologies, from LCD screens, polarized sunglasses, and camera lenses to devices that reveal hidden stress in materials or probe the three-dimensional configurations of molecules.

Wang says that the SP-CUP’s combination of high-speed three-dimensional imagery and the use of polarization information makes it a powerful tool that may be applicable to a wide variety of scientific problems. In particular, he hopes that it will help researchers better understand the physics of sonoluminescence, a phenomenon in which sound waves create tiny bubbles in water or other liquids. As the bubbles rapidly collapse after their formation, they emit a burst of light.

“Some people consider this one of the greatest mysteries in physics,” he says. “When a bubble collapses, its interior reaches such a high temperature that it generates light. The process that makes this happen is very mysterious because it all happens so fast, and we’re wondering if our camera can help us figure it out.”

###

The paper describing the work, titled “Single-shot stereo-polarimetric compressed ultrafast photography for light-speed observation of high-dimensional optical transients with picosecond resolution,” appears in the October 16 issue of Nature Communications. Co-authors are Jinyang Liang, formerly of Caltech and now at the Institut national de la recherche scientifique in Quebec; Peng Wang, a postdoctoral scholar in medical engineering; and Liren Zhu, a former graduate student in the Wang lab.

Funding for the research was provided by the National Institutes of Health.

Media Contact

Emily Velasco
