Credit: Virginia Tech

Two Virginia Tech professors are part of a team focused on developing new technology for the virtual world: a full-body experience in a fully electronic environment.

The project is funded by a $1.5 million grant from the National Science Foundation. Virginia Tech contributors are Professor Alex Leonessa from the Department of Mechanical Engineering and Associate Professor Divya Srinivasan from the Grado Department of Industrial and Systems Engineering. They are joining forces with Eric Jing Du, a professor at the University of Florida, and industry partner HaptX.

Virtual reality has been a pie-in-the-sky technology for decades. Movies and television shows have fueled the imagination and inspired commercial products, but the expectations created by fantasy have yet to be fully realized. While science fiction often portrays VR as fully immersive, as with Star Trek’s holodeck or the world of the Matrix, real-world VR has remained a heavily visual experience.

Gloves expanded the virtual reality world by letting users interact within virtual environments, an approach adapted for video games in products such as the Nintendo Power Glove and the cyber glove. NASA adopted the approach as well, building its Virtual Interface Environment Workstation in 1990 so that an operator could physically move objects from a remote location. In each of these systems, users watched their virtual hands perform virtual tasks in a virtual environment, but the feedback they received remained purely visual.

In recent years, products such as HaptX Gloves have added another dimension to the experience: a virtual environment that returns a sense of touch to the user’s hand. Programmed microfluidic pockets within the gloves respond to actions in the virtual environment, creating a tactile response in addition to a visual one. Users can not only see the virtual world but also feel weight, shape, and texture across their hands and fingertips.

The next step

Moving around a virtual environment presents its own set of challenges. Video games typically handle movement with joysticks or keypads, so a user essentially walks in virtual reality by pressing buttons with their fingers. Other input devices, such as pads and shoe-like wearables, require some form of walking in place to register motion.

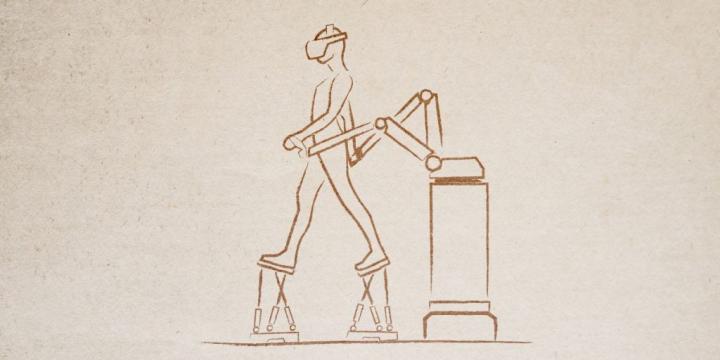

Virginia Tech is working with the University of Florida and HaptX to bring together motion, touch response, visual interaction, and more. The hoped-for result is a full-body virtual reality rig that lets users see the environment through a headset, feel touch through the HaptX gloves, and both walk and interact using an exoskeleton.

The project is called ForceBot, drawing on the term “force feedback,” the general classification for controls that apply forces to the user to convey interactions with objects in the virtual environment. Responsive robotics at the user’s feet would allow walking, simulate changes in terrain, and move the user through the virtual world. Additional robotics in a hand-and-arm rig would provide resistance when pushing and pulling simulated solid objects, while the HaptX gloves complete the experience with touch response. The headset would let the user see the virtual environment unfold before them.

“We are excited to have the opportunity to develop a fully immersive virtual reality experience, providing realistic full-body interactions,” said Leonessa. “Virtual reality is a familiar technology, but the untapped potential it holds is vast.”

###

Media Contact

Suzanne Irby
