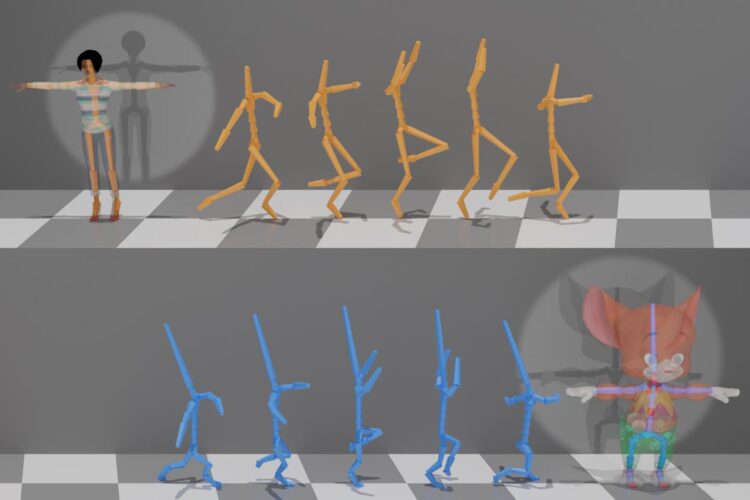

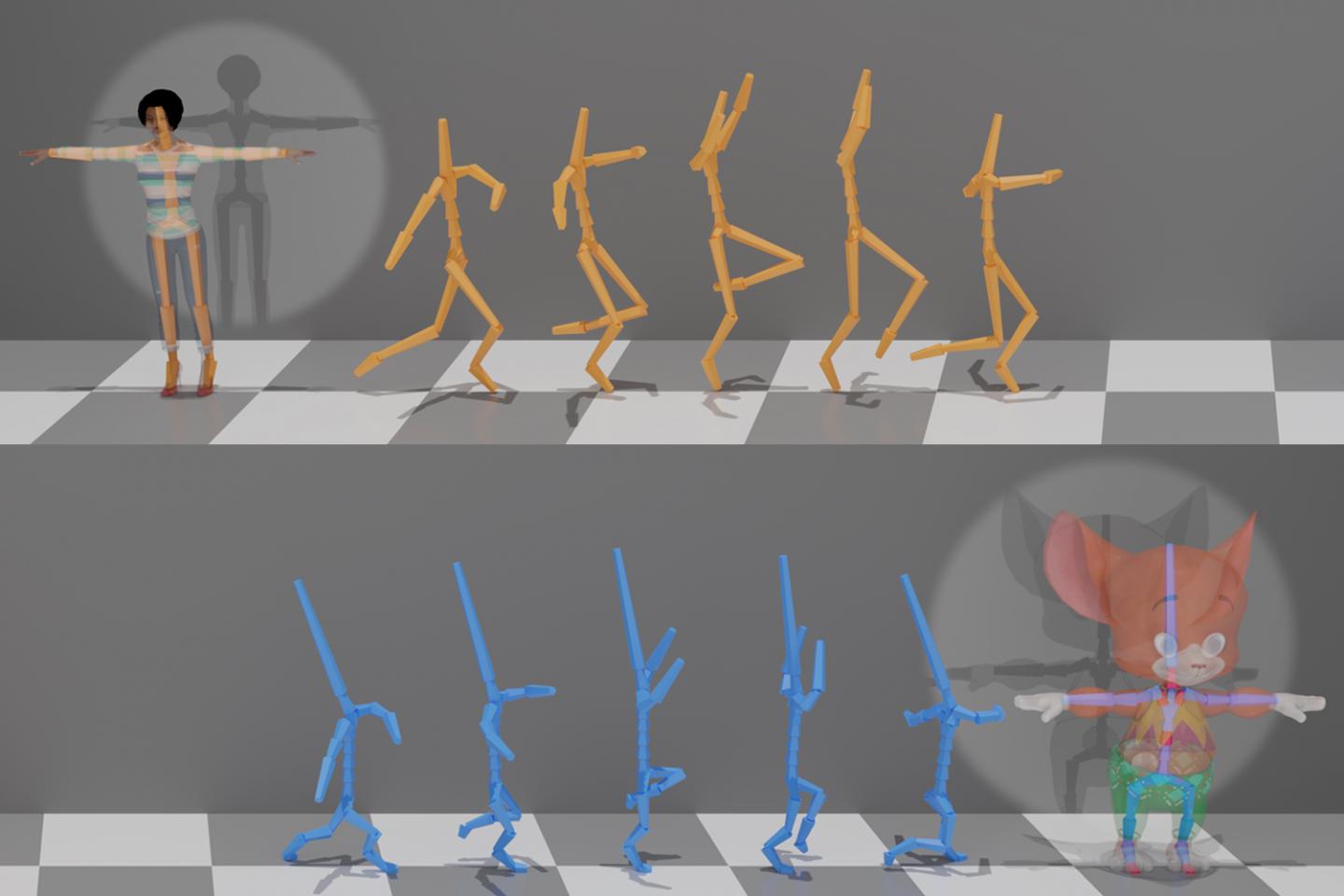

Credit: Kfir Aberman, Peizhuo Li, Dani Lischinski, Olga Sorkine-Hornung, Daniel Cohen-Or, Baoquan Chen

Every human body is unique, and the way in which a person’s body naturally moves depends on myriad factors, including height, weight, size, and overall shape. A global team of computer scientists has developed a novel deep-learning framework that automates the precise translation of human motion, specifically accounting for the wide array of skeletal structures and joints.

The end result? A seamless, much more flexible and universal framework for replicating human motion in the virtual world.

The team of researchers hail from AICFVE, the Beijing Film Academy, ETH Zurich, Hebrew University of Jerusalem, Peking University, and Tel Aviv University, and plan to demonstrate their work during SIGGRAPH 2020. The conference, which will take place virtually this year starting 17 August, gathers a network of leading professionals who approach computer graphics and interactive techniques from different perspectives. SIGGRAPH continues to serve as the industry’s premier venue for showcasing forward-thinking ideas and research. Registration for the virtual conference is now available.

Capturing the motion of humans remains a burgeoning and exciting field in computer animation and human-computer interaction. Motion capture (mocap) technology, particularly in filmmaking and visual effects, has made it possible to bring animated characters or digital actors to life. Mocap systems usually require the performer or actor to wear a set of markers or sensors that computationally capture their motions and 3D-skeleton poses. What remains a challenge in mocap is the ability to precisely transfer motion, also known as “motion retargeting,” between human skeletons, where the skeletons might differ in their structure depending on the number of bones and joints involved.

To date, mocap systems have not been successful in retargeting skeletons with different structures in a fully automated way. Errors are typically introduced in positions where joint correspondence cannot be specified. The team set out to address this specific problem and demonstrate that the framework can accurately replicate motion retargeting without specifying explicit pairing between the varying data sets.

“Our development is essential for using data from multiple mocap datasets that are captured with different systems within a single model,” Kfir Aberman, a senior author of the work and a researcher from AICFVE at the Beijing Film Academy, shared. “This enables the training of stronger, data-driven models that are setup-agnostic for various motion processing tasks.”

The team’s new motion processing framework contains special operators uniquely designed for motion data. The framework is general and can be used for various motion processing tasks. In particular, the researchers exploit its special properties to solve a practical problem in the mocap world, which makes their novel method widely applicable.

“I am particularly excited about the ability of our approach to encode motion into an abstract, skeleton-agnostic latent space,” Dani Lischinski, a coauthor of the work and professor at the School of Computer Science and Engineering at the Hebrew University of Jerusalem, said. “A fascinating direction for future work would be to enable motion transfer between fundamentally different characters, such as bipeds and quadrupeds.”

In addition to Aberman and Lischinski, the collaborators on “Skeleton-aware Networks for Deep Motion Retargeting” include Peizhuo Li, Olga Sorkine-Hornung, Daniel Cohen-Or, and Baoquan Chen. The team’s paper and video can be found here and here.

###

Media Contact

Emily Drake

[email protected]

Original Source

https:/