A paralysed woman who controlled a robotic arm using just her thoughts has taken another step towards restoring her natural movements by controlling the arm with a range of complex hand movements.

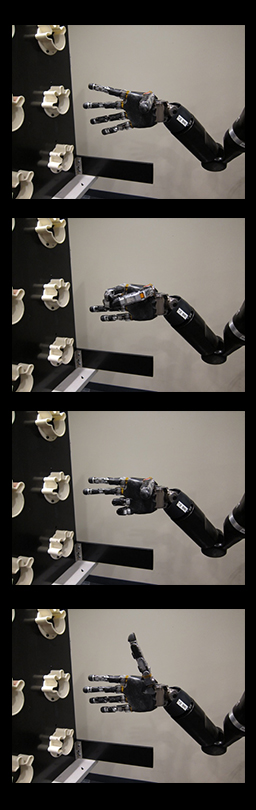

Video Credit: Institute of Physics

Thanks to researchers at the University of Pittsburgh, Jan Scheuermann, who has longstanding quadriplegia and has been taking part in the study for over two years, has gone from giving “high fives” to the “thumbs-up” after increasing the manoeuvrability of the robotic arm from seven dimensions (7D) to 10 dimensions (10D).

The extra dimensions come from four hand movements—finger abduction, a scoop, thumb extension and a pinch—and have enabled Jan to pick up, grasp and move a range of objects much more precisely than with the previous 7D control.

It is hoped that these latest results, which have been published today, 17 December, in IOP Publishing’s Journal of Neural Engineering, can build on previous demonstrations and eventually allow robotic arms to restore natural arm and hand movements in people with upper limb paralysis.

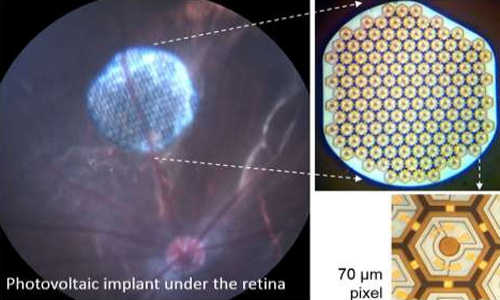

Jan Scheuermann, 55, from Pittsburgh, PA, has been paralysed from the neck down since 2003 due to a neurodegenerative condition. After her eligibility for a research study was confirmed in 2012, Jan underwent surgery to implant two quarter-inch electrode grids, each with 96 tiny contact points, in the regions of her brain responsible for right arm and hand movements.

After the electrode grids in Jan’s brain were connected to a computer, creating a brain-machine interface (BMI), the 96 contact points on each grid picked up pulses of electricity fired between the neurons in Jan’s brain.

Computer algorithms were used to decode these firing signals and identify the patterns associated with a particular arm movement, such as raising the arm or turning the wrist.
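The article does not say which decoding algorithm the researchers used. As a rough illustration of the general idea only, the sketch below uses a simple population-vector approach on synthetic data: each simulated unit fires more strongly when the intended movement matches its "preferred direction", and the decoder sums those preferred directions weighted by how far each unit's rate is above or below baseline. All names, the baseline and depth values, and the 20 simulated units are assumptions made for this example, not details from the study.

```python
import math


def axis_units(n_dims):
    """Hypothetical units: one pair per axis, preferring +axis and -axis."""
    units = []
    for k in range(n_dims):
        for sign in (1.0, -1.0):
            pd = [0.0] * n_dims
            pd[k] = sign
            units.append(pd)
    return units


def simulate_rates(intent, preferred, baseline=10.0, depth=5.0):
    """Synthetic firing rates: baseline plus a term proportional to how
    well the intended movement matches each unit's preferred direction."""
    return [
        baseline + depth * sum(p * x for p, x in zip(pd, intent))
        for pd in preferred
    ]


def decode(rates, preferred, baseline=10.0, depth=5.0):
    """Population-vector estimate of the intended movement direction."""
    n_dims = len(preferred[0])
    vec = [0.0] * n_dims
    for rate, pd in zip(rates, preferred):
        weight = (rate - baseline) / depth  # normalised rate change
        for k in range(n_dims):
            vec[k] += weight * pd[k]
    norm = math.sqrt(sum(v * v for v in vec))
    # Return a unit-length direction; leave an all-zero vector untouched.
    return [v / norm for v in vec] if norm > 0 else vec


N_DIMS = 10  # matches the 10D control described in the article
preferred = axis_units(N_DIMS)

# An arbitrary intended movement mixing two of the ten dimensions.
intent = [0.0] * N_DIMS
intent[0] = 0.6
intent[9] = 0.8

rates = simulate_rates(intent, preferred)
decoded = decode(rates, preferred)
```

In this idealised, noise-free setting the decoded direction matches the intended one. Real recordings are noisy and change over time, which is one reason a calibration period is needed.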

By simply thinking of controlling her arm movements, Jan was then able to make the robotic arm reach out to objects, as well as move it in a number of directions and flex and rotate the wrist. This control also enabled Jan to “high five” the researchers and feed herself dark chocolate.

Two years on from the initial results, the researchers at the University of Pittsburgh have now shown that Jan can successfully manoeuvre the robotic arm in 10 dimensions, with the additional control coming from a number of hand movements that allow for more detailed interaction with objects.

The researchers used a virtual reality computer program to calibrate Jan’s control over the robotic arm, and discovered that it is crucial to include virtual objects in this training period in order to allow reliable, real-time interaction with objects.

Controlling the robot arm with her thoughts, the participant shaped the hand into four positions: fingers spread, scoop, pinch and thumb up. Photo credit: Journal of Neural Engineering/IOP Publishing

Co-author of the study Dr Jennifer Collinger said: “10D control allowed Jan to interact with objects in different ways, just as people use their hands to pick up objects depending on their shapes and what they intend to do with them. We hope to repeat this level of control with additional participants and to make the system more robust, so that people who might benefit from it will one day be able to use brain-machine interfaces in daily life.

“We also plan to study whether the incorporation of sensory feedback, such as the touch and feel of an object, can improve neuroprosthetic control.”

Commenting on the latest results, Jan Scheuermann said: “This has been a fantastic, thrilling, wild ride, and I am so glad I’ve done this.”

“This study has enriched my life, given me new friends and co-workers, helped me contribute to research and taken my breath away. For the rest of my life, I will thank God every day for getting to be part of this team.”

Story Source:

The above story is based on materials provided by Institute of Physics.