Breakthroughs in various fields of artificial intelligence (AI), such as image processing, smart healthcare, self-driving vehicles and smart cities, show that this is undoubtedly the golden period of deep learning. In the next decade or so, AI and computing systems will eventually be equipped with the ability to learn and think the way humans do: to process a continuous flow of information and interact with the real world.

Credit: SUTD

However, current AI models lose performance when they are trained consecutively on new information. Each time a model learns from new data, the update is written on top of what it learned before, erasing previous information. This effect is known as ‘catastrophic forgetting’. It stems from the stability-plasticity issue: the AI model needs to update its memory to keep adjusting to new information while, at the same time, keeping its current knowledge stable. This problem prevents state-of-the-art AI from learning continually from real-world information.
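The overwriting effect described above can be reproduced in a few lines. The following is a minimal sketch, not the researchers' code: it assumes a hypothetical two-weight linear model and two made-up single-example tasks (`TASK_A`, `TASK_B`), chosen so that one set of weights could satisfy both. Training on the tasks one after the other still wipes out the first.

```python
# Toy illustration only: a two-weight linear model with invented tasks.
# None of these names or numbers come from the SUTD study.

def predict(w, x):
    return w[0] * x[0] + w[1] * x[1]

def train(w, examples, steps=200, lr=0.1):
    """Plain gradient descent on squared error; returns updated weights."""
    w = list(w)
    for _ in range(steps):
        for x, y in examples:
            err = predict(w, x) - y
            w[0] -= lr * err * x[0]
            w[1] -= lr * err * x[1]
    return w

def sq_error(w, example):
    x, y = example
    return (predict(w, x) - y) ** 2

TASK_A = ((1.0, 0.5), 1.0)    # hypothetical "old" task: (input, target)
TASK_B = ((0.5, 1.0), -1.0)   # hypothetical "new" task: (input, target)

def run_demo():
    # Learn task A first; its error drops to almost zero.
    w = train([0.0, 0.0], [TASK_A])
    error_a_before = sq_error(w, TASK_A)

    # Sequential training: only task B data is seen, so the shared
    # weights are overwritten and task A is forgotten.
    w_seq = train(w, [TASK_B])
    error_a_after = sq_error(w_seq, TASK_A)

    # Interleaved training on both tasks finds weights that fit both,
    # but it requires keeping the old data around.
    w_joint = train([0.0, 0.0], [TASK_A, TASK_B], steps=2000)
    error_a_joint = sq_error(w_joint, TASK_A)

    return error_a_before, error_a_after, error_a_joint

if __name__ == "__main__":
    print(run_demo())
```

In this toy setting the error on task A is close to zero after the first training run, rises to about 2.07 after training on task B alone, and returns to nearly zero when both tasks are trained together. The last case needs the old data to be stored, which is the cost that continual learning methods try to avoid.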

Edge computing systems allow computing to be moved from cloud storage and data centres to locations near the original source of the data, such as devices connected to the Internet of Things (IoT). Although many continual learning models have been proposed, applying continual learning efficiently on resource-limited edge computing systems remains a challenge, because traditional models require high computing power and large memory capacity.

A team of researchers from the Singapore University of Technology and Design (SUTD), including Shao-Xiang Go, Qiang Wang, Bo Wang, Yu Jiang and Natasa Bajalovic, has recently designed a new type of code to realise an energy-efficient continual learning system. The team was led by the principal investigator, Assistant Professor Desmond Loke from SUTD. Their study, ‘Continual Learning Electrical Conduction in Resistive-Switching-Memory Materials’, was published in the journal Advanced Theory and Simulations.

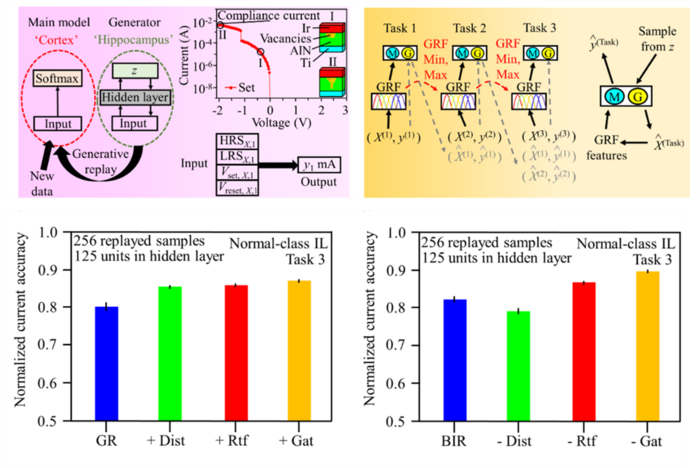

The team proposed Brain-Inspired Replay (BIR), a model inspired by the way the brain naturally performs continual learning. The BIR model, which is based on an artificial neural network and a variational autoencoder, imitates functions of the human brain and can perform well in class-incremental learning situations without storing data. The researchers also used the BIR model to represent conductive filament growth driven by electrical current in digital memory systems.
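The general idea of replaying generated samples instead of stored data can be sketched as follows. This is an illustration under loud assumptions, not the BIR model: a simple Gaussian summary stands in for the variational autoencoder, a two-parameter linear regressor stands in for the neural network, the tasks are invented regression tasks rather than class-incremental ones, and every function name is hypothetical.

```python
import random

# Sketch only: a Gaussian stands in for the variational autoencoder and a
# two-parameter linear model stands in for the neural network.

def predict(params, x):
    w, b = params
    return w * x + b

def train(params, data, steps=2000, lr=0.05):
    """Full-batch gradient descent on mean squared error."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mse(params, data):
    return sum((predict(params, x) - y) ** 2 for x, y in data) / len(data)

def fit_generator(inputs):
    """Summarise old inputs by mean and standard deviation (the 'generator')."""
    mean = sum(inputs) / len(inputs)
    var = sum((x - mean) ** 2 for x in inputs) / len(inputs)
    return mean, var ** 0.5

def run_demo(seed=0, n=50):
    rng = random.Random(seed)
    task_a = [(rng.gauss(-2.0, 0.5), 1.0) for _ in range(n)]   # invented old task
    task_b = [(rng.gauss(2.0, 0.5), -1.0) for _ in range(n)]   # invented new task

    model_a = train((0.0, 0.0), task_a)
    generator = fit_generator([x for x, _ in task_a])
    # From here on, task_a is used only for evaluation, never for training.

    # Baseline: fine-tune on the new task alone.
    naive = train(model_a, task_b)

    # Replay: sample pseudo-inputs from the generator, label them with the
    # old model's own predictions, and mix them with the new data.
    pseudo_x = [rng.gauss(*generator) for _ in range(n)]
    replayed = [(x, predict(model_a, x)) for x in pseudo_x]
    with_replay = train(model_a, task_b + replayed)

    return mse(naive, task_a), mse(with_replay, task_a)

if __name__ == "__main__":
    naive_error, replay_error = run_demo()
    print("old-task error without replay:", naive_error)
    print("old-task error with replay:   ", replay_error)
```

In this toy setting the old-task error should stay far lower with replay than with plain fine-tuning, even though the only things kept from the old task are the trained model and two numbers describing its inputs.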

“In this model, knowledge is preserved within trained models in order to minimise performance loss upon the introduction of additional tasks, without the need to refer to data from previous works,” explained Assistant Professor Loke. “So, this saves us a substantial amount of energy.”

“Moreover, a state-of-the-art accuracy of 89% on challenging compliance to current learning tasks without storing data was achieved, which is about two times higher than that of traditional continual learning models, as well as high energy efficiency,” he added.

To allow the model to process on-the-spot information in the real world independently, the team plans to expand the adjustable capability of the model in the next phase of their research.

“Based on small scale demonstrations, this research is still in its early stages,” said Assistant Professor Loke. “The adoption of this approach is expected to allow edge AI systems to progress independently without human control.”

Journal: Advanced Theory and Simulations

DOI: 10.1002/adts.202200226

Article Title: Continual Learning Electrical Conduction in Resistive-Switching-Memory Materials

Article Publication Date: 2-Jun-2022