In recent years, large language models (LLMs) such as OpenAI’s GPT-4 and Google’s Gemini have made remarkable strides in the field of artificial intelligence (AI), particularly within healthcare. These sophisticated AI systems have demonstrated extraordinary capabilities in understanding and generating human language, enabling their rapid adoption in various medical contexts. From facilitating clinical decision-making to accelerating drug discovery, LLMs are poised to transform healthcare delivery. However, as their deployment expands, so too do the cybersecurity challenges associated with their use, demanding urgent attention from the medical community and cybersecurity experts alike.

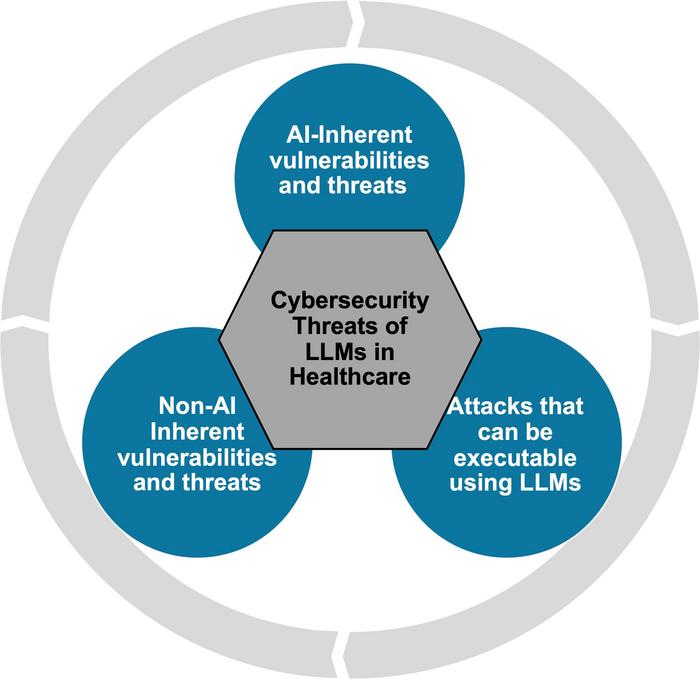

The integration of LLMs in healthcare is a double-edged sword. On one hand, these models offer unprecedented opportunities to improve patient outcomes, streamline workflows, and democratize access to complex medical information. On the other hand, they introduce novel security vulnerabilities that can be exploited by malicious actors. The nature of these vulnerabilities is multifaceted, encompassing both AI-inherent technical risks and broader systemic threats within the healthcare ecosystem. Understanding these layers of cybersecurity concerns is vital to safeguarding patient data and ensuring the integrity of clinical processes.

At the core of AI-inherent challenges are attacks targeting the training and operational behavior of LLMs. Techniques like data poisoning involve the injection of malicious or misleading information into model training datasets, compromising the accuracy and reliability of AI outputs. Inference attacks further expose sensitive patient information by exploiting model outputs to reconstruct confidential data. Additionally, adversaries may circumvent embedded safety mechanisms designed to restrict harmful or unethical model responses, thereby fostering scenarios where AI generates misleading or damaging content.

Beyond the AI technology itself, the environments into which LLMs are deployed carry their own security risks. Healthcare systems traditionally present a complex and often fragmented infrastructure susceptible to breaches and service disruptions. An attacker who manipulates image analysis algorithms in radiology, for example, could alter diagnostic outcomes, directly impacting patient care. Similarly, unauthorized access to databases through compromised LLM interfaces can result in significant data privacy violations, contravening regulations such as HIPAA and GDPR.

The radiology domain exemplifies a specialized field where integrating LLMs requires meticulous cybersecurity oversight. Digital imaging workflows rely heavily on accurate data interpretation, and any compromise in the AI-assisted analysis jeopardizes both diagnosis and treatment planning. Cyberattack vectors in this space can involve tampering with image datasets, injecting unauthorized software, or intercepting communication channels between AI models and healthcare practitioners, amplifying the risk profile substantially.

Effective mitigation strategies demand a comprehensive cybersecurity approach tailored to the intricacies of healthcare AI. Fundamental measures include enforcing robust authentication protocols like multi-factor authentication and maintaining rigorous patch management to address newly discovered vulnerabilities. However, these baseline protections must be extended to encompass secure deployment architectures, featuring strong encryption of data both at rest and in transit, alongside continuous monitoring systems to detect anomalous AI interactions and potential breaches in real time.
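As a rough illustration of what monitoring for anomalous AI interactions could look like in its simplest form, the Python sketch below flags any user whose number of LLM queries within a sliding time window exceeds a threshold. The class name `QueryRateMonitor`, its parameters, and the thresholds are hypothetical choices made for this example and do not come from the article; a production system would draw on far richer signals than request volume alone.

```python
from collections import defaultdict, deque


class QueryRateMonitor:
    """Flags users whose query volume in a sliding window exceeds a limit.

    Hypothetical helper for illustration; assumes timestamps (in seconds)
    arrive in non-decreasing order for each user.
    """

    def __init__(self, max_queries: int, window_seconds: float):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # user_id -> recent timestamps

    def record(self, user_id: str, timestamp: float) -> bool:
        """Record one query; return True if the user is now over the limit."""
        events = self._events[user_id]
        events.append(timestamp)
        # Discard timestamps that have fallen out of the sliding window.
        while events and events[0] <= timestamp - self.window_seconds:
            events.popleft()
        return len(events) > self.max_queries


if __name__ == "__main__":
    monitor = QueryRateMonitor(max_queries=3, window_seconds=60)
    for t in (0, 10, 20, 30):
        flagged = monitor.record("clinician-42", t)
        print(t, "flagged" if flagged else "ok")
```

A flag here would only be a prompt for human review; an unusually high query rate can have legitimate causes, such as a busy clinic session.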

Furthermore, the importance of vetting and institutionally approving AI tools prior to deployment cannot be overstated. Only LLMs that have undergone stringent security evaluations and compliance assessments should be used within hospital systems. Additionally, any patient data processed by LLMs should be anonymized or de-identified to minimize exposure in the event of a compromise. This data hygiene practice reduces the risk associated with potential leaks and fosters compliance with ethical standards.
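To make the idea of de-identifying text before it reaches an LLM more concrete, here is a minimal Python sketch that replaces a few common identifier formats with placeholder labels. The patterns, the labels, and the `redact` function are assumptions made for this example, not a method described in the article. Pattern matching of this kind catches only well-formatted identifiers, so it should be read as a starting point and not as a complete de-identification procedure.

```python
import re

# Hypothetical patterns for illustration only. Real de-identification needs
# much broader coverage (names, addresses, free-text dates, and so on).
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "MRN": re.compile(r"\bMRN[:\s]*\d{6,10}\b", re.IGNORECASE),
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed label such as [MRN]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    note = "Patient MRN: 12345678, seen 03/14/2025, call 555-123-4567."
    print(redact(note))
    # Prints: Patient [MRN], seen [DATE], call [PHONE].
```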

The human factor remains a critical component in the cybersecurity framework for LLM integration. Ongoing education and training programs for healthcare professionals are essential to cultivate awareness and preparedness regarding cyber threats. Analogous to mandatory radiation safety training in radiology, periodic cybersecurity drills and updates ensure that all stakeholders are equipped to recognize and respond to emerging risks. This collective vigilance helps create a resilient operational environment where technology serves as an asset rather than a liability.

Despite these challenges, the outlook on the use of LLMs in healthcare is cautiously optimistic. Researchers and clinicians emphasize that while vulnerabilities exist, they are neither insurmountable nor static. With escalating investments in cybersecurity infrastructure, evolving regulatory frameworks, and growing public and professional awareness, many of these threats can be managed proactively. The ongoing dialogue between AI developers, medical experts, and cybersecurity professionals reflects a collaborative effort aimed at maximizing benefits while curtailing risks.

Patients themselves also play a role in this evolving landscape. Transparency about the capabilities and limits of AI in healthcare, along with clear communication about cybersecurity measures, is critical to building trust. Educating patients on the safeguards implemented within healthcare institutions reassures them that their data privacy is a priority, even as new technologies are adopted. Thus, informed patient engagement becomes a pillar supporting the ethical deployment of LLMs.

From a technical standpoint, the resilience of LLMs arguably depends on the continuous refinement of their architectures and training paradigms. Techniques for robust model training, such as adversarial training, differential privacy, and secure multiparty computation, are being actively explored to harden AI systems against exploitation. The adoption of these methods must be coupled with rigorous auditing and validation procedures to ensure that AI outputs remain reliable and free from malicious manipulation.
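For readers unfamiliar with differential privacy, the Python sketch below shows the idea in its simplest setting: a counting query answered with Laplace noise. This is not differentially private model training, which applies the same principle to gradient updates and is considerably more involved. The function names, the example records, and the choice of epsilon are assumptions made purely for illustration.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng=None) -> float:
    """Return a noisy count of records matching `predicate`.

    Adding or removing one record changes a count by at most 1, so the
    sensitivity is 1 and the Laplace scale is 1 / epsilon. Smaller epsilon
    means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical toy records, not real patient data.
    records = [{"dx": "flu"}, {"dx": "flu"}, {"dx": "cold"}, {"dx": "flu"}]
    noisy = private_count(records, lambda r: r["dx"] == "flu", epsilon=1.0)
    print(f"noisy count of flu diagnoses: {noisy:.2f}")
```

Each call returns a different value centered on the true count, so no single answer reveals with certainty whether any one patient's record was included.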

In conclusion, the cybersecurity threats posed by large language models in healthcare represent a complex convergence of AI technology vulnerabilities and systemic infrastructure risks. As the healthcare sector moves toward greater AI integration, it must prioritize a holistic security strategy encompassing technical safeguards, institutional policies, and workforce education. Only by addressing these challenges head-on can the full transformative potential of LLMs be realized safely and ethically, ultimately advancing patient care in a digitally connected world.

Subject of Research: Not applicable

Article Title: Cybersecurity Threats and Mitigation Strategies for Large Language Models in Healthcare

News Publication Date: 14-May-2025

Web References:

https://pubs.rsna.org/journal/ai

https://www.rsna.org/

Image Credits: Radiological Society of North America (RSNA)

Keywords: Artificial intelligence, Computer science, Medical cybernetics

Tags: AI in healthcare cybersecurity challenges, cybersecurity strategies for healthcare technologies, cybersecurity threats in medical AI systems, data poisoning attacks on AI models, implications of AI in healthcare delivery, large language models cybersecurity risks, LLMs in clinical decision-making, protecting patient data in AI, safeguarding clinical processes with AI, systemic threats in healthcare AI, technical risks of large language models, vulnerabilities of AI in radiology