Particle physicists use lattice quantum chromodynamics and supercomputers to search for physics beyond the Standard Model

Credit: A. Bazavov (Michigan State U.), C. Bernard (Washington U., St. Louis), N. Brown (Washington U., St. Louis), C. DeTar (Utah U.), A.X. El-Khadra (Illinois U., Urbana and Fermilab) et al….

Peer deeper into the heart of the atom than any microscope allows and scientists hypothesize that you will find a rich world of particles popping in and out of the vacuum, decaying into other particles, and adding to the weirdness of the visible world. These subatomic particles are governed by the quantum nature of the Universe and find tangible, physical form in experimental results.

Some subatomic particles were first discovered over a century ago with relatively simple experiments. More recently, however, the endeavor to understand these particles has spawned the largest, most ambitious and complex experiments in the world, including those at particle physics laboratories such as the European Organization for Nuclear Research (CERN) in Europe, Fermilab in Illinois, and the High Energy Accelerator Research Organization (KEK) in Japan.

These experiments have a twofold mission: to expand our understanding of the Universe, which is described most coherently by the Standard Model of particle physics, and to look beyond the Standard Model for as-yet-unknown physics.

“The Standard Model explains so much of what we observe in elementary particle and nuclear physics, but it leaves many questions unanswered,” said Steven Gottlieb, distinguished professor of Physics at Indiana University. “We are trying to unravel the mystery of what lies beyond the Standard Model.”

Ever since the beginning of the study of particle physics, experimental and theoretical approaches have complemented each other in the attempt to understand nature. In the past four to five decades, advanced computing has become an important part of both approaches. Great progress has been made in understanding the behavior of the zoo of subatomic particles, including bosons (especially the long-sought and recently discovered Higgs boson), various flavors of quarks, gluons, muons, neutrinos and many states made from combinations of quarks or antiquarks bound together.

Quantum field theory is the theoretical framework from which the Standard Model of particle physics is constructed. It combines classical field theory, special relativity and quantum mechanics, and was developed with contributions from Einstein, Dirac, Fermi, Feynman, and others. Within the Standard Model, quantum chromodynamics, or QCD, is the theory of the strong interaction between quarks and gluons, the fundamental particles that make up some of the larger composite particles such as the proton, neutron and pion.

PEERING THROUGH THE LATTICE

Carleton DeTar and Steven Gottlieb are two of the leading contemporary scholars of QCD and practitioners of an approach known as lattice QCD. Lattice QCD represents continuous spacetime as a discrete set of points (called the lattice). It uses supercomputers to study the interactions of quarks, and importantly, to determine more precisely several parameters of the Standard Model, thereby reducing the uncertainties in its predictions. It’s a slow and resource-intensive approach, but it has proven to have wide applicability, giving insight into parts of the theory inaccessible by other means, in particular the explicit forces acting between quarks and antiquarks.

DeTar and Gottlieb are part of the MIMD Lattice Computation (MILC) Collaboration and work very closely with the Fermilab Lattice Collaboration on the vast majority of their projects. They also work with the High Precision QCD (HPQCD) Collaboration for the study of the muon anomalous magnetic moment. As part of these efforts, they use the fastest supercomputers in the world.

Since 2019, they have used Frontera at the Texas Advanced Computing Center (TACC), the fastest academic supercomputer in the world and the 9th fastest overall, to propel their work. They are among the largest users of that resource, which is funded by the National Science Foundation. The team also uses Summit at the Oak Ridge National Laboratory (the #2 fastest supercomputer in the world), Cori at the National Energy Research Scientific Computing Center (#20), and Stampede2 at TACC (#25) for the lattice calculations.

The efforts of the lattice QCD community over decades have brought greater accuracy to particle predictions through a combination of faster computers and improved algorithms and methodologies.

“We can do calculations and make predictions with high precision for how strong interactions work,” said DeTar, professor of Physics and Astronomy at the University of Utah. “When I started as a graduate student in the late 1960s, some of our best estimates were within 20 percent of experimental results. Now we can get answers with sub-percent accuracy.”

In particle physics, physical experiment and theory travel in tandem, informing each other, but sometimes producing different results. These differences suggest areas of further exploration or improvement.

“There are some tensions in these tests,” said Gottlieb. “The tensions are not large enough to say that there is a problem here; the usual requirement is at least five standard deviations. But it means either you make the theory and experiment more precise and find that the agreement is better; or you do it and you find out, ‘Wait a minute, what was the three sigma tension is now a five standard deviation tension, and maybe we really have evidence for new physics.'”

DeTar calls these small discrepancies between theory and experiment ‘tantalizing.’ “They might be telling us something.”
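To give a feel for why three standard deviations count as a hint while five count as evidence, the sketch below converts a tension in standard deviations into the chance of a fluctuation at least that large. It assumes purely Gaussian uncertainties and a two-sided test; both are simplifications chosen for illustration and are not stated in the article, and the function name is hypothetical.

```python
import math


def two_sided_p_value(n_sigma: float) -> float:
    """Chance that a Gaussian fluctuation lands at least n_sigma
    standard deviations from the mean, in either direction."""
    return math.erfc(n_sigma / math.sqrt(2.0))


for n_sigma in (3.0, 5.0):
    p = two_sided_p_value(n_sigma)
    print(f"{n_sigma:.0f} sigma: p = {p:.2e} (about 1 in {1.0 / p:,.0f})")
```

Under these assumptions a three-sigma tension corresponds to odds of a few in a thousand, while a five-sigma tension corresponds to odds of less than one in a million. Particle physicists often quote the one-sided value instead, which is half as large.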

Over the last several years, DeTar, Gottlieb and their collaborators have followed the paths of quarks and antiquarks with ever-greater resolution as they move through a background cloud of gluons and virtual quark-antiquark pairs, as prescribed precisely by QCD. The results of the calculation are used to determine physically meaningful quantities such as particle masses and decays.

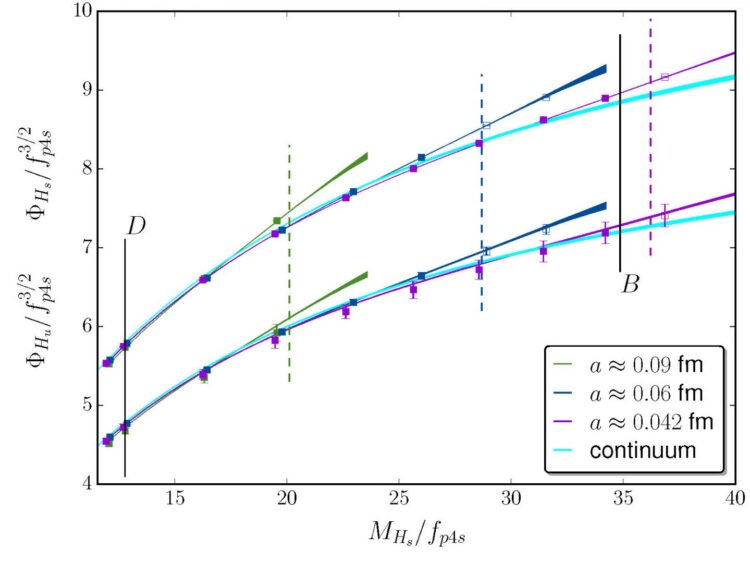

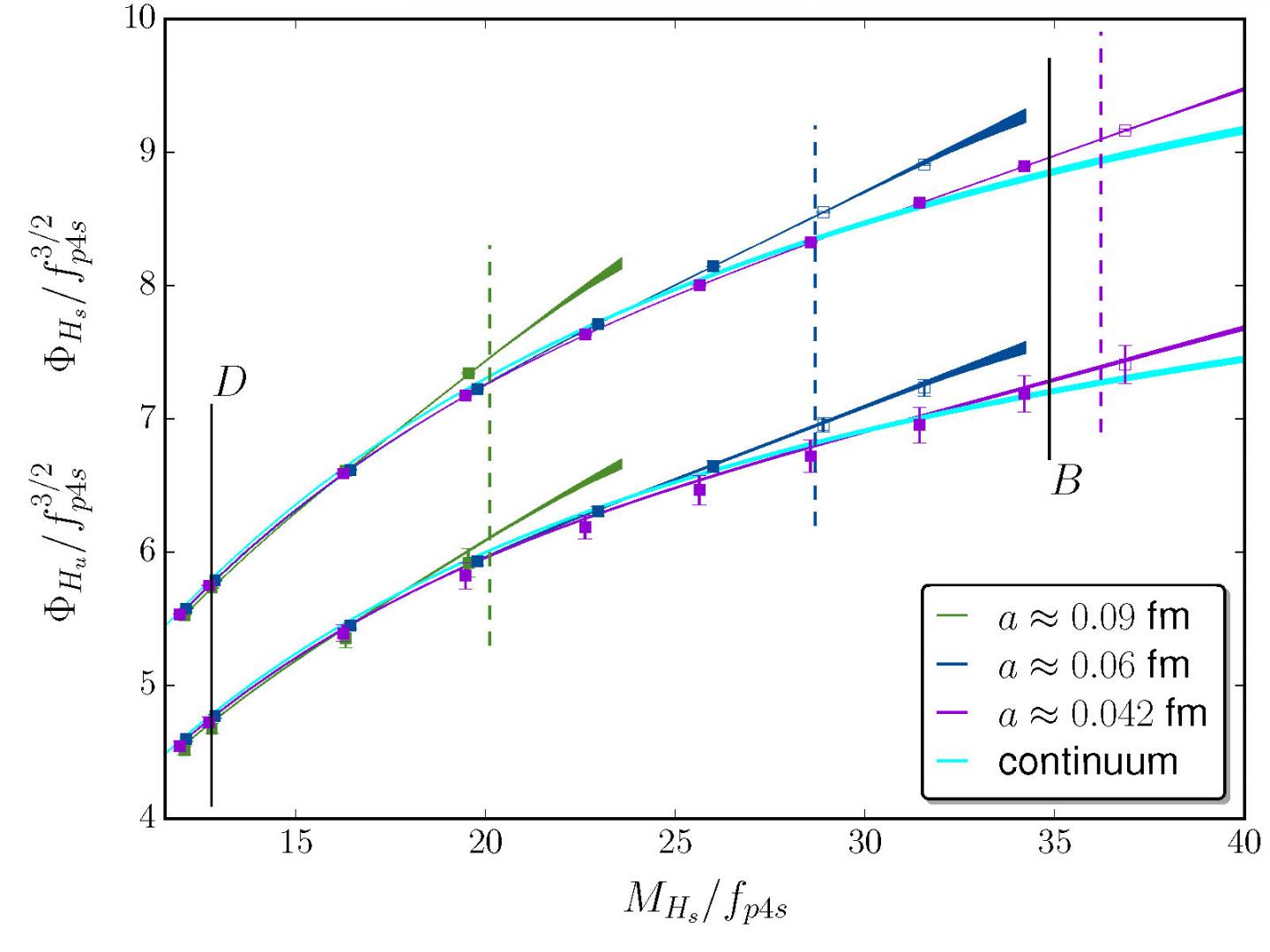

One state-of-the-art approach applied by the researchers uses the so-called highly improved staggered quark (HISQ) formalism to simulate interactions of quarks with gluons. On Frontera, DeTar and Gottlieb are currently simulating at a lattice spacing of 0.06 femtometers (1 femtometer is 10⁻¹⁵ meters), but they are quickly approaching their ultimate goal of 0.03 femtometers, a distance where the lattice spacing is smaller than the wavelength of the heaviest quark, which removes a significant source of uncertainty from these calculations.
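A rough check of the wavelength comparison can be made in a few lines. The sketch below rests on assumptions the article does not spell out: that the "heaviest quark" is the bottom quark (the heaviest one the article names), that "wavelength" means the reduced Compton wavelength ħc/(mc²), and that the bottom quark mass is roughly 4.18 GeV. The constant and function names are illustrative.

```python
# Assumed inputs, not taken from the article:
HBAR_C_MEV_FM = 197.327    # hbar * c in MeV * femtometers
BOTTOM_MASS_MEV = 4180.0   # approximate bottom quark mass in MeV


def reduced_compton_wavelength_fm(mass_mev: float) -> float:
    """Reduced Compton wavelength, hbar*c / (m*c^2), in femtometers."""
    return HBAR_C_MEV_FM / mass_mev


wavelength = reduced_compton_wavelength_fm(BOTTOM_MASS_MEV)
print(f"bottom quark wavelength: {wavelength:.4f} fm")

# Lattice spacings quoted in the article, in femtometers.
for spacing_fm in (0.06, 0.03):
    relation = "smaller" if spacing_fm < wavelength else "larger"
    print(f"spacing {spacing_fm} fm is {relation} than the wavelength")
```

With these inputs the wavelength comes out a little under 0.05 femtometers, so the current 0.06-femtometer spacing is coarser than the wavelength and the 0.03-femtometer goal is finer, which is consistent with the article's description.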

Each doubling of resolution, however, requires about two orders of magnitude more computing power, putting a 0.03-femtometer lattice spacing firmly in the quickly approaching ‘exascale’ regime.
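The scaling quoted above can be turned into a small back-of-the-envelope calculation. The sketch below takes "about two orders of magnitude" to mean a factor of 100 per doubling, which is an assumption, and compares it with the growth in the number of lattice points in four spacetime dimensions at fixed physical volume. The function names are hypothetical and the model is far cruder than a real cost estimate.

```python
import math

# Assumption: "about two orders of magnitude" per doubling means a factor of 100.
COST_FACTOR_PER_DOUBLING = 100.0


def relative_cost(spacing_from_fm: float, spacing_to_fm: float) -> float:
    """Estimated growth in computing cost when refining the lattice spacing."""
    doublings = math.log2(spacing_from_fm / spacing_to_fm)
    return COST_FACTOR_PER_DOUBLING ** doublings


def site_count_factor(spacing_from_fm: float, spacing_to_fm: float,
                      dimensions: int = 4) -> float:
    """Growth in the number of lattice points at fixed physical volume."""
    return (spacing_from_fm / spacing_to_fm) ** dimensions


print(f"cost factor, 0.06 fm to 0.03 fm: {relative_cost(0.06, 0.03):.0f}")
print(f"site count factor: {site_count_factor(0.06, 0.03):.0f}")
print(f"effective exponent: {math.log2(COST_FACTOR_PER_DOUBLING):.2f}")
```

Halving the spacing multiplies the number of lattice points by only 16, so most of the hundredfold increase has to come from elsewhere, for example from the solvers needing more work per point on finer lattices. Read as a power law, the quoted scaling corresponds to a cost that grows roughly as the inverse spacing to the power 6.6.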

“The cost of calculations keeps rising as you make the lattice spacing smaller,” DeTar said. “For smaller lattice spacing, we’re thinking of future Department of Energy machines and the Leadership Class Computing Facility [TACC’s future system in planning]. But we can make do with extrapolations now.”

THE ANOMALOUS MAGNETIC MOMENT OF THE MUON AND OTHER OUTSTANDING MYSTERIES

Among the phenomena that DeTar and Gottlieb are tackling is the anomalous magnetic moment of the muon (essentially a heavy electron) – which, in quantum field theory, arises from a weak cloud of elementary particles that surrounds the muon. The same sort of cloud affects particle decays. Theorists believe yet-undiscovered elementary particles could potentially be in that cloud.

A large international collaboration called the Muon g-2 Theory Initiative recently reviewed the present status of the Standard Model calculation of the muon’s anomalous magnetic moment. Their review appeared in Physics Reports in December 2020. DeTar, Gottlieb and several of their Fermilab Lattice, HPQCD and MILC collaborators are among the coauthors. They find a 3.7 standard deviation difference between experiment and theory.
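The 3.7 standard deviation figure can be reproduced, at least approximately, with simple arithmetic. The sketch below assumes the two uncertainties are independent and Gaussian, so that they combine in quadrature. The numerical inputs are not given in this article; they are the experimental average and Standard Model value as commonly quoted from the 2020 review, in units of 10⁻¹¹, and should be checked against the publication before being relied on.

```python
import math


def tension_in_sigma(value_a: float, error_a: float,
                     value_b: float, error_b: float) -> float:
    """Difference between two results in units of their combined uncertainty,
    assuming independent Gaussian errors added in quadrature."""
    combined_error = math.hypot(error_a, error_b)
    return abs(value_a - value_b) / combined_error


# Assumed inputs in units of 1e-11 (not stated in the article).
A_MU_EXPERIMENT, A_MU_EXPERIMENT_ERROR = 116_592_089, 63
A_MU_THEORY, A_MU_THEORY_ERROR = 116_591_810, 43

tension = tension_in_sigma(A_MU_EXPERIMENT, A_MU_EXPERIMENT_ERROR,
                           A_MU_THEORY, A_MU_THEORY_ERROR)
print(f"difference: {A_MU_EXPERIMENT - A_MU_THEORY} x 1e-11")
print(f"tension: {tension:.1f} standard deviations")
```

The same function shows why both sides of the comparison matter: shrinking either the experimental or the theoretical uncertainty raises the tension if the central values stay put, and lowers it if they move toward each other.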

While some parts of the theoretical contributions can be calculated with extreme accuracy, the hadronic contributions (those arising from hadrons, the class of subatomic particles that are composed of two or three quarks and participate in strong interactions) are the most difficult to calculate and are responsible for almost all of the theoretical uncertainty. Lattice QCD is one of two ways to calculate these contributions.

“The experimental uncertainty will soon be reduced by up to a factor of four by the new experiment currently running at Fermilab, and also by the future J-PARC experiment,” they wrote. “This and the prospects to further reduce the theoretical uncertainty in the near future… make this quantity one of the most promising places to look for evidence of new physics.”

Gottlieb, DeTar and collaborators have calculated the hadronic contribution to the anomalous magnetic moment with a precision of 2.2 percent. “This gives us confidence that our short-term goal of achieving a precision of 1 percent on the hadronic contribution to the muon anomalous magnetic moment is now a realistic one,” Gottlieb said. They hope to achieve a precision of 0.5 percent a few years later.

Other ‘tantalizing’ hints of new physics involve measurements of the decay of B mesons. There, various experimental methods arrive at different results. “The decay properties and mixings of the D and B mesons are critical to a more accurate determination of several of the least well-known parameters of the Standard Model,” Gottlieb said. “Our work is improving the determinations of the masses of the up, down, strange, charm and bottom quarks and how they mix under weak decays.” The mixing is described by the so-called CKM mixing matrix for which Kobayashi and Maskawa won the 2008 Nobel Prize in Physics.

The answers DeTar and Gottlieb seek are the most fundamental in science: What is matter made of? And where did it come from?

“The Universe is very connected in many ways,” said DeTar. “We want to understand how the Universe began. The current understanding is that it began with the Big Bang. And the processes that were important in the earliest instance of the Universe involve the same interactions that we’re working with here. So, the mysteries we’re trying to solve in the microcosm may very well provide answers to the mysteries on the cosmological scale as well.”

###

Media Contact

Aaron Dubrow
