As robotics evolves rapidly, enabling robots to interact efficiently and intelligently with unknown objects remains an active research challenge. A recent study by Wu, Li, Liu, and colleagues presents advances in autonomous robotic capabilities, focusing on grasping and robot-to-robot handover of unfamiliar items. The research could improve operational efficiency in industries such as logistics, healthcare, and manufacturing by letting robots handle new objects autonomously, without a conventional learning or training phase.

Historically, robot programming required extensive input from developers, along with training data, to facilitate interaction with objects. The need for prior knowledge or advance learning limited the flexibility and adaptability of robotic systems. Wu and colleagues propose an approach that removes these learning dependencies, allowing robots to grasp and manipulate unknown objects autonomously in real time. This advancement could pave the way for more intuitive and user-friendly robotic applications in everyday scenarios, bringing us one step closer to seamless human-robot collaboration.

The core innovation outlined in this study revolves around developing algorithms that allow robots to perceive objects through sophisticated sensory inputs, enabling them to assess the geometry, weight, and other properties of items as they are encountered. The idea is that these robots, equipped with enhanced sensory systems, can analyze objects’ attributes instantly, making calculated decisions on how to grasp them without prior training. Such a shift in methodology not only optimizes time but also boosts the robots’ functional versatility, significantly widening their operational scope in unpredictable environments.
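To make the idea of choosing a grasp from geometry alone more concrete, here is a minimal sketch. It is not the authors' algorithm: it assumes the object is seen as a set of 2D points from above, and it uses a standard principal-axis heuristic (close the gripper across the object's narrowest direction). The function name `plan_grasp` and the `max_opening` parameter are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def plan_grasp(points: List[Point], max_opening: float) -> Dict[str, object]:
    """Pick a grasp from geometry only (illustrative heuristic, not the paper's method)."""
    n = len(points)
    # Centroid of the observed points: used as the grasp center.
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n
    # Entries of the 2x2 covariance matrix of the points.
    sxx = sum((p[0] - cx) ** 2 for p in points) / n
    syy = sum((p[1] - cy) ** 2 for p in points) / n
    sxy = sum((p[0] - cx) * (p[1] - cy) for p in points) / n
    # Orientation of the major (longest) axis of the point set.
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    # Close the fingers along the minor axis, perpendicular to the major axis.
    closing = (-math.sin(theta), math.cos(theta))
    # Object width along the closing direction.
    proj = [(p[0] - cx) * closing[0] + (p[1] - cy) * closing[1] for p in points]
    width = max(proj) - min(proj)
    return {
        "center": (cx, cy),
        "closing_dir": closing,
        "width": width,
        "feasible": width <= max_opening,  # hypothetical gripper limit
    }


# Corners of a 10 x 2 box seen from above (made-up example data).
box = [(0.0, 0.0), (10.0, 0.0), (10.0, 2.0), (0.0, 2.0)]
grasp = plan_grasp(box, max_opening=8.0)
```

For the box above, the heuristic closes the gripper across the short side, so the required opening is the box's 2-unit width rather than its 10-unit length. No training data is involved; the decision follows directly from the measured shape.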

One of the key aspects of the research is the reduction of dependency on extensive training datasets, which have proven to be cumbersome and resource-intensive. The researchers have introduced a new framework that incorporates a combination of real-time object recognition and kinematic planning. This dual-layered approach enables robots to navigate complex scenarios where human intervention is minimal, thereby fostering independence in robotic operations. It allows these machines to respond dynamically to their environment, distinguishing themselves from traditional robotic systems that rely on predetermined pathways and actions.
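As a rough illustration of the kinematic side of such a pipeline, the sketch below checks whether a candidate grasp point is reachable and computes joint angles for it. It assumes a simplified two-link planar arm and uses the textbook closed-form inverse kinematics; the study's robots and planner are not described at this level of detail, so the arm model and the name `two_link_ik` are assumptions made purely for illustration.

```python
import math
from typing import Optional, Tuple


def two_link_ik(x: float, y: float, l1: float, l2: float) -> Optional[Tuple[float, float]]:
    """Joint angles (q1, q2) placing a two-link planar arm's tip at (x, y).

    Returns None when the target is out of reach. Only one of the two
    possible elbow configurations (q2 >= 0) is returned.
    """
    # Law of cosines gives the elbow angle from the target distance.
    cos_q2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_q2) > 1.0:
        return None  # target is too far away or too close to the base
    q2 = math.acos(cos_q2)
    # Shoulder angle: direction to the target minus the offset caused by the elbow bend.
    q1 = math.atan2(y, x) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    return q1, q2


reachable = two_link_ik(1.0, 1.0, l1=1.0, l2=1.0)    # a pair of joint angles
unreachable = two_link_ik(3.0, 0.0, l1=1.0, l2=1.0)  # None: beyond the arm's reach
```

A perception step would supply the target point, and a check like this one would filter out grasp candidates the arm cannot physically reach before any motion is attempted.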

In the study, the researchers conducted a series of experiments to evaluate the efficacy of their framework. Robots were subjected to various scenarios that involved picking up and handing over objects of different shapes and sizes, with no pre-acquired knowledge about these items. Results showed a marked improvement in grasp success rates compared to existing models, highlighting the potential for this technology to offer real-time solutions for industrial applications.

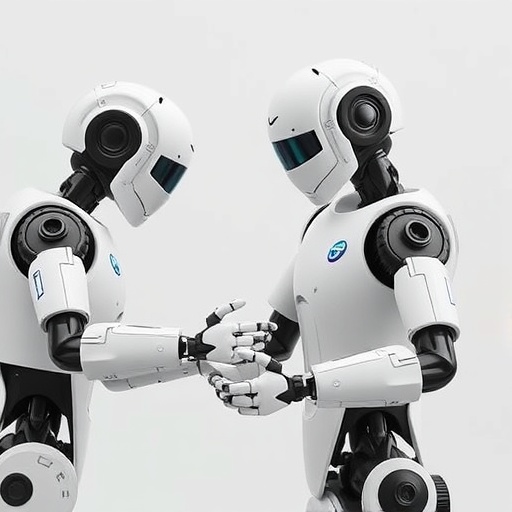

Furthermore, another significant innovation was the implementation of robot-to-robot handover systems. The ability for one robotic arm to hand over an object to another eliminates the need for human intermediaries in many contexts. This feature has far-reaching implications, particularly in logistical operations where timelines are critical. The handover mechanism was designed to be smooth and reliable, ensuring that the receiving robot can comprehend and adjust to the object being handed over, facilitating a seamless transition that mimics human interaction.

One of the central motivations behind this research is to address the increasing demand for automation in environments where human presence is limited or undesirable. For instance, in hazardous workplaces or during surgical operations, robots that can operate autonomously while managing tasks such as grasping and object handover reduce human risk and improve overall efficiency. This approach could significantly transform sectors by allowing robots to function in tandem with human workers, effectively augmenting workforce capabilities.

The implications extend beyond commercial applications. The researchers emphasize that this technology could facilitate advancements in assistive robotics, where social robots are capable of responding intelligently to user commands and needs. In domestic settings, for example, robots could autonomously retrieve household items for individuals with mobility challenges, promising enhanced quality of life and independence.

Additionally, the researchers shed light on the ethical dimensions of deploying such advanced robotics technologies. The study underscores the importance of developing robots that can operate transparently and collaboratively alongside humans, ensuring that they complement rather than compete with human labor. As robotics continues to permeate our daily lives, considerations around trust and transparency in robotic decision-making have become increasingly essential.

The implications of this research are profound, suggesting that we are on the brink of a paradigm shift in how we perceive and implement robotic assistance in everyday tasks. The ability to explore an unknown environment and interact with unfamiliar objects freely could redefine our understanding of autonomous machines. By focusing on intuitive designs that enhance robot-human collaboration, the authors invite further research in this field to create societies where robots can serve as valuable partners.

As the robotics community continues to innovate, the study points to a promising future in which robots adapt to their environment while performing tasks, without a separate training phase. Researchers across disciplines can treat these developments as opportunities to advance industry practice by creating synergies between humans and machines that benefit society as a whole. The promise lies not just in improving existing frameworks but also in pioneering new methodologies that address contemporary challenges faced by industries globally.

In conclusion, Wu et al.’s research presents a significant leap toward realizing the potential of autonomous robots in navigating and interacting with the world without extensive previous training. Their findings have major implications not only for industrial operations but for the future of robotics in everyday life. By advancing the capacities of robots to act autonomously and intelligently, the study lays the groundwork for more profound inquiries into the integration of robotics within society, affecting various sectors and highlighting the importance of collaborative interfaces between machines and humans.

Subject of Research: Autonomous learning-free grasping and robot-to-robot handover of unknown objects

Article Title: Autonomous learning-free grasping and robot-to-robot handover of unknown objects

Article References: Wu, Y., Li, W., Liu, Z. et al. Autonomous learning-free grasping and robot-to-robot handover of unknown objects. Auton Robot 49, 18 (2025). https://doi.org/10.1007/s10514-025-10201-y

Image Credits: AI Generated

DOI: https://doi.org/10.1007/s10514-025-10201-y

Keywords: Robotics, Autonomous Systems, Grasping, Robot Handover, Machine Learning, Human-Robot Interaction, Object Recognition.

Tags: autonomous learning in robotics, enhancing operational efficiencies robotics, flexible robotic systems development, human-robot collaboration improvements, intelligent robot interactions, intuitive robotic applications, logistics and healthcare robotics, novel algorithms for object perception, real-time object manipulation robots, robotic capabilities advancements, robots autonomous object handover, unknown object grasping technology