Modeling the emergence and spread of biological threats isn’t as routine as forecasting the weather, but scientists in two of the U.S. Department of Energy’s (DOE) national laboratories were awarded funding to try to make it so.

Credit: (Image by Argonne National Laboratory.)


A joint project of DOE’s Argonne National Laboratory and Sandia National Laboratories was one of three projects to share a total of $5 million from DOE to advance computational tools that help the nation prepare for natural and human-created biological threats. The two laboratories will work together to couple Sandia’s algorithms for calibrating models against real-world outcomes with Argonne’s high performance models of disease spread, transmission and control.


The projects fall under the DOE Office of Science’s new Bio-preparedness Research Virtual Environment initiative, which focuses on developing scientific capabilities that help prevent and respond to potential biothreats.

“We want models that mimic reality,” said Jonathan Ozik, principal computational scientist at Argonne. “By calibrating them, we will be able to trust that outcomes from computational experiments carried out with the models have a good chance of meaningfully reflecting reality.”

Those outcomes can provide insight into the intricacies of disease transmission as well as the effectiveness of vaccination efforts. Shared with municipal and state public health officials, the outcomes could help guide the development of mitigation initiatives.

The disease model Argonne developed was first used in the early 2000s, when an MRSA epidemic emerged in Chicago. The same model was later applied to the threat of an Ebola outbreak in 2014.

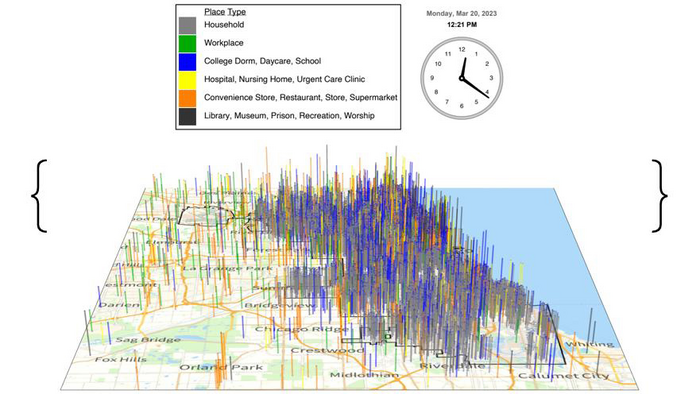

More recently, Argonne researchers developed CityCOVID, a highly refined version of the model, to simulate the spread of COVID-19 in Chicago. By forecasting new infections, hospitalizations and deaths, the model provided a computational platform for investigating the potential impacts of nonpharmaceutical interventions for mitigating the spread of COVID-19. It was chosen as one of only four finalists for the 2020 ACM Gordon Bell Special Prize for High Performance Computing-Based COVID-19 Research, which recognized outstanding research achievement towards the understanding of the COVID-19 pandemic through the use of high performance computing (HPC).

Building such models, known as agent-based models (ABMs), is a complex effort in which HPC becomes critical. That is because of the large amount of data the model requires, the number of parameters researchers must take into account and the additional factors or inputs that combine to make the model as accurate as possible.

“Getting access to the data, landing on the right parameters and evolving the best fit of information that is timely and relevant to decision makers are among the biggest problems we face,” said Charles “Chick” Macal, chief scientist for Argonne’s Decision and Infrastructure Sciences division and its social, behavioral and decision science group leader. “A large component of the work that we do as part of the biopreparedness project is to develop high performance computing workflows to improve the computational techniques, to make them more efficient.”

With its parameters and factors in place, the model has to reproduce the real-world targets researchers want to understand, such as numbers of hospitalizations, deaths and vaccinations. To get it right, researchers run simulations over and over (sometimes hundreds of thousands of times), adjusting parameters until the model mimics what the data is telling them.
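To make the “over and over” concrete, here is a minimal Python sketch of naïve calibration under stated assumptions. Everything in it is hypothetical: `simulate_abm` is a toy stochastic agent-based SIR model (not CityCOVID), the single parameter `beta` stands in for the spread rate, the “observed” data are synthetic, and the fit is a plain grid search on squared error. Because each ABM run is random, every candidate value must be simulated several times and averaged, so even this one-parameter toy needs 100 runs to test 10 candidates.

```python
import random

def simulate_abm(beta, n_agents=1000, n_initial=10, gamma=0.2, days=30, seed=0):
    """Toy stochastic agent-based SIR model (hypothetical, for illustration only).

    Each day, every susceptible agent is infected with probability
    1 - (1 - beta / n_agents) ** infected, and every infected agent
    recovers with probability gamma. Returns daily new infections.
    """
    rng = random.Random(seed)
    susceptible, infected = n_agents - n_initial, n_initial
    daily_new = []
    for _ in range(days):
        p_inf = 1.0 - (1.0 - beta / n_agents) ** infected
        new_cases = sum(rng.random() < p_inf for _ in range(susceptible))
        recoveries = sum(rng.random() < gamma for _ in range(infected))
        susceptible -= new_cases
        infected += new_cases - recoveries
        daily_new.append(new_cases)
    return daily_new

def calibrate_brute_force(observed, candidates, n_reps=10):
    """Naive calibration: simulate every candidate many times, keep the best fit."""
    best_beta, best_err, n_runs = None, float("inf"), 0
    for beta in candidates:
        # Average replicate runs to smooth out the ABM's randomness.
        runs = [simulate_abm(beta, days=len(observed), seed=r) for r in range(n_reps)]
        n_runs += n_reps
        mean = [sum(day) / n_reps for day in zip(*runs)]
        err = sum((m - o) ** 2 for m, o in zip(mean, observed))
        if err < best_err:
            best_beta, best_err = beta, err
    return best_beta, n_runs

observed = simulate_abm(0.5, seed=123)  # synthetic stand-in for surveillance data
candidates = [round(0.1 * k, 1) for k in range(1, 11)]
beta_hat, n_runs = calibrate_brute_force(observed, candidates)
print(beta_hat, n_runs)  # n_runs == 100 (10 candidates x 10 replicates)
```

A real ABM has many parameters rather than one, and a grid over several of them multiplies the run count, which is how calibration reaches the hundreds of thousands of simulations described above.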

Making sure these components align, and reducing both the number of simulations and their run time, is where Sandia’s efforts come into play. Like Argonne, Sandia already had roots in computational epidemiology, starting with the anthrax scare of 2003. Later work focused on smallpox and, most recently, COVID.

For this project, Argonne’s computing infrastructure will be adapted to automatically apply Sandia-developed calibration algorithms as parameters for the epidemiological models change or new ones come to light.

“We are the guys who search out those parameters,” said Sandia’s Jaideep Ray, principal investigator of the project.

One of the most important parameters is the disease’s unknown spread rate, according to Ray. Calibrating the model’s predictions against real data (much of it COVID-19 data from Chicago and New Mexico, along with other public health surveillance sources) by optimizing the spread rate over several weeks of simulation runs and data collection allows the model to forecast future case counts in an epidemic.

“If the forecasts are right, then we know we have the right set of parameters,” said Ray.
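One common way to write this calibration down, assuming a least-squares fit (the article does not say which objective Sandia’s algorithms use), is shown below. Here $y_t$ is the observed case count in period $t$ of a $T$-period calibration window, $m_t^{(r)}(\beta)$ is the count produced by replicate $r$ of the ABM run with spread rate $\beta$, and $R$ is the number of replicates averaged to smooth out the model’s randomness. The calibrated $\hat{\beta}$ is then used to forecast $h$ periods ahead:

$$
\hat{\beta} = \arg\min_{\beta} \sum_{t=1}^{T} \left( y_t - \bar{m}_t(\beta) \right)^2,
\qquad
\bar{m}_t(\beta) = \frac{1}{R} \sum_{r=1}^{R} m_t^{(r)}(\beta)
$$

$$
\hat{y}_{T+h} = \bar{m}_{T+h}(\hat{\beta}), \qquad h = 1, 2, \ldots
$$

Ray’s check follows directly from this setup: if the forecasts $\hat{y}_{T+h}$ track the case counts that arrive later, $\hat{\beta}$ is a trustworthy estimate of the spread rate.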

When it comes to infectious disease epidemics, time is of the essence. Naïve calibration, which requires running an ABM thousands of times, is neither efficient nor practical. Using machine learning, a branch of artificial intelligence, researchers can instead construct and train a metamodel (a model of their ABM) that runs in seconds. The metamodel can then be used to “learn” the spread rate from epidemic data and make forecasts.

While the process may sacrifice some accuracy in long-term forecasts, it could generate faster short-term forecasts that reduce computational expense and set mitigation efforts in motion more quickly.
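The metamodel idea can be sketched in the same spirit. In this minimal Python example, which rests on hypothetical names and a toy ABM, a simple linear-interpolation emulator stands in for the machine-learned metamodel (the article does not specify the learning method). The expensive step, 100 ABM runs at 10 design values of `beta`, happens once; afterward, scoring 91 candidate spread rates against three weeks of synthetic data costs only interpolation, and the same surrogate produces a short-term forecast.

```python
import bisect
import random

def simulate_abm(beta, n_agents=1000, n_initial=10, gamma=0.2, days=40, seed=0):
    """Toy stochastic agent-based SIR model (hypothetical). Returns daily new infections."""
    rng = random.Random(seed)
    susceptible, infected = n_agents - n_initial, n_initial
    daily_new = []
    for _ in range(days):
        # Infection probability = 1 - P(escaping transmission from every infected agent).
        p_inf = 1.0 - (1.0 - beta / n_agents) ** infected
        new_cases = sum(rng.random() < p_inf for _ in range(susceptible))
        recoveries = sum(rng.random() < gamma for _ in range(infected))
        susceptible -= new_cases
        infected += new_cases - recoveries
        daily_new.append(new_cases)
    return daily_new

def build_surrogate(design_betas, n_reps=10, days=40):
    """'Train' a cheap emulator of the ABM from a small batch of expensive runs."""
    table = []
    for beta in design_betas:
        runs = [simulate_abm(beta, days=days, seed=r) for r in range(n_reps)]
        table.append([sum(day) / n_reps for day in zip(*runs)])

    def surrogate(beta):
        # Linear interpolation between the two nearest design points (sorted list).
        i = bisect.bisect_right(design_betas, beta) - 1
        i = min(max(i, 0), len(design_betas) - 2)
        b0, b1 = design_betas[i], design_betas[i + 1]
        w = (beta - b0) / (b1 - b0)
        return [(1 - w) * y0 + w * y1 for y0, y1 in zip(table[i], table[i + 1])]

    return surrogate

def calibrate(surrogate, observed, grid):
    """Pick the spread rate whose emulated curve best matches the observed window."""
    n = len(observed)

    def sse(beta):
        return sum((p - o) ** 2 for p, o in zip(surrogate(beta)[:n], observed))

    return min(grid, key=sse)

design = [round(0.1 * k, 1) for k in range(1, 11)]    # 10 design points
surrogate = build_surrogate(design)                   # 100 ABM runs, done once
truth = simulate_abm(0.5, seed=123)                   # synthetic "surveillance" data
observed = truth[:21]                                 # first three weeks only
grid = [round(0.1 + 0.01 * k, 2) for k in range(91)]  # 0.10 to 1.00
beta_hat = calibrate(surrogate, observed, grid)       # 91 cheap surrogate calls
forecast = surrogate(beta_hat)[21:]                   # short-term forecast, days 22-40
print(beta_hat, [round(x) for x in forecast[:7]])
```

A more flexible learned model, such as a Gaussian process or a neural network, could take the place of `build_surrogate`; either way, the division of labor is the same: costly ABM runs once to train the metamodel, then fast queries every time new data arrive.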

“Our whole point in doing this type of work is to make the process routine, more akin to weather forecasting or other domains where a large computational infrastructure is dedicated to continuously adjusting the models automatically as new data is obtained,” said Ozik. “We can then provide short-term forecasts and the ability to run longer-term scenarios that answer specific stakeholder questions.”

While Chicago served as the testbed for this model, the team expects to generalize its methods so they can be applied anywhere else in the world.

Award funding was provided by the DOE’s Advanced Scientific Computing Research program, which manages the development of computational systems and tools for the DOE.

Argonne National Laboratory seeks solutions to pressing national problems in science and technology. The nation’s first national laboratory, Argonne conducts leading-edge basic and applied scientific research in virtually every scientific discipline. Argonne researchers work closely with researchers from hundreds of companies, universities, and federal, state and municipal agencies to help them solve their specific problems, advance America’s scientific leadership and prepare the nation for a better future. With employees from more than 60 nations, Argonne is managed by UChicago Argonne, LLC for the U.S. Department of Energy’s Office of Science.

The U.S. Department of Energy’s Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.