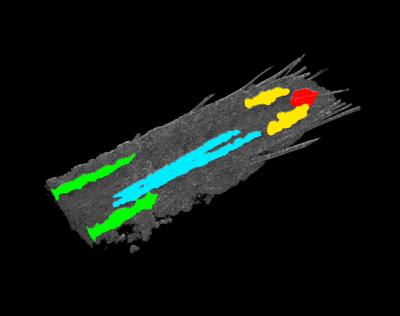

Credit: Yujia Hu, U-M Life Sciences Institute

Fruit flies may be able to teach researchers a thing or two about artificial intelligence.

University of Michigan biologists and their colleagues have uncovered a neural network that enables Drosophila melanogaster fruit flies to convert external stimuli of varying intensities into a “yes or no” decision about when to act.

The research, scheduled for publication Oct. 15 in the journal Current Biology, offers hints about how these decisions work in other species, and could perhaps even be applied to help AI machines learn to categorize information.

Imagine you are working near an open window. If the outside noise is low enough, you may not even notice it. As the noise level gradually increases, you start to notice it more, and eventually your brain makes a decision about whether to get up and close the window.

But how does the nervous system translate that gradual, linear increase in intensity to a binary, “yes/no” behavioral decision?

“That’s a really big question,” said neuroscientist Bing Ye, a faculty member at the University of Michigan Life Sciences Institute and senior author of the study. “Between the sensory input and the behavior output is a bit of a ‘black box.’ With this study, we wanted to open that box.”

Brain imaging in humans or other mammals can identify certain regions of the brain that respond to particular stimuli. But to determine how and when the neurons transform linear information into a nonlinear decision, the researchers needed a much deeper, more quantitative analysis of the nervous system, Ye said.

They chose to work with the model organism Drosophila, due to the availability of genetic tools that make it possible to identify individual neurons responding to stimuli.

Using an imaging technique that detects neuronal activity through calcium signaling between neurons, the scientists were able to produce 3-D neuroactivity imaging of the flies’ entire central nervous system.

“What we saw was that, when we stimulate the sensory neurons that detect harmful stimuli, quite a few brain regions light up within seconds,” said Yujia Hu, a research investigator at the LSI and one of the lead authors on the study. “But these brain regions perform different functions. Some are immediately processing sensory information, some spark the behavioral output, but some are more for this transformation process that occurs in between.”

When sensory neurons detect the harmful external stimuli, they send information to second-order neurons in the central nervous system. The researchers found that one region of the nervous system in particular, called the posterior medial core, responds to sensory information by either muting less intense signals or amplifying more intense signals, effectively sorting a gradient of sensory inputs into “respond” or “don’t respond” categories.

The signals are amplified by recruiting more second-order neurons into the neural network, a process the researchers refer to as escalated amplification. A mild stimulus could activate two second-order neurons, for example, while a more intense stimulus may activate 10 second-order neurons in the network. The larger network can then prompt a behavioral response.

But to make a “yes/no” decision, the nervous system needs a way not just to amplify information (for a “yes” response), but also to suppress unnecessary or less harmful information (for a “no” response).

“Our sensory system detects and tells us a lot more than we realize,” said Ye, who is also a professor of cell and developmental biology in the U-M Medical School. “We need a way to quiet that information, or we would just constantly have exponential amplification.”

Using the 3-D imaging, the researchers found that the sensory neurons actually do detect the less harmful stimuli, but that information is filtered out by the posterior medial core, through the release of a chemical that represses neuron-to-neuron communication.
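As a rough illustration of how amplification and suppression together can turn a graded input into a binary outcome, here is a minimal toy sketch in Python. It is not the model from the study: the function names, the 0-to-1 intensity scale, and every parameter value (`inhibition`, `gain`, `pool_size`, `response_threshold`) are hypothetical, chosen only so that a mild stimulus recruits two second-order neurons and an intense one recruits 10, echoing the example above.

```python
def recruited_neurons(intensity, pool_size=10, inhibition=0.3, gain=4.0):
    """Toy count of second-order neurons recruited by a stimulus.

    `intensity` is a made-up scale from 0.0 (no stimulus) to 1.0 (strongest).
    All parameter values are illustrative assumptions, not measurements.
    """
    # Suppression: drive at or below the inhibition level is muted entirely.
    # Amplification: whatever exceeds it is multiplied by the gain.
    drive = max(0.0, intensity - inhibition) * gain
    # Recruitment grows with the drive but cannot exceed the available pool.
    return min(pool_size, round(drive * pool_size))


def decide(intensity, response_threshold=5):
    """Return True ("respond") once enough neurons have been recruited."""
    return recruited_neurons(intensity) >= response_threshold


if __name__ == "__main__":
    for intensity in (0.2, 0.35, 0.8):
        count = recruited_neurons(intensity)
        print(intensity, count, "respond" if decide(intensity) else "don't respond")
```

With these assumed values, an input of 0.2 is filtered out completely, 0.35 recruits two neurons but stays below the response threshold, and 0.8 saturates the pool at 10 neurons and triggers a response. The decision is computed in a single pass, with no step that accumulates evidence over time.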

Together, the findings decode the biological mechanism that the fruit fly nervous system uses to convert a gradient of sensory information into a binary behavioral response. And Ye believes this mechanism could have far wider applications.

“There is a dominant idea in our field that these decisions are made by the accumulation of evidence, which takes time,” Ye said. “In the biological mechanism we found, the network is wired in a way that it does not need an evidence accumulation phase. We don’t know yet, but we wonder if this could serve as a model to help AI learn to sort information more quickly.”

###

The research was supported by the National Institutes of Health. Study authors also included Limin Yang, Geng Pan and Hao Liu of U-M and Congchao Wang and Guoqiang Yu of Virginia Tech.

Study: A neural basis for categorizing sensory stimuli to enhance decision accuracy (DOI:10.1016/j.cub.2020.09.045)

Additional contact:

Emily Kagey

734-615-6228


Media Contact

Jim Erickson

