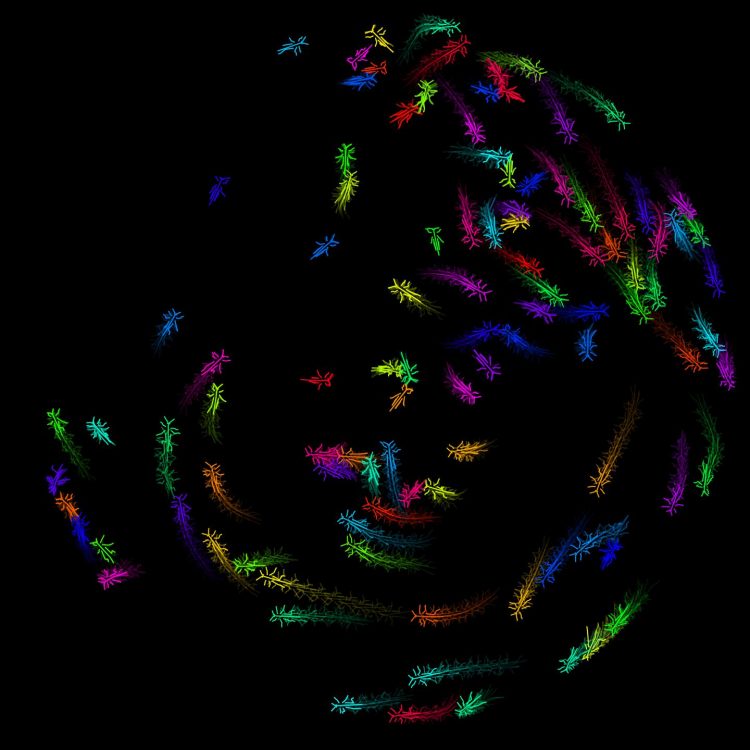

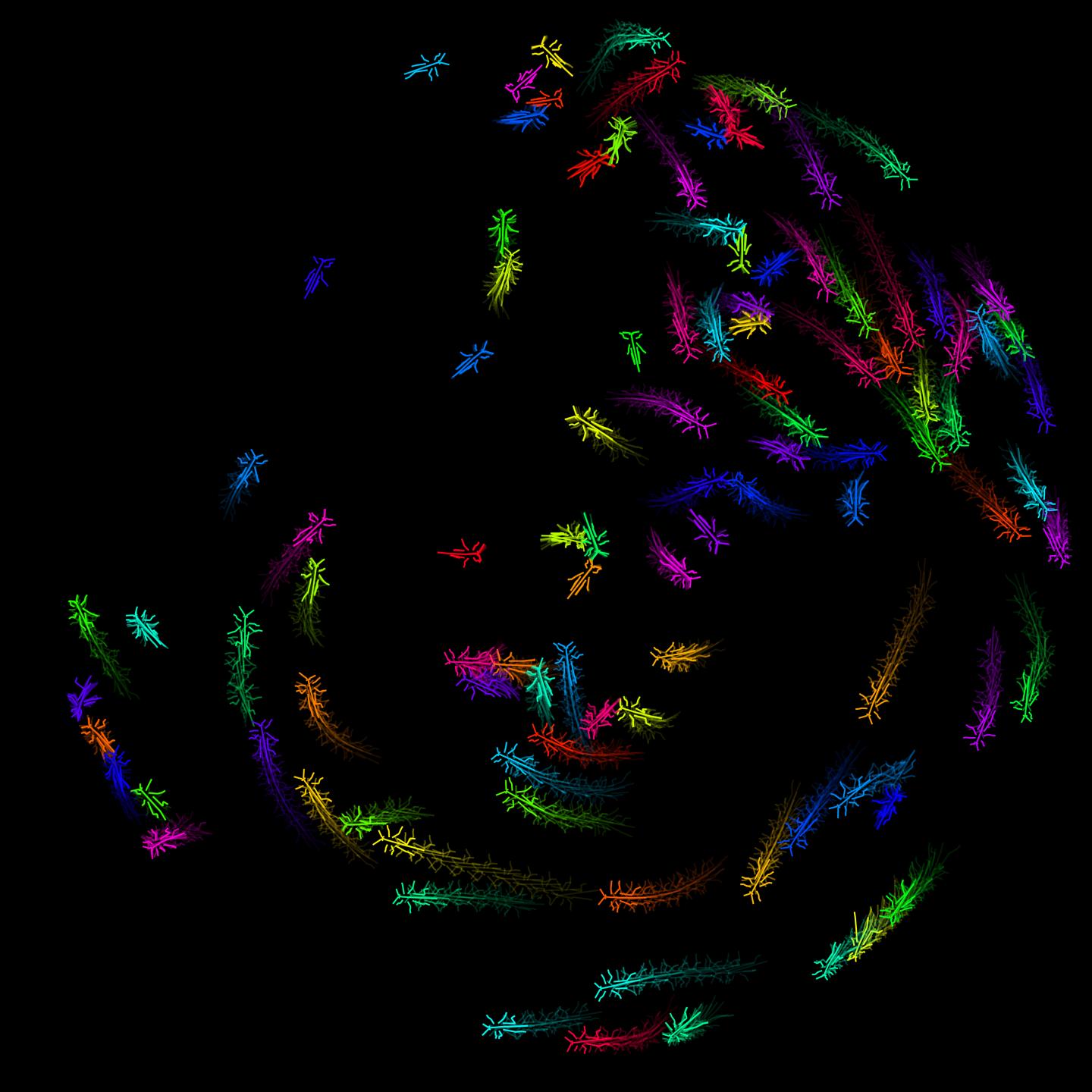

Credit: Jake Graving

A new toolkit goes beyond existing machine learning methods by measuring body posture in animals with high speed and accuracy. Developed by researchers from the Centre for the Advanced Study of Collective Behaviour at the University of Konstanz and the Max Planck Institute of Animal Behavior, this deep learning toolkit, called DeepPoseKit, combines previous methods for pose estimation with state-of-the-art developments in computer science. These newly-developed deep learning methods can correctly measure body posture from previously-unseen images after being trained with only 100 examples and can be applied to study wild animals in challenging field settings. Published today in the open access journal eLife, the study is advancing the field of animal behaviour with next-generation tools while at the same time providing an accessible system for non-experts to easily apply machine learning to their behavioural research.

Animals must interact with the physical world in order to survive and reproduce, and studying their behaviour can reveal the solutions that have evolved for achieving these ultimate goals. Yet behaviour is hard to define just by observing it directly: the biases and limited processing power of human observers restrict the quality and resolution of behavioural data that can be collected from animals.

Machine learning has changed that. Various tools have been developed in recent years that allow researchers to automatically track the locations of animals’ body parts directly from images or videos – without the need to apply intrusive markers to animals or manually score behaviour. These methods, however, have shortcomings that limit performance. “Existing tools for measuring body posture with deep learning were either slower and more accurate or faster and less accurate – but we wanted to achieve the best of both worlds,” says lead author Jake Graving, a graduate student at the Max Planck Institute of Animal Behavior.

In the new study, the researchers present an approach that overcomes this speed-accuracy trade-off. The new methods use an efficient, state-of-the-art deep learning model to detect body parts in images, and a fast algorithm for calculating the location of these detected body parts with high accuracy. Results from the study also demonstrate that the methods can be applied across species and experimental conditions – from flies, locusts, and mice in controlled laboratory settings to herds of zebras interacting in the wild. Dr. Blair Costelloe, a co-author of the paper who studies zebras in Kenya, says: “The posture data we can now collect for the zebras using DeepPoseKit allows us to know exactly what each individual is doing in the group and how they interact with the surrounding environment. In contrast, existing technologies like GPS will reduce this complexity down to a single point in space, which limits the types of questions you can answer.”
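For readers curious about what "detect, then locate precisely" can look like in practice, here is a minimal Python sketch. It is not DeepPoseKit's code or API. It assumes that a model has already produced a per-body-part confidence map (a common design in pose estimation), and it swaps in a simple weighted centroid for the localisation step; the toolkit's own algorithm may differ. The names `subpixel_peak` and `radius` are hypothetical.

```python
import numpy as np


def subpixel_peak(confidence_map, radius=4):
    """Estimate a (row, col) peak location with sub-pixel precision.

    Illustrative assumption: a coarse argmax followed by a weighted
    centroid over a small window. This is a stand-in technique, not
    the method used by DeepPoseKit.
    """
    cmap = np.clip(np.asarray(confidence_map, dtype=float), 0.0, None)

    # Coarse step: integer pixel with the highest confidence.
    r0, c0 = np.unravel_index(np.argmax(cmap), cmap.shape)

    # Window around the coarse peak, clipped to the image bounds.
    r_lo, r_hi = max(r0 - radius, 0), min(r0 + radius + 1, cmap.shape[0])
    c_lo, c_hi = max(c0 - radius, 0), min(c0 + radius + 1, cmap.shape[1])
    window = cmap[r_lo:r_hi, c_lo:c_hi]

    total = window.sum()
    if total <= 0:
        # Flat or empty map: fall back to the coarse estimate.
        return float(r0), float(c0)

    # Fine step: confidence-weighted mean of the pixel coordinates.
    rows, cols = np.mgrid[r_lo:r_hi, c_lo:c_hi]
    return (rows * window).sum() / total, (cols * window).sum() / total


def synthetic_map(shape, centre, sigma=1.5):
    """Build a Gaussian blob to stand in for a model's output."""
    rows, cols = np.mgrid[0:shape[0], 0:shape[1]]
    d2 = (rows - centre[0]) ** 2 + (cols - centre[1]) ** 2
    return np.exp(-d2 / (2.0 * sigma ** 2))


if __name__ == "__main__":
    demo = synthetic_map((32, 48), centre=(12.3, 20.7))
    print("coarse:", np.unravel_index(np.argmax(demo), demo.shape))
    print("refined:", subpixel_peak(demo))
```

The coarse step alone can only return whole-pixel coordinates; the refinement step is what lets the estimate fall between pixels, which matters when the confidence map is smaller than the original image.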

Due to its high performance and easy-to-use software interface (the code is publicly available on GitHub, https:/

“In just a few short years deep learning has gone from being a sort of niche, hard-to-use method to one of the most democratized and widely-used software tools in the world,” says Iain Couzin, senior author on the paper who leads the Centre for the Advanced Study of Collective Behaviour at the University of Konstanz and the Department of Collective Behaviour at the Max Planck Institute of Animal Behavior. “Our hope is that we can contribute to behavioural research by developing easy-to-use, high-performance tools that anybody can use.” Tools like these are important for studying behaviour because, as Graving puts it: “They allow us to start with first principles, or ‘how is the animal moving its body through space?’, rather than subjective definitions of what constitutes a behaviour. From there we can begin to apply mathematical models to the data and develop general theories that help us to better understand how individuals and groups of animals adaptively organize their behaviour.”

###

Facts:

– Jacob M. Graving, Daniel Chae, Hemal Naik, Liang Li, Benjamin Koger, Blair R. Costelloe, Iain D. Couzin (2019) DeepPoseKit, a software toolkit for fast and robust animal pose estimation using deep learning. eLife. DOI: https:/

Link: https:/

– All authors with the exception of Daniel Chae are part of the new Cluster of Excellence “Centre for the Advanced Study of Collective Behaviour” at the University of Konstanz and the Department of Collective Behaviour, Max Planck Institute of Animal Behavior in Konstanz.

– This project received funding from the European Union’s Horizon 2020 research and innovation programme (under the Marie Sklodowska-Curie grant agreement) and the National Science Foundation.

Note to editors:

You can download a photo here:

https:/

Caption: A deep learning toolkit, called DeepPoseKit, can automatically detect animal body parts directly from images or video with high speed and accuracy – without attaching physical markers. The method can be used for animals in laboratory settings (e.g. flies and locusts) or in the wild (e.g. zebras).

Credit: Jake Graving

Media Contact

Julia Wandt
