In robotics, fluid and safe bipedal locomotion remains a core challenge. Scientists at the Beijing Institute of Technology have unveiled a perception method designed to improve the “look-and-step” behavior of bipedal robots. The work lays a foundation for robots that navigate complex environments efficiently on limited computing resources, without compromising safety or speed.

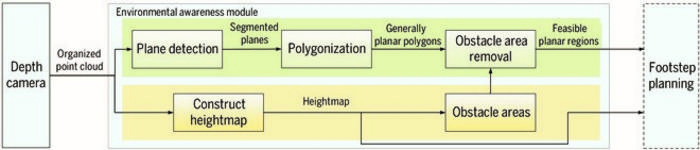

The research centers on the rapid extraction and representation of the surrounding environment. Unlike traditional approaches that require either extensive computational power or auxiliary hardware such as GPUs, the new method runs efficiently on standard central processing units (CPUs), making it practical for lightweight bipedal robots. By using a hybrid environmental representation that combines feasible planar regions with a heightmap, the researchers have engineered a perception system optimized for real-time applications.

At the heart of this advancement is a dual-subsystem framework. The first subsystem identifies feasible planar regions—essentially flat surfaces suitable for foot placement—while the second constructs a detailed heightmap to assist in precise foot trajectory planning. This dual approach ensures that robots not only identify safe landing zones but also calculate swing foot paths that avoid collisions with obstacles or uneven terrain, thereby mitigating risks of stumbles or falls during locomotion.
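To make the dual-subsystem idea concrete, here is a minimal Python sketch of what a hybrid representation could look like: a list of planar regions answers "where can the foot land?", while a heightmap answers "how high must the swing foot rise on the way there?". The class names (`PlanarRegion`, `HybridMap`), the square-patch region model, and the straight-line sampling are illustrative assumptions, not the data structures used in the paper.

```python
from dataclasses import dataclass


@dataclass
class PlanarRegion:
    """A flat patch treated as a candidate foothold (hypothetical structure)."""
    center: tuple       # (x, y, z) in metres
    normal: tuple       # unit normal of the plane
    half_extent: float  # half the side length of a square patch, in metres

    def contains(self, x, y):
        cx, cy, _ = self.center
        return abs(x - cx) <= self.half_extent and abs(y - cy) <= self.half_extent


@dataclass
class HybridMap:
    """Planar regions for foot placement plus a heightmap for swing planning."""
    regions: list
    heights: list       # 2D grid, heights[row][col] in metres
    resolution: float   # cell size in metres

    def height_at(self, x, y):
        return self.heights[int(y / self.resolution)][int(x / self.resolution)]

    def foothold_region(self, x, y):
        """Return the planar region containing (x, y), or None if there is none."""
        for region in self.regions:
            if region.contains(x, y):
                return region
        return None

    def max_height_along(self, start, goal, samples=20):
        """Highest terrain under the straight line from start to goal."""
        (x0, y0), (x1, y1) = start, goal
        return max(
            self.height_at(x0 + (x1 - x0) * i / samples,
                           y0 + (y1 - y0) * i / samples)
            for i in range(samples + 1)
        )


# Toy scene: flat ground with a 5 cm bump between the stance foot and the target.
hybrid = HybridMap(
    regions=[PlanarRegion(center=(0.45, 0.05, 0.0),
                          normal=(0.0, 0.0, 1.0),
                          half_extent=0.05)],
    heights=[[0.0, 0.0, 0.05, 0.0, 0.0]],
    resolution=0.1,
)
target = (0.45, 0.05)
region = hybrid.foothold_region(*target)
bump_height = hybrid.max_height_along((0.05, 0.05), target)
```

In this toy scene the target lies inside a planar region, so it is a valid landing spot, and the heightmap query reports the bump that the swing trajectory has to clear.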

The significance of the “look-and-step” behavior strategy lies in its emphasis on immediate responsiveness. Bipedal robots engage in a continuous loop of rapid perception followed by movement, eliminating prolonged pauses for environmental analysis. This method dramatically improves adaptability in unpredictable environments, enabling robots to adjust their footsteps dynamically and correct cumulative walking deviations—a critical requirement for autonomous navigation in real-world scenarios.
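The perceive-then-move cycle can be sketched as a simple loop. The function `look_and_step`, its callback parameters, and the one-dimensional `ToyRobot` below are hypothetical stand-ins for illustration; they are not the authors' controller. The point of the sketch is that re-perceiving before every step lets the planner absorb execution error instead of letting it accumulate.

```python
def look_and_step(perceive, plan_step, execute_step, at_goal, max_steps=50):
    """Alternate one perception update with one footstep until the goal is reached."""
    steps = 0
    while steps < max_steps:
        observation = perceive()            # look
        if at_goal(observation):
            break
        execute_step(plan_step(observation))  # step
        steps += 1
    return steps


class ToyRobot:
    """1-D robot whose steps always land 10% short (simulated walking deviation)."""

    def __init__(self):
        self.x = 0.0

    def perceive(self):
        return self.x

    def execute(self, step_length):
        self.x += 0.9 * step_length


GOAL = 1.0       # metres
MAX_STRIDE = 0.3  # metres

robot = ToyRobot()
steps_taken = look_and_step(
    perceive=robot.perceive,
    plan_step=lambda x: min(MAX_STRIDE, GOAL - x),  # replan from the measured position
    execute_step=robot.execute,
    at_goal=lambda x: abs(GOAL - x) < 0.03,
)
```

Because each footstep is planned from the freshly measured position, the final step shrinks to cover only the remaining distance, and the robot ends within tolerance of the goal despite the systematic undershoot.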

One of the major hurdles in robotic perception is the balancing act between computational efficiency and environmental detail. Existing systems frequently resort to powerful GPUs to accelerate processing, but this introduces weight and bulk that are prohibitive for nimble bipeds. The Beijing team circumvented this limitation by ingeniously exploiting the inherent structured organization within point cloud data, allowing for swift nearest neighbor searches that expedite the extraction of planar regions without additional hardware demands.
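The article does not spell out the search scheme, but the general idea behind exploiting an organized point cloud can be sketched as follows. When points are stored in the same row and column layout as the depth image that produced them, the spatial neighbors of a point are simply the adjacent grid cells, so no tree structure has to be built or queried. The helpers `grid_neighbors` and `local_normal` are illustrative assumptions, written in plain Python with no external libraries.

```python
import math


def grid_neighbors(cloud, row, col, radius=1):
    """Valid points within `radius` cells of (row, col) in an organized cloud.

    `cloud[r][c]` is an (x, y, z) tuple, or None where the sensor returned no depth.
    """
    rows, cols = len(cloud), len(cloud[0])
    found = []
    for r in range(max(0, row - radius), min(rows, row + radius + 1)):
        for c in range(max(0, col - radius), min(cols, col + radius + 1)):
            if (r, c) != (row, col) and cloud[r][c] is not None:
                found.append(cloud[r][c])
    return found


def local_normal(cloud, row, col):
    """Estimate a unit surface normal from the right and lower grid neighbors."""
    p, right, down = cloud[row][col], cloud[row][col + 1], cloud[row + 1][col]
    u = tuple(a - b for a, b in zip(right, p))
    v = tuple(a - b for a, b in zip(down, p))
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])  # cross product u x v
    length = math.sqrt(sum(k * k for k in n))
    return tuple(k / length for k in n)


# Toy organized cloud: a 3 x 3 patch of flat ground sampled every 10 cm.
cloud = [[(c * 0.1, r * 0.1, 0.0) for c in range(3)] for r in range(3)]
center_neighbors = grid_neighbors(cloud, 1, 1)
corner_neighbors = grid_neighbors(cloud, 0, 0)
normal = local_normal(cloud, 0, 0)
```

Each lookup touches a fixed number of cells regardless of cloud size, which is the kind of saving that makes plane extraction feasible on a CPU. Neighboring points with similar normals could then be grouped into planar regions.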

Efficiency aside, safety remains paramount. The perception system excludes hazardous planar surfaces that might lead to collisions between the robot’s body and environmental obstacles. By applying precise filtering criteria, it preserves the robot’s stability without sacrificing the breadth of navigable terrain. Notably, the entire perception pipeline takes approximately 0.16 seconds per frame (roughly six frames per second) on a single CPU, a testament to the method’s optimization.
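The specific filtering criteria are not given in this article, so the following is only a sketch of the kind of test such a filter might apply. It rejects a candidate region if it is tilted too steeply or if nearby terrain rises high enough to strike the robot's body. The function name `is_safe_region` and both threshold values (`max_tilt_deg`, `body_clearance`) are hypothetical and chosen purely for illustration.

```python
import math


def is_safe_region(normal, region_height, nearby_heights,
                   max_tilt_deg=15.0, body_clearance=0.30):
    """Return True if a planar region passes two illustrative safety checks.

    normal          -- (nx, ny, nz) plane normal, not necessarily unit length
    region_height   -- height of the region in metres
    nearby_heights  -- terrain heights (metres) around the region
    max_tilt_deg    -- hypothetical limit on tilt from horizontal
    body_clearance  -- hypothetical height (metres) above which terrain
                       next to the foothold could hit the robot's body
    """
    nx, ny, nz = normal
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    cos_tilt = min(1.0, abs(nz) / length)
    if math.degrees(math.acos(cos_tilt)) > max_tilt_deg:
        return False
    return all(h - region_height < body_clearance for h in nearby_heights)


flat_and_clear = is_safe_region((0.0, 0.0, 1.0), 0.0, [0.0, 0.1])
next_to_wall = is_safe_region((0.0, 0.0, 1.0), 0.0, [0.5])
too_steep = is_safe_region((0.0, 1.0, 1.0), 0.0, [])
```

Fixed thresholds like these are easy to tune for one test course but may not transfer to other terrain.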

Empirical validation involved coupling the perception system with an advanced footstep planning algorithm developed by the same research group. Through rigorous experimentation in artificially constructed environments, the integrated system demonstrated its competence in enabling bipedal robots to make rapid, safe decisions about foot placement, successfully negotiating obstacles and uneven surfaces with a high degree of reliability.

While the results are promising, the study acknowledges inherent limitations. Primarily, the system’s reliance on empirically set thresholds tailored to specific scenarios presents challenges when transitioning to varied or unforeseen terrains. These thresholds govern the sensitivity of planar region extraction and obstacle detection, and static values may not generalize well across diverse landscapes. The researchers propose future work on adaptive thresholding mechanisms that adjust parameters dynamically in real time.

Expanding beyond current achievements, the scientists envision extending their methodology to support continuous walking sequences under the look-and-step paradigm. Such an evolution would enable bipedal robots to maintain seamless locomotion over prolonged periods, adapting fluidly to evolving terrain complexities and environmental changes, thus vastly improving operational autonomy.

The implications of this work resonate beyond the lab. Efficient perception bolsters robotic applications in search and rescue, delivery, and exploration missions where environmental unpredictability is the norm. Lighter, faster-processing robots enhance mobility in cluttered or uneven spaces such as urban rubble or natural landscapes, potentially revolutionizing their utility in real-world humanitarian and industrial contexts.

Integral to this innovation is the ability to operate within stringent computational budgets, which carries profound engineering significance. By obviating the need for bulky GPUs, the overall payload of bipedal robots is reduced, improving energy efficiency and mechanical design freedom. This alignment of perception and physical constraints opens pathways for more compact, agile, and commercially viable robotic platforms.

This pioneering work is authored by a team including Chao Li, Qingqing Li, Junhang Lai, Xuechao Chen, Zhangguo Yu, and Zhihong Jiang, whose combined expertise bridges mechatronics, computer vision, and robotic locomotion. Supported by the Postdoctoral Fellowship Program of CPSF and the Beijing Natural Science Foundation, their research marks a significant stride in enabling autonomous, adaptive, and safe biped robot navigation.

Published in the April 2025 issue of Cyborg and Bionic Systems, the paper titled “Efficient Hybrid Environment Expression for Look-and-Step Behavior of Bipedal Walking” sets a new benchmark for real-time robotic perception. Its practical framework and thoughtful engineering solutions hold the promise of accelerating the deployment of next-generation bipedal robots across an array of challenging applications.

Subject of Research: Bipedal Robot Locomotion and Environmental Perception

Article Title: Efficient Hybrid Environment Expression for Look-and-Step Behavior of Bipedal Walking

News Publication Date: April 23, 2025

Web References: DOI: 10.34133/cbsystems.0244

Image Credits: Xuechao Chen, School of Mechatronic Engineering, Beijing Institute of Technology

Keywords: Applied sciences and engineering, Health and medicine, Life sciences

Tags: advancements in robotic movement safety, bipedal locomotion technology, CPU-based robotics solutions, dual-subsystem framework for robotics, efficient robot navigation systems, hybrid perception methods in robotics, lightweight bipedal robot design, look-and-step behavior enhancement, obstacle avoidance in bipedal robots, real-time environmental representation, robotics research at Beijing Institute of Technology, safe foot placement algorithms