Credit: Liang Gao, from the University of Illinois at Urbana-Champaign

WASHINGTON — There is a great deal of excitement around virtual reality (VR) headsets that display a computer-simulated world and augmented reality (AR) glasses that overlay computer-generated elements with the real world. Although AR and VR devices are starting to hit the market, they remain mostly a novelty because eye fatigue makes them uncomfortable to use for extended periods. A new type of 3D display could solve this long-standing problem by greatly improving the viewing comfort of these wearable devices.

"We want to replace currently used AR and VR optical display modules with our 3D display to get rid of eye fatigue problems," said Liang Gao, from the University of Illinois at Urbana-Champaign. "Our method could lead to a new generation of 3D displays that can be integrated into any type of AR glasses or VR headset."

Gao and Wei Cui report their new optical mapping 3D display in The Optical Society (OSA) journal Optics Letters. Measuring only 1 x 2 inches, the new display module increases viewing comfort by producing depth cues that are perceived in much the same way we see depth in the real world.

Overcoming eye fatigue

Today's VR headsets and AR glasses present two 2D images in a way that cues the viewer's brain to combine the images into the impression of a 3D scene. This type of stereoscopic display causes what is known as a vergence-accommodation conflict, which over time makes it harder for the viewer to fuse the images and causes discomfort and eye fatigue.
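To make the conflict concrete, the mismatch is commonly expressed in diopters (the reciprocal of distance in meters): the eyes converge on the apparent distance of the stereoscopic object while the lenses must still focus on the fixed optical distance of the screen. The sketch below is only an illustration of that arithmetic; the function names and the example distances are assumptions and do not come from the paper.

```python
def diopters(distance_m):
    """Convert a viewing distance in meters to diopters (1 / meters)."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m


def vergence_accommodation_mismatch(vergence_distance_m, focal_distance_m):
    """Absolute difference, in diopters, between where the eyes converge
    (apparent object distance) and where they must focus (screen distance)."""
    return abs(diopters(vergence_distance_m) - diopters(focal_distance_m))


# Hypothetical example: a virtual object rendered at 0.5 m on a headset
# whose optics place the screen image at 2 m.
mismatch = vergence_accommodation_mismatch(0.5, 2.0)
print(f"mismatch: {mismatch:.2f} D")
```

A display that supplies correct focus cues aims to drive this mismatch toward zero, since the image itself sits at or near the depth the eyes converge on.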

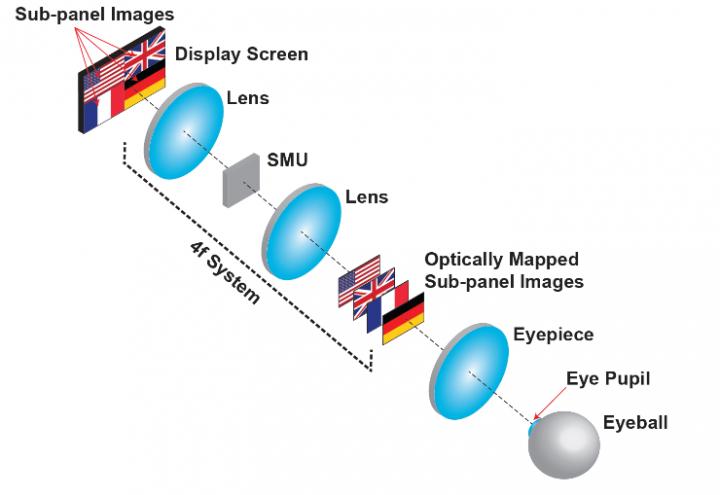

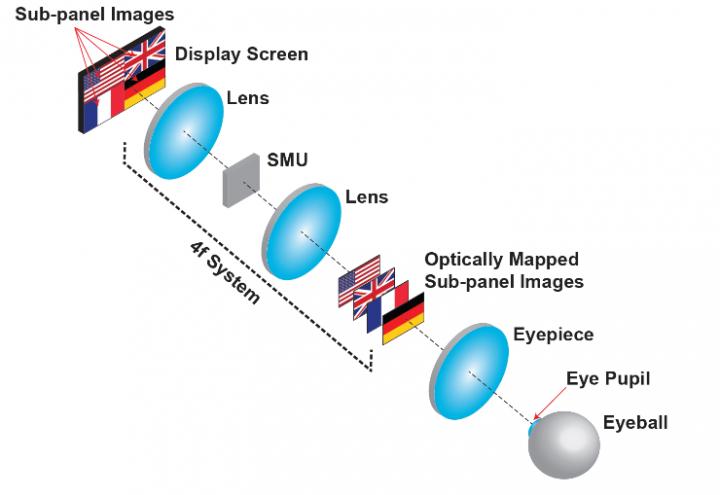

The new display presents actual 3D images using an approach called optical mapping. This is done by dividing a digital display into subpanels that each create a 2D picture. The subpanel images are shifted to different depths while the centers of all the images are aligned with one another. This makes it appear as if each image is at a different depth when a user looks through the eyepiece. The researchers also created an algorithm that blends the images, so that the depths appear continuous, creating a unified 3D image.
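The two software-side ideas in this description, splitting one panel into subpanels and blending content across neighboring depth planes, can be sketched roughly as follows. This is a minimal illustration and not the researchers' algorithm: it assumes linear depth-weighted blending, a scheme often used with multi-plane displays, and the function names, the grid layout and the plane depths are all hypothetical.

```python
def split_into_subpanels(panel, rows, cols):
    """Split a 2D list of pixel values into rows * cols equal subpanels,
    returned in row-major order."""
    height, width = len(panel), len(panel[0])
    if height % rows or width % cols:
        raise ValueError("panel size must divide evenly into subpanels")
    sub_h, sub_w = height // rows, width // cols
    return [
        [row[c * sub_w:(c + 1) * sub_w] for row in panel[r * sub_h:(r + 1) * sub_h]]
        for r in range(rows)
        for c in range(cols)
    ]


def blend_weights(depth, plane_depths):
    """Assumed linear blending: share a point's intensity between the two
    depth planes that bracket it. Depths are in diopters and must be distinct."""
    planes = sorted(plane_depths)
    weights = {d: 0.0 for d in planes}
    if depth <= planes[0]:
        weights[planes[0]] = 1.0
        return weights
    if depth >= planes[-1]:
        weights[planes[-1]] = 1.0
        return weights
    for near, far in zip(planes, planes[1:]):
        if near <= depth <= far:
            t = (depth - near) / (far - near)
            weights[near] = 1.0 - t
            weights[far] = t
            break
    return weights


# Hypothetical 4 x 4 panel split into a 2 x 2 grid of subpanels.
panel = [[4 * r + c for c in range(4)] for r in range(4)]
subpanels = split_into_subpanels(panel, 2, 2)

# A point halfway between the 1.0 D and 2.0 D planes is shared equally.
weights = blend_weights(1.5, [0.0, 1.0, 2.0, 3.0])
```

Because the weights for any point sum to one, a point's total brightness is preserved while its apparent depth moves smoothly between planes, which is the kind of continuity the blending step is meant to provide.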

The key component of the new system is a spatial multiplexing unit that axially shifts the subpanel images to their designated depths while laterally shifting the centers of the subpanel images onto the viewing axis. In the current setup, the spatial multiplexing unit is made of spatial light modulators that modify the light according to a specific algorithm developed by the researchers.

Although the approach would work with any modern display technology, the researchers used an organic light-emitting diode (OLED) display, one of the newest display technologies to be used in commercial televisions and mobile devices. The extremely high resolution available from the OLED display ensured that each subpanel contained enough pixels to create a clear image.

"People have tried methods similar to ours to create multiple plane depths, but instead of creating multiple depth images simultaneously, they changed the images very quickly," said Gao. "However, this approach comes with a trade-off in dynamic range, or level of contrast, because the duration each image is shown is very short."

Creating depth cues

The researchers tested the device by using it to display a complex scene of parked cars and placing a camera in front of the eyepiece to record what the human eye would see. They showed that the camera could focus on cars that appeared far away while the foreground remained out of focus. Similarly, the camera could be focused on the closer cars while the background appeared blurry. This test confirmed that the new display produces focal cues that create depth perception much like the way humans perceive depth in a scene. This demonstration was performed in black and white, but the researchers say the technique could also be used to produce color images, although with a reduced lateral resolution.

The researchers are now working to further reduce the system's size, weight and power consumption. "In the future, we want to replace the spatial light modulators with another optical component such as a volume holography grating," said Gao. "In addition to being smaller, these gratings don't actively consume power, which would make our device even more compact and increase its suitability for VR headsets or AR glasses."

Although the researchers don't currently have any commercial partners, they are in discussions with companies to see if the new display could be integrated into future AR and VR products.

###

Paper: W. Cui, L. Gao, "Optical Mapping Near-eye Three-dimensional Display with Correct Focus Cues," Opt. Lett., Volume 42, Issue 13, 2475-2478 (2017). DOI: 10.1364/OL.42.002475.

About Optics Letters

Optics Letters offers rapid dissemination of new results in all areas of optics with short, original, peer-reviewed communications. Optics Letters covers the latest research in optical science, including optical measurements, optical components and devices, atmospheric optics, biomedical optics, Fourier optics, integrated optics, optical processing, optoelectronics, lasers, nonlinear optics, optical storage and holography, optical coherence, polarization, quantum electronics, ultrafast optical phenomena, photonic crystals and fiber optics.

About The Optical Society

Founded in 1916, The Optical Society (OSA) is the leading professional organization for scientists, engineers, students and business leaders who fuel discoveries, shape real-life applications and accelerate achievements in the science of light. Through world-renowned publications, meetings and membership initiatives, OSA provides quality research, inspired interactions and dedicated resources for its extensive global network of optics and photonics experts. For more information, visit osa.org.

Media Contacts:

Rebecca B. Andersen

The Optical Society

[email protected]

+1 202.416.1443

Joshua Miller

The Optical Society

[email protected]

+1 202.416.1435

Related Journal Article

http://dx.doi.org/10.1364/OL.42.002475

Story Source: Materials provided by Scienmag