As immersive technology develops, the way sound is reproduced in virtual and augmented reality environments is changing quickly. A study by researchers at Sophia University in Japan examines a subtle aspect of auditory perception: how listeners judge the direction a speaker is facing from acoustic cues alone. The work adds to the scientific understanding of spatial hearing and has implications for designing virtual soundscapes that reproduce real-life listening with greater precision and realism.

The research was led by Dr. Shinya Tsuji, with Ms. Haruna Kashima and Professor Takayuki Arai of the Department of Information and Communication Sciences at Sophia University, in collaboration with researchers from NHK Science and Technology Research Laboratories. Using controlled listening experiments, the team examined which auditory cues tell a listener which way a speaker is facing. The findings, published in the May 2025 issue of Acoustical Science and Technology, indicate that the human auditory system uses a combination of loudness and spectral (frequency) cues to identify the direction a speaker faces while speaking.

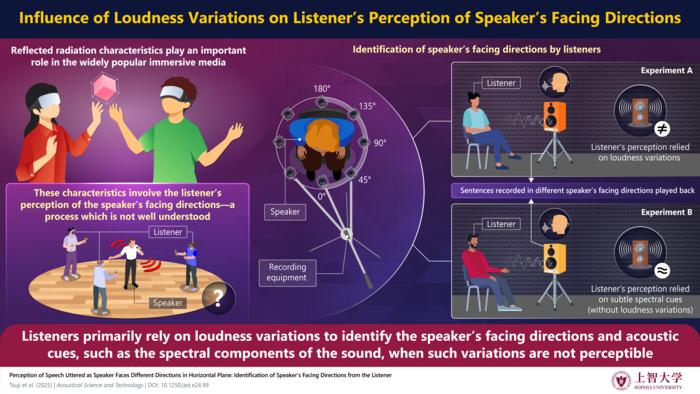

The study used two experiments. In the first, the loudness of speech recordings was manipulated to simulate the level differences associated with different facing directions; in the second, loudness was held constant so that only spectral characteristics could differ. In both, participants identified the speaker's facing direction from the sound alone. Loudness proved to be the dominant cue for judging facing direction. Even with loudness held constant, however, listeners could still infer the direction from changes in spectral content, that is, from the way the balance of energy across frequencies changes with the speaker's orientation.
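
The logic of the second experiment, removing the level difference while leaving spectral shape intact, can be sketched in a few lines. This is a minimal illustration, not the authors' procedure: it assumes RMS level as a crude stand-in for loudness, uses two synthetic two-tone signals (the hypothetical `front` and `back` stimuli) in place of recorded speech, and summarizes spectral shape with the spectral centroid.

```python
import math

SAMPLE_RATE = 8000  # Hz
N = 256             # samples; DFT bins are SAMPLE_RATE / N = 31.25 Hz apart

def tone_mix(amps_by_freq):
    """Sum of sine tones, {frequency_hz: amplitude}; a stand-in for recorded speech."""
    return [
        sum(a * math.sin(2 * math.pi * f * t / SAMPLE_RATE) for f, a in amps_by_freq.items())
        for t in range(N)
    ]

def rms(signal):
    """Root-mean-square level, used here as a crude proxy for loudness."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))

def equalize_level(signal, target_rms=0.1):
    """Scale a signal so its RMS equals target_rms; the spectral shape is unchanged."""
    gain = target_rms / rms(signal)
    return [gain * x for x in signal]

def spectral_centroid(signal, sample_rate=SAMPLE_RATE):
    """Magnitude-weighted mean frequency from a naive DFT (adequate for short signals)."""
    n = len(signal)
    weighted = total = 0.0
    for k in range(1, n // 2):  # skip DC and stop below Nyquist
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(signal))
        im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(signal))
        mag = math.hypot(re, im)
        weighted += (k * sample_rate / n) * mag
        total += mag
    return weighted / total

# Hypothetical stimuli: facing the listener (loud, bright) vs. facing away (quiet, dull).
front = tone_mix({500: 1.0, 3000: 0.8})
back = tone_mix({500: 0.5, 3000: 0.1})

front_eq = equalize_level(front)
back_eq = equalize_level(back)

print(f"RMS before: front={rms(front):.3f}, back={rms(back):.3f}")
print(f"RMS after:  front={rms(front_eq):.3f}, back={rms(back_eq):.3f}")
print(f"Centroid after equalization: front={spectral_centroid(front_eq):.0f} Hz, "
      f"back={spectral_centroid(back_eq):.0f} Hz")
```

After equalization the two signals have identical RMS, so a purely level-based judgment has nothing to work with, yet the `back` signal still has a lower centroid because its high-frequency component was weaker. A real experiment would use recorded speech and a perceptual loudness model; the naive DFT only keeps the sketch dependency-free (`numpy.fft.rfft` would be the usual choice).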


Spectral cues arise because, as a speaker turns, the filtering effect of the head, torso, and mouth shapes the sound differently at different frequencies. This frequency-dependent alteration appears in the acoustic signal as differences in harmonic content and timbre, giving listeners information about direction beyond overall intensity. The result shows that listeners' judgments of facing direction draw on the interplay between physical sound properties and auditory processing, not on volume alone.
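
This frequency-dependent filtering can be illustrated with a toy directivity model in which a talker facing away attenuates high frequencies more than low ones. The attenuation values and the (1 - cos θ)/2 interpolation are invented for illustration (hypothetical, not measurements from the study); the point is only that turning away produces both an overall level drop and a spectral tilt.

```python
import math

def rear_attenuation_db(freq_hz):
    """Hypothetical attenuation (dB) when the talker faces directly away.

    Illustrative values only: 3 dB at or below 500 Hz, plus 4 dB per octave
    above 500 Hz, capped at 20 dB. Real speech directivity must be measured.
    """
    octaves_above_500 = max(0.0, math.log2(freq_hz / 500.0))
    return min(20.0, 3.0 + 4.0 * octaves_above_500)

def directional_attenuation_db(freq_hz, facing_angle_deg):
    """Attenuation (dB, >= 0) for a listener facing_angle_deg off the talker's axis.

    0 deg: talker faces the listener (no attenuation).
    180 deg: talker faces away (full rear attenuation).
    (1 - cos(theta)) / 2 interpolates smoothly between the two.
    """
    weight = (1.0 - math.cos(math.radians(facing_angle_deg))) / 2.0
    return rear_attenuation_db(freq_hz) * weight

for angle in (0, 90, 180):
    row = {f: directional_attenuation_db(f, angle) for f in (250, 1000, 4000)}
    tilt = row[4000] - row[250]  # extra high-frequency loss: the spectral cue
    cells = ", ".join(f"{f} Hz: {a:.1f} dB down" for f, a in row.items())
    print(f"{angle:3d} deg | {cells} | tilt {tilt:.1f} dB")
```

In this toy model, equalizing the overall level (as in the loudness-controlled condition) would remove the broadband drop but leave the tilt, which is the kind of residual cue listeners appear able to use.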

Dr. Tsuji emphasized both sides of the findings: "Our study suggests that humans mainly rely on loudness to identify a speaker's facing direction. However, it can also be judged from some acoustic cues, such as the spectral component of the sound, not just loudness alone." The distinction matters in real listening conditions, where loudness also depends on factors such as distance and room acoustics, so spectral cues can serve as a complementary signal for judging orientation.

The most direct implications are for augmented reality (AR) and virtual reality (VR). These platforms rely heavily on spatial audio to create believable, immersive worlds in which what users hear must match what they see and where they are. In six-degrees-of-freedom (6DoF) environments, where a user can move and turn freely in three-dimensional space, reproducing the cues to a speaker's facing direction adds to realism and presence. As Dr. Tsuji noted, "In contents having virtual sound fields with six-degrees-of-freedom, like AR and VR, where listeners can freely appreciate sounds from various positions, the experience of human voices can be significantly enhanced using the findings from our research."
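
In a 6DoF renderer, the quantity these cues depend on is the angle between the direction a virtual talker faces and the direction from the talker to the listener, which changes whenever either one moves or turns. A minimal sketch in the horizontal plane, assuming 2-D positions and a yaw angle measured counter-clockwise from the +x axis (a hypothetical convention; engines differ):

```python
import math

def facing_angle_deg(talker_xy, talker_yaw_deg, listener_xy):
    """Angle in [0, 180] between where the talker faces and where the listener is.

    Horizontal plane only. Yaw is measured counter-clockwise from the +x axis
    (an assumed convention). 0 = talker faces the listener, 180 = faces away.
    """
    dx = listener_xy[0] - talker_xy[0]
    dy = listener_xy[1] - talker_xy[1]
    bearing = math.degrees(math.atan2(dy, dx))  # direction talker -> listener
    diff = (bearing - talker_yaw_deg) % 360.0   # wrap into [0, 360)
    return min(diff, 360.0 - diff)              # fold into [0, 180]

# A listener walks around a talker at the origin who faces +x (yaw 0).
for listener in [(2.0, 0.0), (0.0, 2.0), (-2.0, 0.0), (1.0, -1.0)]:
    print(listener, f"{facing_angle_deg((0.0, 0.0), 0.0, listener):.0f} deg")
```

A renderer could recompute this angle every frame and feed it to a direction-dependent level and filter stage; how that stage should be tuned is the kind of question perceptual data such as this study's can inform.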

The work arrives as consumer technology companies invest heavily in spatial audio to differentiate their devices and improve user engagement. Headsets such as Meta Quest 3 and Apple Vision Pro are exploring how spatially accurate voice audio can change virtual interactions, from gaming to teleconferencing. Rendering a speaker's facing direction accurately not only improves the fidelity of these interactions but can also improve accessibility for users who rely on spatial hearing cues to navigate complex sound environments.

Beyond entertainment and communication, the research could also inform assistive technologies and auditory health applications. For instance, individuals with unilateral hearing loss or auditory processing disorders may benefit from audio systems that reproduce directional cues more realistically, supporting better comprehension and spatial awareness. In virtual meeting platforms and therapeutic contexts, such as voice-based cognitive rehabilitation, the fidelity of spatial audio can also affect effectiveness and user comfort.

The study's experimental design presents controlled auditory stimuli and isolates loudness and spectral features as separate variables, which lets the researchers identify the cues listeners prioritize when judging a speaker's facing direction. Engineers and sound designers can build these parameters into the algorithms and hardware that render sound direction in virtual environments.

Sophia University, located in central Tokyo, fosters multidisciplinary research at the intersection of information science and human-computer interaction. The collaboration with NHK Science and Technology Research Laboratories highlights how fundamental acoustic research can be translated into practical applications in broadcasting and digital media, and illustrates how academic and industrial partners can advance such work together.

At the core of human interaction is speech, arguably the most essential and personal sound experienced daily. By unraveling the mechanisms that allow listeners to intuitively discern the facing direction of a speaker, this research enriches the broader narrative of human auditory perception and spatial cognition. It invites further exploration into how complex acoustic environments can be reconstructed digitally, ultimately striving to bridge the gap between real-world soundscapes and their virtual representations.

In conclusion, the work by Dr. Tsuji and his colleagues is a step toward making virtual auditory experiences more authentic and interactive. By clarifying the complementary roles of loudness and spectral cues in judging a speaker's facing direction, it provides a scientific basis for rendering voices more realistically in future VR and AR platforms. As immersive media increasingly shapes how people communicate, learn, and are entertained, findings like these help auditory realism keep pace, delivering experiences that match how human perception actually works.

Subject of Research: People

Article Title: Perception of speech uttered as speaker faces different directions in horizontal plane: Identification of speaker’s facing directions from the listener

News Publication Date: May 1, 2025

Web References: https://doi.org/10.1250/ast.e24.99

References:

Tsuji, S., Kashima, H., Arai, T., Sugimoto, T., Kinoshita, K., & Nakayama, Y. (2025). Perception of speech uttered as speaker faces different directions in horizontal plane: Identification of speaker’s facing directions from the listener. Acoustical Science and Technology, 46(3). https://doi.org/10.1250/ast.e24.99

Image Credits: Dr. Shinya Tsuji, Sophia University, Japan

Keywords

Spatial audio, auditory perception, speaker direction identification, spectral cues, loudness, virtual reality, augmented reality, immersive sound, six degrees of freedom, sound localization, human auditory processing, acoustic signals
