Credit: Zewei Cai, Jiawei Chen, Giancarlo Pedrini, Wolfgang Osten, Xiaoli Liu, and Xiang Peng

Light-field imaging records both the spatial and the angular information of light rays. The angular information enables capabilities beyond those of conventional imaging, such as viewpoint shifting, post-capture refocusing, depth sensing, and depth-of-field extension. The plenoptic camera, which adds a pinhole array or a microlens array to a conventional camera, was proposed more than a century ago. Today, microlens-array-based plenoptic cameras, such as the commercial Lytro and Raytrix products, are commonly used for light-field imaging. However, these devices face a trade-off between spatial and angular resolution: because the sensor's pixels are shared among the angular samples, the spatial resolution is generally tens to hundreds of times lower than the number of pixels used.
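As a rough illustration of this trade-off, the idealized count below assumes that every pixel behind a microlens samples a different direction, so the number of spatial samples is simply the pixel count divided by the number of angular views. The sensor size and view counts are hypothetical, and the sketch ignores practical losses.

```python
def spatial_samples(sensor_px: int, views_u: int, views_v: int) -> int:
    """Idealized spatial samples left when every pixel is assigned to one of
    views_u * views_v angular views (hypothetical plenoptic layout)."""
    return sensor_px // (views_u * views_v)


sensor_px = 4000 * 3000  # hypothetical 12-megapixel sensor
for n in (5, 10, 15):
    s = spatial_samples(sensor_px, n, n)
    # The reduction factor equals the number of angular views, n * n.
    print(f"{n}x{n} views -> {s} spatial samples ({sensor_px // s}x fewer than pixels)")
```

With 10 × 10 angular views, for example, the spatial sampling drops a hundredfold, which is the range the paragraph above describes.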

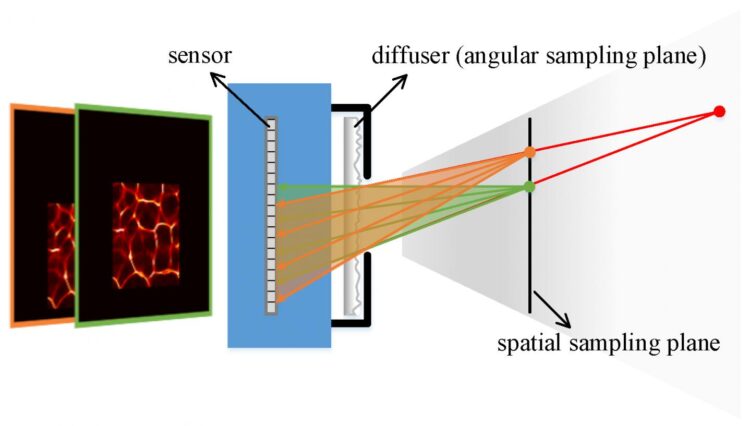

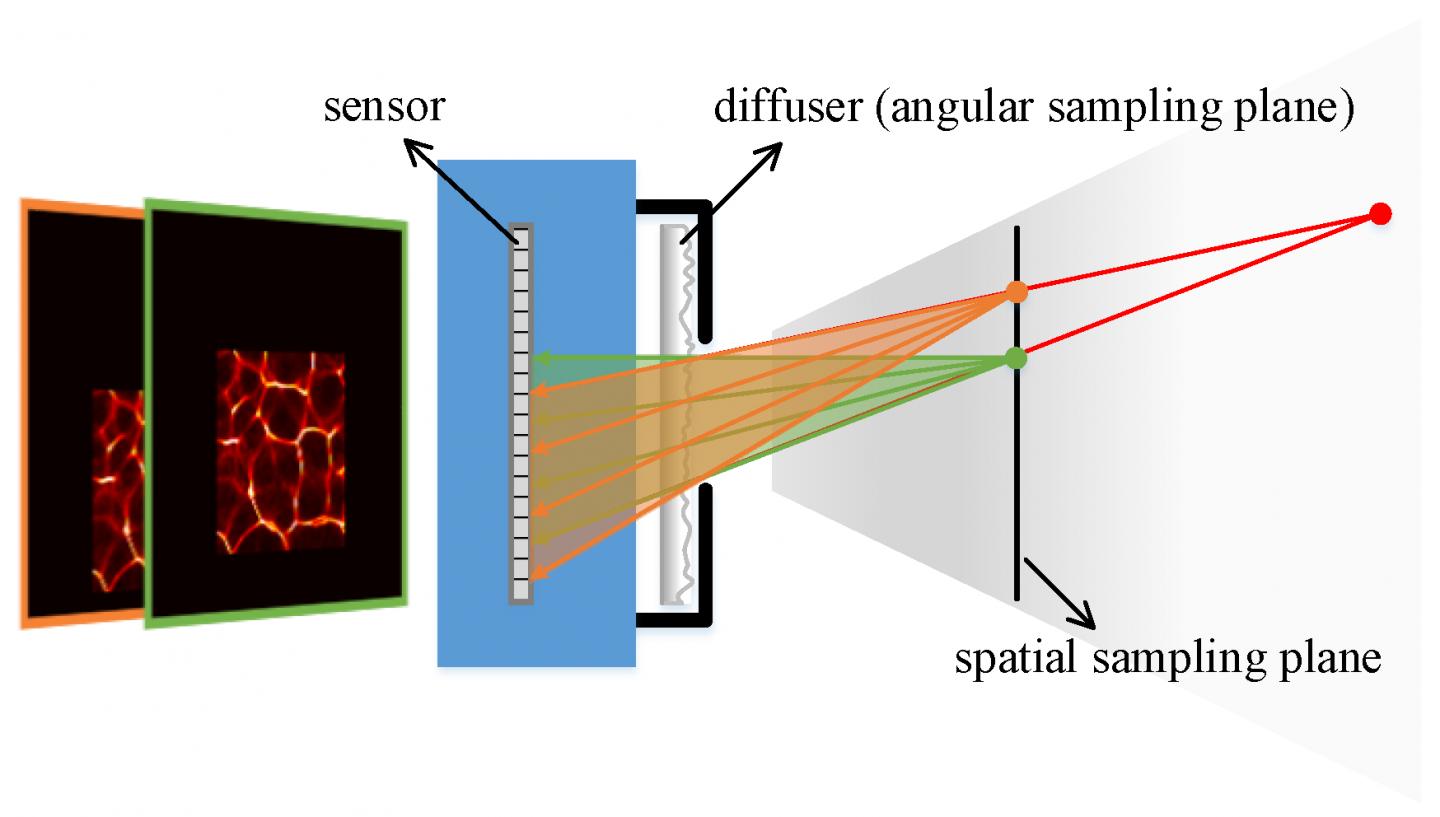

In a new paper published in Light: Science & Applications, a team of scientists from the College of Physics and Optoelectronic Engineering, Shenzhen University, China, and the Institut für Technische Optik, Universität Stuttgart, Germany, have developed “a novel modality for computational light-field imaging by using a diffuser as an encoder, without needing any lens. Through the diffuser, each sub-beam directionally emitted by a point source in the detectable field-of-view forms a distinguishable sub-image that covers a specific region on the sensor. These sub-images are combined into a unique pseudorandom pattern corresponding to the response of the system to the point source. Consequently, the system has the capability of encoding a light field incident onto the diffuser. We establish a diffuser-encoding light-field transmission model to characterize the mapping of four-dimensional light fields to two-dimensional images, where a pixel collects and integrates contributions from different sub-beams. With the aid of the optical properties of the diffuser encoding, the light-field transmission matrix can be flexibly calibrated through a point source generated pattern. As a result, light fields are computationally reconstructed with adjustable spatio-angular resolutions, avoiding the resolution limitation of the sensor.”
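The transmission model described above is linear: the recorded image is a weighted sum of sub-beam contributions, I = A·L, where each column of the transmission matrix A is the sensor pattern produced by one sub-beam. The press release does not say which reconstruction algorithm the authors use, so the toy sketch below substitutes a random sparse nonnegative matrix for the calibrated A and uses Tikhonov-regularized least squares as a stand-in solver. The grid sizes NX, NY, NU, NV are hypothetical; in this toy, choosing them is what "adjustable spatio-angular resolutions" amounts to, since the discretization is not tied to a lenslet pitch.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical discretization of the 4D light field L(x, y, u, v):
# 8 x 8 spatial samples and 3 x 3 angular samples.
NX, NY, NU, NV = 8, 8, 3, 3
n_rays = NX * NY * NU * NV  # unknown light-field samples (576)
n_pix = 32 * 32             # 2D sensor pixels (1024)

# Stand-in for a calibrated transmission matrix: column k is the sensor
# pattern of sub-beam k. Sparse random nonnegative values, NOT a physical
# diffuser model and NOT the authors' calibration procedure.
A = rng.random((n_pix, n_rays)) * (rng.random((n_pix, n_rays)) < 0.05)

# Ground-truth light field, flattened to one vector.
L_true = rng.random((NX, NY, NU, NV))

# Forward model: each pixel integrates contributions from many sub-beams,
# plus a small amount of additive sensor noise.
I = A @ L_true.ravel() + 1e-3 * rng.standard_normal(n_pix)

# Tikhonov-regularized least squares: minimize ||A L - I||^2 + lam * ||L||^2.
lam = 1e-3
L_hat = np.linalg.solve(A.T @ A + lam * np.eye(n_rays), A.T @ I)
L_hat = L_hat.reshape(NX, NY, NU, NV)

rel_err = np.linalg.norm(L_hat - L_true) / np.linalg.norm(L_true)
print(f"relative reconstruction error: {rel_err:.4f}")
```

In a real system the columns of A would come from calibration rather than a random generator, and a large light field would call for an iterative solver instead of forming A.T @ A explicitly.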

The team built an experimental system consisting only of a diffuser and a sensor. They demonstrated it on both distributed point objects and extended (area) objects, showing that the performance of the computational approach depends on the object. They further analyzed how performance varies with the spatio-angular sampling and with the measured object. The scientists then summarized their approach:

“The improvement of the proposed methodology over the previous work on diffuser-encoding light-field imaging mainly lies in two aspects. One is that our imaging modality is lensless and thus is compact and free of aberration; the other is that the system calibration and decoupling reconstruction become simple and flexible since only one pattern generated by a point source is required.”

“Based on this single-shot lensless light-field imaging modality, light rays, viewpoints, and focal depths can be manipulated and the occlusion problem can be tackled to some extent. This allows further investigation of the intrinsic mechanism of the light-field propagation through the diffuser. It is also possible to transform the diffuser-encoding light-field representation into the Wigner phase space so that the diffraction effect introduced by the internal tiny structure of the diffuser can be taken into account and lensless light-field microscopy through diffuser encoding may be developed,” the scientists forecast.

###

Media Contact

Xiaoli Liu


Related Journal Article

http://dx.