Credit: Lianlin Li, Ya Shuang, Qian Ma, Haoyang Li, Hanting Zhao, Menglin Wei, Che Liu, Chenglong Hao, Cheng-Wei Qiu, and Tie Jun Cui

The Internet of Things (IoT) and cyber-physical systems have opened up possibilities for smart cities and smart homes, and are changing the way people live. In this smart era, there is growing demand to remotely monitor people in daily life using radio-frequency probe signals. However, conventional sensing systems can hardly be deployed in real-world settings, since they typically require subjects either to cooperate deliberately or to carry an active wireless device or identification tag. Additionally, existing sensing systems are not adaptive or programmable for specific tasks. Hence, they are far from efficient in many respects, from time to energy consumption.

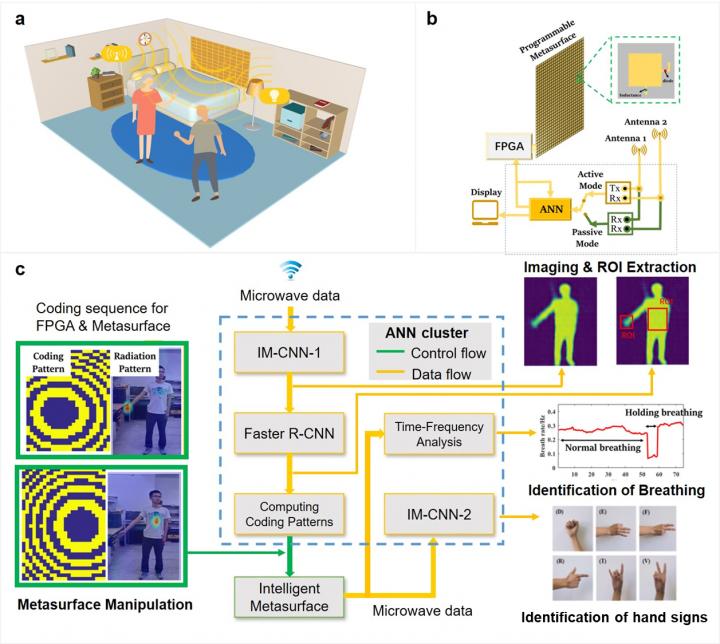

In a new paper published in Light: Science & Applications, scientists from the State Key Laboratory of Advanced Optical Communication Systems and Networks, Department of Electronics, Peking University, China, the State Key Laboratory of Millimeter Waves, Southeast University, China, and co-workers developed an AI-driven smart metasurface for jointly controlling electromagnetic (EM) waves at the physical level and the EM data flux in the digital pipeline. Based on the metasurface, they designed an inexpensive intelligent EM “camera” that performs robustly in instantaneous in-situ imaging of a full scene and in adaptive recognition of the hand signs and vital signs of multiple non-cooperative people. More interestingly, the EM camera works very well even when it is passively excited by the stray 2.4 GHz Wi-Fi signals that are ubiquitous in daily life. As such, the intelligent camera allows us to remotely “see” what people are doing, monitor how their physiological states change, and “hear” what people are saying without deploying any acoustic sensors, even when these people are non-cooperative and behind obstacles. The reported method and technique will open new avenues for future smart cities, smart homes, human-device interactive interfaces, health monitoring, and safety screening, without causing visual privacy problems.

The intelligent EM camera is centered around a smart metasurface, i.e., a programmable metasurface empowered with a cluster of artificial neural networks (ANNs). The metasurface can be manipulated to generate the radiation patterns required by different sensing tasks, from data acquisition to imaging to automatic recognition. A single device can thus support a succession of different sensing tasks in real time. The scientists summarize the operational principle of their camera:

“We design a large-aperture programmable coding metasurface for three purposes in one: (1) to perform in-situ high-resolution imaging of multiple people in a full-view scene; (2) to rapidly focus EM fields (including ambient stray Wi-Fi signals) to selected local spots and avoid undesired interferences from the body trunk and ambient environment; and (3) to monitor the local body signs and vital signs of multiple non-cooperative people in real-world settings by instantly scanning the local body parts of interest.”

“Since the switching rate of the metasurface is remarkably faster than that of body changes (vital signs and hand signs) by a factor of ~ , the number of people monitored can in principle be very large,” they added.
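The focusing step in purpose (2) of the quote above can be illustrated with a textbook phase-compensation rule. The following is a minimal sketch only, not the authors' ANN-driven design: it assumes a two-state (1-bit) element, a free-space point source, and equal-amplitude paths, none of which the article specifies. The grid size, element pitch, source position, and focal spot are hypothetical values chosen for illustration.

```python
import cmath
import math

C = 299_792_458.0             # speed of light, m/s
FREQ_HZ = 2.4e9               # Wi-Fi band mentioned in the article
WAVELENGTH = C / FREQ_HZ
K = 2 * math.pi / WAVELENGTH  # free-space wavenumber, rad/m


def element_positions(n_side, pitch):
    """Centers of an n_side x n_side grid in the z = 0 plane (hypothetical layout)."""
    offset = (n_side - 1) / 2
    return [((i - offset) * pitch, (j - offset) * pitch, 0.0)
            for i in range(n_side) for j in range(n_side)]


def one_bit_focus_codes(elements, source, focus):
    """Choose state 0 (no shift) or 1 (pi shift) per element to focus at `focus`."""
    codes = []
    for p in elements:
        path = math.dist(source, p) + math.dist(p, focus)
        # Phase accumulated along source -> element -> focus, wrapped to [0, 2*pi)
        phase = (K * path) % (2 * math.pi)
        # Pick the state whose contribution lands within 90 degrees of zero phase
        codes.append(0 if math.cos(phase) >= 0 else 1)
    return codes


def field_magnitude(elements, codes, source, point):
    """Magnitude of the summed element contributions at `point`.

    Simplification: unit amplitude per path, no 1/r decay or element pattern.
    """
    total = 0j
    for p, bit in zip(elements, codes):
        path = math.dist(source, p) + math.dist(p, point)
        total += cmath.exp(-1j * K * path + 1j * math.pi * bit)
    return abs(total)


if __name__ == "__main__":
    elements = element_positions(16, WAVELENGTH / 2)  # hypothetical 16 x 16 panel
    source = (0.0, 0.0, 2.0)                          # hypothetical Wi-Fi source
    focus = (0.3, 0.2, 1.0)                           # hypothetical body spot
    codes = one_bit_focus_codes(elements, source, focus)
    flat = [0] * len(elements)                        # all elements in state 0
    print("focused:", field_magnitude(elements, codes, source, focus))
    print("uncoded:", field_magnitude(elements, flat, source, focus))
```

With this rule, every element's contribution at the focal spot has a non-negative real part in the simplified model, so the contributions add rather than cancel. Re-running `one_bit_focus_codes` with a different `focus` corresponds to the rapid scanning of local body parts described in the quote.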

“The presented technique can be used not only to monitor the notable or non-notable movements of non-cooperative people in the real world, but also to help people with profound disabilities remotely send commands to devices using body language. This breakthrough could open a new avenue for future smart cities, smart homes, human-device interactive interfaces, health monitoring, and safety screening without causing privacy issues,” the scientists forecast.

###

This research received funding from the National Key Research and Development Program of China, the National Natural Science Foundation of China, and the 111 Project.

Media Contact

Lianlin Li

Related Journal Article

http://dx.