Cambridge, MA – Transformer-based Large Language Models (LLMs) are best known for natural language understanding, reasoning, and text generation, as well as for multimodal capabilities involving text and imaging data. Proprietary models such as ChatGPT and Claude, and open-source models such as Llama and Mixtral, have achieved state-of-the-art (SOTA) performance in most text-based tasks. In chemistry and biology, transformers and diffusion models have demonstrated exceptional performance in tasks such as protein structure prediction and molecular generation.

However, little progress has been made on complex tasks such as building longitudinal world models or body models of complex biological organisms. Learning biology over time requires highly multimodal systems that can generalize across many data types, including genomics (DNA), epigenetics (methylation and acetylation), transcriptomics (RNA), proteomics (proteins), and signaling pathways, as well as cellular, tissue, organ, and system-level organization and how it changes over the life of the organism. Validating the output of such multimodal, multi-omics systems would require experiments in multiple species.

In 2022, to explore the potential of multimodal transformers and diffusion models for learning longitudinal multi-omics and developing body world models, Insilico Medicine started working on the PreciousGPT series (www.insilico.com/precious). The concept of multimodal transformers for aging research was first proposed by Alex Zhavoronkov at the Gordon Research Conference (GRC) on Systems Aging in May 2022. Precious1GPT is a dual-transformer model that uses methylation and transcriptomic data for aging biomarker development and target discovery. Precious2GPT (in review) uses a diffusion model on multi-omics data for synthetic data generation and multiple drug discovery tasks.

Today, we are launching Precious3GPT, the first multi-omics, multi-species, multi-tissue, multimodal transformer model for aging research and drug discovery. It is trained on biomedical text data and on multiple data types from mice, rats, monkeys, and humans, including transcriptomics, methylation, proteomics, and laboratory blood tests.

Precious3GPT is our most capable model for aging research so far. Unlike previous Precious-series models, Precious3GPT is a collaborative effort. We worked with Vadim Gladyshev and Albert Ying at Harvard to develop the concept, and we are making the model available to the community via Hugging Face and GitHub, along with a preprint describing the model and its initial capabilities. As the community starts using the model, we plan to improve it and add further features and capabilities.

Built on novel tokenization logic, Precious3GPT implements a genuinely multimodal learning process over a large collection of more than 2 million data points from public omics datasets, as well as biomedical text data and knowledge graphs. This enables tissue-specific age prediction, simulated biomedical experiments, compound effect transfer, and more, all prompted in natural language, much like an everyday conversation.

Some of the geroprotector research results produced by Precious3GPT were validated experimentally, including in experiments performed by LifeStar 1, Insilico’s sixth-generation automated AI-driven robotics laboratory.

“The aging research field is focused on developing aging clocks using a variety of data types and then using these clocks to test geroprotective compounds. With multimodal, multi-species transformers trained on multiple data types simultaneously, we can replace many of these clocks with a single model capable of performing basic target and drug discovery tasks as well as age prediction. Like the One Ring in The Lord of the Rings, Precious3GPT can be the model that combines many aging research and drug discovery tasks, unifying and surpassing the capabilities of individual aging clocks,” said Alex Zhavoronkov, PhD, founder and CEO of Insilico Medicine. “Another important aspect of this announcement is that the Precious3GPT model was developed and tested entirely by Insilico’s AI R&D Center in the Middle East, demonstrating the growing capabilities of the region in AI, aging research, and drug discovery. One of the goals of this project is to unite countries in the MENA region and around the world to work in peace to extend healthy, productive, and sustainable life for everyone on the planet.”

“Using Precious3GPT, you can find compounds that work for different species or affect multiple tissues, or that are capable of treating multiple diseases just by asking questions,” says Dr Khadija Alawi, Insilico’s senior biologist, based in the company’s MENA AI R&D Center, where this technology was developed. “You can also compare the compounds generated for mice and humans and then take a deeper look at the compounds that would work in both species and follow up with in vivo experiments.”

“By enabling precise biological age prediction across tissues, species, and omic data types, our Precious GPT series can facilitate the development of more personalized and effective treatments,” says Fedor Galkin, head of the Precious project, based in the company’s MENA AI R&D Center, where this technology was developed. “For the benefit of researchers and everyone else, we have decided to open-source the latest Precious3GPT in the near future, making the ecosystem more collaborative and transparent.”

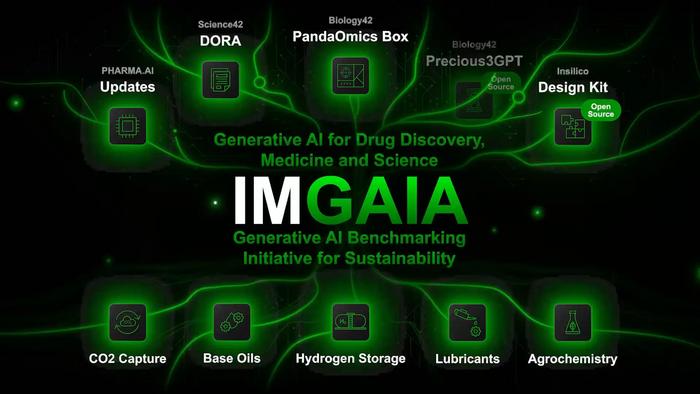

Precious3GPT was announced as part of the Insilico Medicine Generative AI Action (IMGAIA) for Science and Sustainability. The release also features the Draft Outline Research Assistant (DORA), a multi-AI-agent research tool capable of writing scientific articles. The tool was used to generate this press release and the associated research papers. Precious3GPT will connect seamlessly with DORA for automated aging research studies. During the IMGAIA event, Insilico Medicine also announced its Generative AI Benchmarking Initiative for CO2 capture, hydrogen storage, sustainable lubricants, and sustainable agriculture.

Founded in 2014, Insilico Medicine is a pioneer in generative AI for drug discovery and development. Insilico first described the concept of using generative AI to design novel molecules in a peer-reviewed journal in 2016, which laid the foundation for the commercially available Pharma.AI platform spanning biology, chemistry, and clinical development. Powered by Pharma.AI, Insilico has nominated 18 preclinical candidates in its comprehensive portfolio of over 30 assets since 2021 and has received IND approval for 7 molecules. Novel targets identified using AI and molecules generated using generative AI are in two Phase II human clinical trials. Recently, the company published a paper in Nature Biotechnology presenting the entire R&D journey of its lead pipeline drug, INS018_055, from AI algorithms to Phase II clinical trials.

About Insilico Medicine

Insilico Medicine, a global clinical stage biotechnology company powered by generative AI, is connecting biology, chemistry, and clinical trials analysis using next-generation AI systems. The company has developed AI platforms that utilize deep generative models, reinforcement learning, transformers, and other modern machine learning techniques for novel target discovery and the generation of novel molecular structures with desired properties. Insilico Medicine is developing breakthrough solutions to discover and develop innovative drugs for cancer, fibrosis, immunity, central nervous system diseases, infectious diseases, autoimmune diseases, and aging-related diseases. www.insilico.com