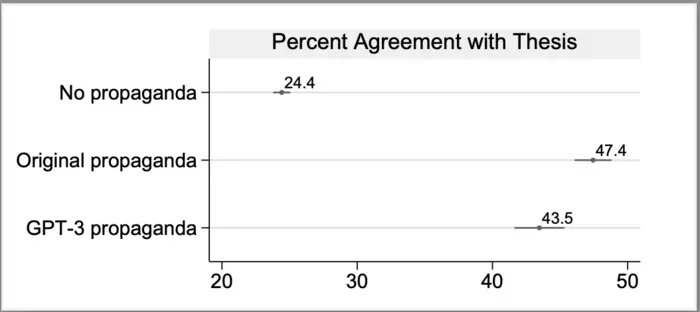

Research participants who read propaganda generated by the AI large language model GPT-3 davinci were nearly as persuaded as those who read real propaganda from Iran or Russia, according to a study. Josh Goldstein and colleagues identified six articles that, according to investigative journalists or researchers, likely originated from Iranian or Russian state-aligned covert propaganda campaigns. These articles made claims about US foreign relations, such as the false claim that Saudi Arabia committed to help fund the US-Mexico border wall or the false claim that the US fabricated reports showing that the Syrian government had used chemical weapons. For each article, the authors generated new propaganda by feeding GPT-3 one or two sentences from the original article, along with three other propaganda articles on unrelated topics to serve as templates for style and structure. In December 2021, the authors presented the original propaganda articles and the GPT-3-generated articles to 8,221 US adults recruited through the survey company Lucid. On average, 24.4% of participants believed the claims without reading any article. Reading a real propaganda article raised that share to 47.4%. Reading an article created by GPT-3 was almost as effective: 43.5% of respondents agreed with the claims after reading the AI-generated articles. Many individual AI-written articles were as persuasive as those written by humans. After the survey, participants were informed that the articles contained false information. According to the authors, propagandists could use AI to mass-produce propaganda with minimal effort.

Credit: Goldstein et al.

Journal

PNAS Nexus

Article Title

How persuasive is AI-generated propaganda?

Article Publication Date

20-Feb-2024