In the relentless pursuit of overcoming the bandwidth limitations of today's optical communication networks, a significant advance has emerged from multimode fiber (MMF) technology. As artificial intelligence, big data analytics, and cloud computing drive explosive growth in data traffic, the capacity limits of conventional single-mode fiber (SMF) systems are becoming a critical bottleneck. To address this challenge, a research team led by Professor Jürgen Czarske at the Chair of Measurement and Sensor System Techniques (MST) has developed a groundbreaking method for real-time mode decomposition in MMF communication systems. The approach runs a deep learning engine on an FPGA, delivering high speed and low power consumption while maintaining high reconstruction accuracy.

The foundation of this breakthrough lies in space-division multiplexing (SDM), a technique that exploits the ability of a multimode fiber to carry multiple spatial modes simultaneously. Whereas single-mode fibers transmit data through a single spatial channel, MMFs can carry data on several orthogonal transverse modes at once, which in principle multiplies the transmission capacity by the number of usable modes. During propagation, however, random mode coupling redistributes power and phase among the modes. The result is an intricate speckle pattern at the fiber output that scrambles the transmitted signals and makes accurate mode recovery with traditional detection methods challenging. Mode demultiplexing therefore remains an imposing hurdle, not least because of the heavy computational demands of conventional digital signal processing (DSP) algorithms.
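In the lossless case, random mode coupling is often modeled as a unitary transfer matrix acting on the vector of complex mode coefficients. The sketch below is a minimal illustration under that assumption (no loss, no mode-dependent loss); the helpers `random_unitary`, `propagate`, and `mode_powers` are hypothetical names, not code from the study. It shows how coupling scrambles the distribution of power among six modes while conserving the total.

```python
import random


def random_unitary(n, seed=0):
    """Random n x n unitary matrix built by Gram-Schmidt orthonormalisation of
    complex Gaussian vectors; a stand-in for lossless random mode coupling."""
    rng = random.Random(seed)
    columns = []
    for _ in range(n):
        v = [complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(n)]
        for q in columns:
            # Remove the component of v along the already accepted column q.
            proj = sum(qi.conjugate() * vi for qi, vi in zip(q, v))
            v = [vi - proj * qi for vi, qi in zip(v, q)]
        norm = sum(abs(vi) ** 2 for vi in v) ** 0.5
        columns.append([vi / norm for vi in v])
    # Row-major matrix with U[i][j] = columns[j][i].
    return [[columns[j][i] for j in range(n)] for i in range(n)]


def propagate(U, c_in):
    """Output mode coefficients c_out = U @ c_in."""
    return [sum(U[i][j] * c_in[j] for j in range(len(c_in))) for i in range(len(U))]


def mode_powers(c):
    """Power carried by each mode, |c_n|^2."""
    return [abs(x) ** 2 for x in c]


U = random_unitary(6, seed=1)
c_in = [1, 0, 0, 0, 0, 0]              # all power launched into the fundamental mode
c_out = propagate(U, c_in)
print([round(p, 3) for p in mode_powers(c_out)])  # power is spread across the modes
print(round(sum(mode_powers(c_out)), 6))          # 1.0: total power is conserved
```

Real fibers also exhibit loss and mode-dependent loss, which a purely unitary model ignores.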

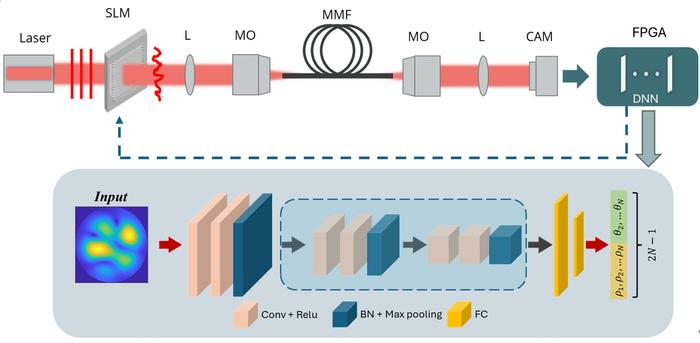

Enter the FPGA-accelerated mode decomposition engine, a solution crafted to tackle this complexity with both speed and precision. At its core, the system uses a customized convolutional neural network (CNN), trained on extensive synthetic datasets, that predicts the amplitude and relative phase of each mode directly from a single intensity image captured at the fiber output. This sidesteps coherent detection, which traditionally requires complex hardware and significant power. With neural inference, mode decomposition becomes a pattern-recognition task handled efficiently by deep learning.
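To illustrate what one synthetic training sample might look like, the sketch below assumes a hypothetical basis of six Hermite-Gaussian modes (the eigenmodes of an idealized parabolic graded-index profile) in place of the study's actual fiber modes, and a 32 x 32 camera grid. Each sample pairs an intensity image |sum_n c_n psi_n|^2 with the amplitudes and phases that generated it, with phases referenced to the first mode. The functions `mode_profiles` and `synthetic_sample` are illustrative names, not the team's code.

```python
import cmath
import math
import random

# Hypothetical stand-in basis: the first six Hermite-Gaussian modes HG_mn.
MODES = [(0, 0), (1, 0), (0, 1), (2, 0), (1, 1), (0, 2)]


def hermite(order, x):
    """Physicists' Hermite polynomials H_0, H_1, H_2."""
    return (1.0, 2.0 * x, 4.0 * x * x - 2.0)[order]


def mode_profiles(size=32, extent=3.0, waist=1.0):
    """Real-valued mode fields sampled on a size x size grid, each normalised
    so that the sum of squared pixel values equals 1."""
    coords = [-extent + 2 * extent * k / (size - 1) for k in range(size)]
    profiles = []
    for m, n in MODES:
        field = [[hermite(m, math.sqrt(2) * x / waist)
                  * hermite(n, math.sqrt(2) * y / waist)
                  * math.exp(-(x * x + y * y) / waist ** 2)
                  for x in coords] for y in coords]
        norm = math.sqrt(sum(v * v for row in field for v in row))
        profiles.append([[v / norm for v in row] for row in field])
    return profiles


def synthetic_sample(profiles, rng):
    """Random mode superposition -> (intensity image, amplitudes, phases)."""
    amps = [rng.random() for _ in profiles]
    total = math.sqrt(sum(a * a for a in amps))
    amps = [a / total for a in amps]                      # mode powers sum to 1
    phases = [0.0] + [rng.uniform(-math.pi, math.pi) for _ in profiles[1:]]
    coeffs = [a * cmath.exp(1j * p) for a, p in zip(amps, phases)]
    size = len(profiles[0])
    image = [[abs(sum(c * prof[r][col] for c, prof in zip(coeffs, profiles))) ** 2
              for col in range(size)] for r in range(size)]
    return image, amps, phases


rng = random.Random(0)
profiles = mode_profiles()
image, amps, phases = synthetic_sample(profiles, rng)
```

A CNN would then be trained to map `image` to `amps` and `phases`; the network architecture is not described in this article and is not sketched here.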

Implementing this CNN on a field-programmable gate array (FPGA) marks a vital innovation in energy-efficient, high-throughput signal processing. FPGAs offer a unique balance of configurability and low power consumption, enabling real-time neural network inference at speeds that conventional graphics processing units (GPUs) can match only at a much higher energy cost. The research team reports an inference throughput exceeding 100 frames per second at a power consumption of only 2.4 watts. This compares favorably with GPU-based approaches, which can draw tens of watts for similar tasks, and makes the technology well suited for practical deployment in industrial and medical applications where energy and thermal budgets are tight.
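The reported figures translate into an energy budget per inference through E = P / f. The sketch below is simple arithmetic on the article's numbers; the 30 W GPU figure is a hypothetical placeholder picked from the "tens of watts" range, not a measured value.

```python
def energy_per_frame_mj(power_w, frames_per_s):
    """Energy per inference in millijoules: E = P / f."""
    return 1e3 * power_w / frames_per_s


fpga_mj = energy_per_frame_mj(2.4, 100)   # 24.0 mJ; an upper bound, since the rate exceeds 100 fps
gpu_mj = energy_per_frame_mj(30.0, 100)   # 300.0 mJ for a hypothetical 30 W GPU at the same rate
print(fpga_mj, gpu_mj, gpu_mj / fpga_mj)  # the ratio is 12.5 under these assumptions
```

Because the FPGA throughput is reported as exceeding 100 frames per second, the true energy per frame is at most about 24 mJ.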

Experimental validation of the concept took place within a meticulously designed optical test bench. This setup combines a spatial light modulator (SLM) that synthesizes tailored superpositions of spatial modes, a precision six-axis fiber coupling stage enabling controlled mode excitation, and a high-sensitivity infrared camera that captures the resulting speckle patterns after transmission through the MMF. The captured intensity images are streamed in real time to the on-board FPGA, where the CNN inference operates to reconstruct the complex field of up to six spatial modes. Empirical results showcase reconstruction fidelities surpassing 97%, underscoring the method’s reliability and robustness under realistic experimental conditions.
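The article does not state how reconstruction fidelity is defined. One common choice in mode decomposition work, assumed here, is the Pearson correlation coefficient between the measured intensity image and the image re-synthesized from the predicted mode coefficients. A minimal sketch, with the hypothetical helper `correlation_fidelity`:

```python
import math


def correlation_fidelity(measured, reconstructed):
    """Pearson correlation coefficient between two intensity images
    given as equally shaped lists of rows."""
    a = [v for row in measured for v in row]
    b = [v for row in reconstructed for v in row]
    if len(a) != len(b):
        raise ValueError("images must have the same shape")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation is undefined for a constant image")
    return cov / math.sqrt(var_a * var_b)


measured = [[1.0, 2.0], [3.0, 4.0]]
reconstructed = [[1.1, 1.9], [3.2, 3.9]]
print(correlation_fidelity(measured, reconstructed))
```

Under this assumed definition, the reported result corresponds to correlation values above 0.97. The coefficient is insensitive to an overall brightness scale, which is convenient when camera exposure varies.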

A key innovation behind this achievement is the network's ability to resolve the notorious phase ambiguity of intensity-only measurements. Because a camera records only intensity, the absolute (global) phase of the field is lost, so a neural network trained solely on intensity patterns faces a global phase uncertainty. Left unresolved, this uncertainty produces ambiguous outputs that undermine the physical relevance of the reconstructed fields. The team's strategy uses the relative phases of the higher-order modes to remove this global phase ambiguity. The output is therefore unique and physically meaningful even as the global phase drifts unpredictably, which improves system stability and interpretability.
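A minimal sketch of why the ambiguity arises and of one way to remove it: multiplying every mode coefficient by the same phase factor leaves the intensity unchanged, whereas expressing each phase relative to a reference mode yields a label that does not drift. Choosing mode 0 as the reference is an assumption for illustration; the exact formulation used by the team is not given here, and the helpers `canonical_phases` and `intensity` are hypothetical.

```python
import cmath
import math


def canonical_phases(coeffs, ref=0):
    """Phase of every mode relative to mode `ref`, wrapped to [-pi, pi].
    Undefined if the reference mode has zero amplitude."""
    ref_phase = cmath.phase(coeffs[ref])
    rel = []
    for c in coeffs:
        d = cmath.phase(c) - ref_phase
        rel.append(math.atan2(math.sin(d), math.cos(d)))  # wrap the difference
    return rel


def intensity(coeffs, mode_values):
    """Intensity |sum_n c_n psi_n|^2 at one pixel, given each mode's real field value there."""
    return abs(sum(c * v for c, v in zip(coeffs, mode_values))) ** 2


coeffs = [0.8, 0.5 * cmath.exp(1.0j), 0.3 * cmath.exp(-2.0j)]
drifted = [c * cmath.exp(0.7j) for c in coeffs]  # same field, global phase shifted by 0.7 rad
pixel = [0.9, -0.4, 0.25]                        # hypothetical mode values at one pixel

print(intensity(coeffs, pixel), intensity(drifted, pixel))  # equal up to rounding
print(cmath.phase(coeffs[1]), cmath.phase(drifted[1]))      # absolute phases differ
print(canonical_phases(coeffs), canonical_phases(drifted))  # relative phases agree
```

Training the network against relative phases rather than absolute ones gives each intensity image a single target, which is the property the paragraph above describes.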

Beyond fast, high-fidelity mode recovery, implementing the approach on an FPGA makes it highly versatile to integrate. The compact form factor and reconfigurability of FPGAs allow these engines to be embedded directly into optical communication hardware, medical imaging devices, or fiber sensor systems. Such embedded implementations pave the way for next-generation closed-loop adaptive optics, where rapid feedback on the optical field can substantially improve performance. Likewise, ultra-dense SDM links built on this technology promise to push fiber network throughput further, meeting ever-growing data demands with scalable hardware.

The implications of this research extend into several cutting-edge technology domains. For fiber-optic sensing applications, where precise phase information is critical for detecting subtle environmental changes, FPGA-accelerated mode decomposition could enable vibration-tolerant, low-latency interrogation schemes. In biomedical optics, particularly endoscopic imaging, the method's fast and energy-efficient phase retrieval could improve imaging clarity and speed, enabling real-time diagnostic procedures that were previously limited by slow computational algorithms or bulky equipment. Taken together, this intersection of advanced photonics and embedded machine learning represents a paradigm shift with broad societal relevance.

At its essence, this work exemplifies how deep neural networks, when thoughtfully integrated with hardware accelerators like FPGAs, can transcend traditional limitations of optical signal processing. The synergy between synthetic data-driven training and physically informed network design not only accelerates computation but also grounds the reconstructions in realistic optical physics. This balance is critical for translating laboratory successes into practical solutions capable of redefining the infrastructure of global communications and sensing architectures.

The study, entitled “FPGA-accelerated mode decomposition for multimode fiber-based communication,” was carried out by Professor Czarske’s team with co-first authors doctoral candidate Qian Zhang and graduate student Yuedi Zhang. Published in the distinguished journal Light: Advanced Manufacturing, this work not only opens pathways toward next-generation optical fiber technologies but also exemplifies the fertile convergence of photonic engineering and machine learning acceleration.

As data traffic continues its inexorable ascent, innovations like this FPGA-accelerated mode decomposition engine will be pivotal in ensuring that the communications backbone of the digital age remains agile, scalable, and efficient. With the ability to overcome longstanding computational barriers and integrate seamlessly into compact devices, this technology stands poised to transform both how we transmit information and how we interface with the optical domain in real time.

Subject of Research: FPGA-accelerated deep learning for real-time mode decomposition in multimode fiber communications

Article Title: FPGA-accelerated mode decomposition for multimode fiber-based communication

Web References:

10.37188/lam.2025.031

Image Credits: Qian Zhang, Yuedi Zhang et al.

Keywords

Multimode fiber, space-division multiplexing, mode decomposition, convolutional neural network, FPGA acceleration, optical communications, phase retrieval, deep learning, real-time signal processing, spatial light modulator, speckle pattern analysis, energy-efficient computing

Tags: artificial intelligence in fiber optics, big data analytics for telecommunications, deep learning in multimode fiber, energy-efficient communication systems, FPGA-accelerated AI in communications, high reconstruction accuracy in data transmission, multimode fiber technology advancements, next-generation optical communication solutions, optical communication research and innovation, overcoming bandwidth limitations in optical networks, real-time mode decomposition methods, space-division multiplexing techniques