New image inpainting technique accurately predicts missing image regions

Credit: Hiya Roy et al.

Image inpainting is a computer vision technique in which pixels missing from an image are filled in. It is often used to remove unwanted objects from an image or to recreate missing regions of occluded images. Inpainting is a common tool for predicting missing image data, but it’s challenging to synthesize the missing pixels in a realistic and coherent way.

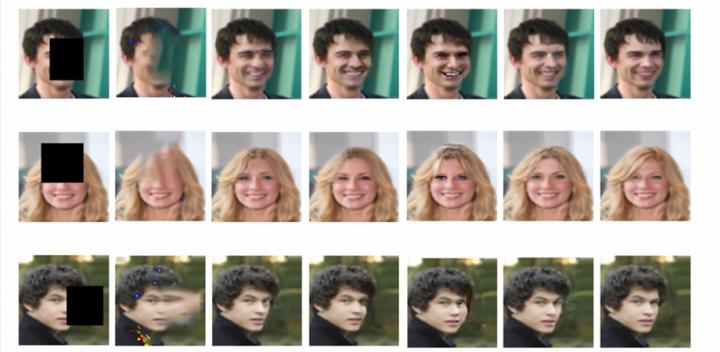

Researchers at the University of Tokyo have presented a frequency-based inpainting method that uses both frequency and spatial information to generate missing image portions. Publishing in the Journal of Electronic Imaging (JEI), Hiya Roy et al. detail the technique in “Image inpainting using frequency domain priors.” Current methods employ only spatial-domain information during the learning process, which can cause details in the interior of the reconstructed region to be lost, so that only the low-frequency part of the original patch is estimated. To solve that problem, the researchers looked to frequency-based image inpainting and demonstrated that treating inpainting as deconvolution in the frequency domain makes it possible to predict the local structure of missing image regions.
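One way to see why inpainting can be framed as deconvolution is the convolution theorem: hiding pixels multiplies the image by a 0/1 mask in the spatial domain, and that multiplication is equivalent to a circular convolution of the two spectra in the frequency domain. The sketch below checks this numerically on a one-dimensional signal with a naive discrete Fourier transform. It is an illustration only, not the authors' network; the signal, the mask, and the helper names `dft` and `circular_convolve` are assumptions made for this example.

```python
import cmath

def dft(signal):
    """Naive discrete Fourier transform (O(n^2)), adequate for a small demo."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]

def circular_convolve(a, b):
    """Circular convolution of two equal-length sequences."""
    n = len(a)
    return [sum(a[j] * b[(k - j) % n] for j in range(n)) for k in range(n)]

# Hypothetical 1-D "image" and a mask that hides two samples (0 = missing).
signal = [3.0, 1.0, 4.0, 1.0, 5.0, 9.0, 2.0, 6.0]
mask = [1.0, 1.0, 1.0, 0.0, 0.0, 1.0, 1.0, 1.0]

# Spatial domain: masking is an element-wise product.
masked = [s * m for s, m in zip(signal, mask)]
spectrum_of_masked = dft(masked)

# Frequency domain: the same result is the circular convolution of the two
# spectra, scaled by 1/n for this (unnormalized) DFT convention.
n = len(signal)
convolved = [v / n for v in circular_convolve(dft(signal), dft(mask))]

# Largest difference between the two routes; it should be at rounding level.
max_gap = max(abs(a - b) for a, b in zip(spectrum_of_masked, convolved))
print(max_gap)
```

Because the observed spectrum is the true spectrum convolved with the mask's spectrum, recovering the missing pixels amounts to undoing that convolution, which is the task the article describes the network as learning.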

“The frequency-domain information contains rich representations which allow the network to perform the image understanding tasks in a better way than the conventional way of using only spatial-domain information,” Roy says. “Therefore, in this work, we try to achieve better image inpainting performance by training the networks using both frequency and spatial domain information.”

Image inpainting algorithms historically fall into two broad categories. Diffusion-based image inpainting algorithms attempt to propagate the appearance of the surrounding image into the missing regions. This approach fills small holes well, but the quality of the results degrades as the holes grow. The second category is patch-based inpainting algorithms, which search the image for the best-fitting patch to fill the missing portions. This approach can fill larger holes but is ineffective for complex or distinctive portions of an image.
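As a rough illustration of the diffusion-based category, the sketch below fills missing pixels by repeatedly replacing each one with the average of its four neighbours, so that known values spread inward from the edge of the hole. This is a deliberately simple stand-in, not any specific published algorithm; the function name `diffuse_inpaint`, the iteration count, and the test image are assumptions made for this example.

```python
def diffuse_inpaint(image, mask, iterations=200):
    """Fill masked pixels by repeatedly averaging their 4-connected neighbours.

    image: list of rows of numbers.
    mask:  same shape, True where a pixel is missing.
    """
    height, width = len(image), len(image[0])
    # Start from a copy in which the missing pixels are set to zero.
    current = [
        [0.0 if mask[y][x] else float(image[y][x]) for x in range(width)]
        for y in range(height)
    ]
    for _ in range(iterations):
        updated = [row[:] for row in current]
        for y in range(height):
            for x in range(width):
                if not mask[y][x]:
                    continue  # known pixels are never modified
                neighbours = [
                    current[ny][nx]
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                    if 0 <= ny < height and 0 <= nx < width
                ]
                updated[y][x] = sum(neighbours) / len(neighbours)
        current = updated
    return current

# Hypothetical 7x7 horizontal gradient with a 3x3 hole in the middle.
gradient = [[10.0 * x for x in range(7)] for _ in range(7)]
hole = [[2 <= y <= 4 and 2 <= x <= 4 for x in range(7)] for y in range(7)]
filled = diffuse_inpaint(gradient, hole)
print([round(v, 3) for v in filled[3]])
```

A smooth gradient is recovered almost exactly, but the same averaging blurs away any texture or edge that should pass through the hole, which is consistent with the quality loss on larger holes noted above.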

“The originality of the research resides in the fact that the authors used the frequency domain representation, namely the spectrum of the images obtained by fast Fourier transform, at the first stage of inpainting with a deconvolution network,” says Jenny Benois-Pineau of the University of Bordeaux, a senior editor for JEI. “This yields a rough inpainting result capturing the structural elements of the image. Then the refinement is fulfilled in the pixel domain by a GAN network. Their approach outperforms the state-of-the-art in all quality metrics: PSNR, SSIM, and L1.”
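For readers unfamiliar with the metrics named in the quote, the sketch below computes two of them under their common textbook definitions: L1 as the mean absolute pixel difference (lower is better) and PSNR as 10·log10(MAX² / MSE) in decibels (higher is better). SSIM is omitted because it needs windowed local statistics. The exact normalization used in the paper is not given here, so the 0 to 255 pixel range and the function names are assumptions made for this example.

```python
import math

def _pixel_pairs(image_a, image_b):
    """Yield corresponding pixel pairs from two equally sized images."""
    for row_a, row_b in zip(image_a, image_b):
        for a, b in zip(row_a, row_b):
            yield a, b

def l1_error(image_a, image_b):
    """Mean absolute difference between two images (lower is better)."""
    diffs = [abs(a - b) for a, b in _pixel_pairs(image_a, image_b)]
    return sum(diffs) / len(diffs)

def psnr(image_a, image_b, max_value=255.0):
    """Peak signal-to-noise ratio in decibels (higher is better)."""
    squared = [(a - b) ** 2 for a, b in _pixel_pairs(image_a, image_b)]
    mse = sum(squared) / len(squared)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

# Hypothetical 2x2 ground truth and a reconstruction with one wrong pixel.
truth = [[0, 0], [0, 255]]
guess = [[0, 0], [0, 0]]
print(l1_error(truth, guess))  # 63.75
print(psnr(truth, guess))      # about 6.02 dB
```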

Roy and colleagues show that deconvolution in the frequency domain can predict the missing regions of the image structure using context from the image. In its first stage, their model learned the context using frequency domain information, then reconstructed the high-frequency parts. In the second stage, it used spatial domain information to guide the color scheme of the image and then enhanced the details and structures obtained in the first stage. The result, the authors report, is sharper detail and more perceptually realistic inpainting.

“Experimental results showed that our method could achieve results better than state-of-the-art performances on challenging datasets by generating sharper details and perceptually realistic inpainting results,” say Roy et al. in the research paper. “Based on our empirical results, we believe that methods using both frequency and spatial information should gain dominance because of their superior performance.”

The group expects its research to serve as a springboard for applying other types of frequency-domain transformations to image restoration tasks such as image denoising.

###

Read the open access research article: Hiya Roy, Subhajit Chaudhury, Toshihiko Yamasaki, and Tatsuaki Hashimoto, “Image inpainting using frequency domain priors,” J. Electron. Imaging 30(2) 023016 (2021). DOI: 10.1117/1.JEI.30.2.023016

Media Contact

Daneet Steffens
