The advent of Generative Artificial Intelligence (Gen-AI) has ushered in a transformative era in healthcare, particularly within the realm of radiology. As the complexity of radiological interpretations continues to escalate, the integration of Gen-AI technology has surfaced as a pivotal advancement in enhancing diagnostic accuracy. However, the clinical deployment of such technology necessitates rigorous validation against real-world data to ensure its efficacy and reliability in a clinical setting. Recent studies have explored the performance of various Gen-AI models in interpreting pulmonary CT images, revealing a spectrum of diagnostic accuracies that underscore the challenges and potential of this cutting-edge technology.

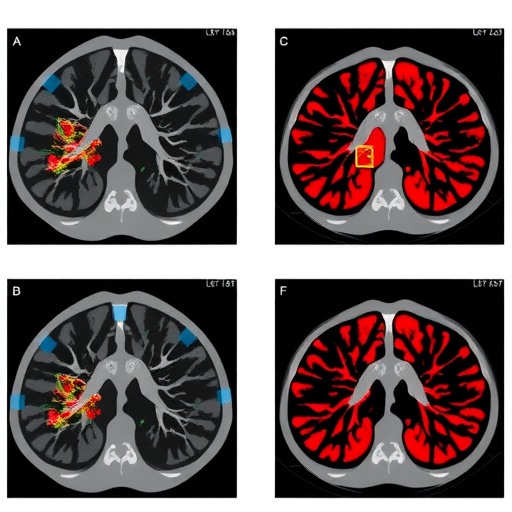

In a thorough investigation involving 184 confirmed cases of malignant lung tumors, researchers meticulously analyzed the diagnostic performance of three prominent Gen-AI models: Gemini, Claude-3-opus, and GPT. The findings indicated that Gemini emerged as the frontrunner with an impressive accuracy rate surpassing 90%, closely followed by Claude-3-opus. In stark contrast, GPT demonstrated a considerably lower accuracy of only 65.22%. This variation in performance highlights the importance of selecting the appropriate AI model for specific tasks, particularly in high-stakes medical environments where accuracy is paramount.

.adsslot_S1GmOsfAgF{ width:728px !important; height:90px !important; }

@media (max-width:1199px) { .adsslot_S1GmOsfAgF{ width:468px !important; height:60px !important; } }

@media (max-width:767px) { .adsslot_S1GmOsfAgF{ width:320px !important; height:50px !important; } }

ADVERTISEMENT

One interesting aspect of this investigation was the introduction of clinical history into the diagnostic process, which marginally elevated Gemini’s accuracy to 68.30%. This increment suggests that while AI models can vastly enhance diagnostic efficiency, there remains a cautionary need to ensure these models do not become overly reliant on textual inputs, potentially overshadowing crucial imaging features that are vital for accurate diagnosis. The same pattern of underperformance in complex input scenarios was observed with GPT, which struggled with an accuracy of 48.91% when analyzing continuous CT slices, and only achieved 63.95% with integrated clinical history.

The overall performance of Claude-3-opus and GPT illuminated their robustness in handling various input types, particularly when faced with continuous imaging challenges where they exhibited notable stability and improved accuracy metrics. The study further demonstrated that by adopting a standardized diagnosis approach using consistent results across multiple attempts, Claude-3-opus consistently outperformed both Gemini and GPT in diagnostic accuracy.

To enhance the diversity of the analyzed sample, the research incorporated additional categories such as non-malignant nodules, inflammatory lesions, and normal lung appearances. This inclusion allowed for a more holistic evaluation of the AI models’ diagnostic capabilities, with results indicating that both Claude and Gemini achieved an area under the curve (AUC) of 0.61 when assessing single CT images. However, the complexity of the input directly correlated with a decline in both models’ diagnostic AUC as the input scenarios escalated.

In a crucial aspect of the research, the simplification of prompts utilized during the AI diagnostics significantly enriched the performance metrics for all three models. Post-simplification assessments recorded notable increases in AUC values, with Claude, Gemini, and GPT achieving AUCs of 0.69, 0.76, and 0.73, respectively. These enhanced metrics not only underline the importance of prompt precision but also highlight the intricate balance between model complexity and interpretative clarity, particularly when confronted with varying imaging modalities.

When assessing the diagnostic capacity of Gen-AI with respect to identified lesion features, it became apparent that Claude and GPT exhibited superior performance in both the accuracy and diversity of lesion localization and description. All models heavily featured morphological and margin characteristics as essential factors for malignancy, notably emphasizing identifiers such as “spiculated” and “irregular” lesions. This reliance on morphological features underscores a potential area for enhancement within AI models, particularly the need for more nuanced training that incorporates advanced lesion characteristics alongside more conventional identifiers.

The study’s findings brought to light a compelling issue concerning misdiagnosed cases, in which notable discrepancies were identified across various dimensions of model performance. The significant variances in misdiagnosis not only raise concerns about feature fabrication but also prompt questions regarding the maturity of the Gen-AI models in accurately learning and reproducing image features during training processes. This facet reveals a critical avenue for ongoing research aimed at refining the robustness of AI-driven diagnostic capabilities.

For performance optimization, advanced regression techniques were employed, resulting in AUCs of 0.896 and 0.884 prior to and following cross-validation, respectively. These promising results indicate not only the potential for enhanced diagnostic accuracy but also the stability of the model constructs utilized. Stepwise regression yielded similar AUC results, albeit with a noted increase in variability. Notably, external validation through datasets such as TCGA-LUAD, TCGA-LUSC, and MIDRC-RICORD-1A further substantiated the initial findings, demonstrating that Claude consistently outperformed its counterparts during assessments employing simplified prompts.

Through rigorous dimensionality reduction of features, Lasso regression contributed to a recalibration of performance indicators, achieving more balanced outcomes as validated by additional ROC curve analyses. This multi-faceted evaluation reinforces the imperative for continual refinement of Gen-AI technologies within radiology, highlighting areas for improvement and urging the incorporation of diverse datasets for comprehensive model training.

On a broader scale, the implications of these findings extend beyond mere diagnostic metrics; they pose critical questions about the future role of AI in personalized medicine. As Gen-AI technologies evolve, their integration into standard clinical practice necessitates a thoughtful approach, balancing enhanced diagnostic capabilities with the essential human elements of interpretation and decision-making in healthcare. The path forward must prioritize rigorous validation with real-world data to ensure that these promising technologies deliver on their potential, ultimately improving patient outcomes and fostering a more informed approach to radiological diagnostics.

As the landscape of healthcare continues to evolve, the intersection of AI and clinical practice will certainly be a focal point of ongoing research and discussion. With significant strides made in the evaluation of Gen-AI models, the future is poised for innovations that not only enhance diagnostic accuracy but also fundamentally transform the way radiologists and clinicians approach patient care in an increasingly complex diagnostic environment.

Subject of Research: Diagnostic accuracy of AI models for pulmonary CT images

Article Title: Enhancing Diagnostic Precision with Generative AI in Pulmonary Radiology

News Publication Date: TBD

Web References: TBD

References: TBD

Image Credits: THE AUTHORS

Keywords

Artificial Intelligence, Radiology, Pulmonary Imaging, Diagnostic Accuracy, Machine Learning, Gen-AI, Healthcare, Cancer Detection, CT Imaging, Data Analysis, Model Validation, Medical Technology.

Tags: accuracy rates of AI in diagnosticsadvanced multimodal models in radiologychallenges in radiological interpretationsclinical validation of AI technologiescomparative evaluation of Gen-AI in medicinediagnostic accuracy of AI modelsGenerative AI in healthcarelung cancer diagnosis using AIperformance of AI in lung tumor detectionpulmonary CT image analysisselecting AI models for medical taskstransformative impact of AI on healthcare