Tree-based models have proliferated rapidly in machine learning thanks to their flexibility and accuracy. These models, such as decision trees and their ensemble variants, have proven valuable across applications from finance to healthcare. However, one of the most significant challenges facing researchers and practitioners is not merely predicting outcomes but understanding how these models reach their decisions. This understanding is critical in high-stakes domains, where interpretability affects trust and accountability.

Tree models excel at handling complex relationships and nonlinear patterns in data. Traditional interpretation methods, however, have largely focused on assessing the importance of individual features. This approach often oversimplifies the interdependencies among multiple features and so limits how much an interpretation can explain. As a result, there is a need for methods that account for the collective influence of feature groups rather than viewing features in isolation.

To address these challenges, a research team led by Wei Gao has proposed a way to improve the interpretability of tree-based models. Their work, recently published in Frontiers of Computer Science, introduces an interpretation method that measures the importance of feature groups, thereby uncovering correlations and structures among features. The approach clarifies how tree models derive their predictions and contributes to the broader goal of making machine learning more transparent and accountable.

The team’s method centers on a quantity they term the BGShapvalue. This metric scores the importance of a feature group as a whole, capturing not just individual feature contributions but also how the features in the group act together. With BGShapvalue, researchers can capture interactions within tree models that single-feature importance scores miss.
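To make the idea of a group-level Shapley value concrete, here is a minimal sketch. It is not the authors' definition of BGShapvalue, which the article does not spell out. It assumes one common formulation: features missing from a coalition are replaced by a fixed baseline, and the feature group is treated as a single player alongside each remaining feature. The names `toy_tree`, `baseline_value`, and `group_shapley` are hypothetical, and the brute-force enumeration is exponential in the number of features, so it only suits tiny examples.

```python
from itertools import combinations
from math import factorial


def toy_tree(z):
    """Hand-written depth-2 decision tree over three features (illustrative only)."""
    if z[0] > 0.5:
        return 4.0 if z[1] > 0.5 else 2.0
    return 1.0 if z[2] > 0.5 else 0.0


def baseline_value(model, x, baseline, present):
    """Evaluate the model with features in `present` taken from x, the rest from baseline."""
    z = [x[i] if i in present else baseline[i] for i in range(len(x))]
    return model(z)


def group_shapley(model, x, baseline, group):
    """Brute-force Shapley value of `group`, treated as one player (assumed formulation)."""
    group = set(group)
    others = [i for i in range(len(x)) if i not in group]
    n_players = len(others) + 1  # the group plus each remaining feature
    total = 0.0
    for k in range(len(others) + 1):
        # Standard Shapley weight for a coalition of size k among n_players.
        weight = factorial(k) * factorial(n_players - k - 1) / factorial(n_players)
        for subset in combinations(others, k):
            coalition = set(subset)
            with_group = baseline_value(model, x, baseline, coalition | group)
            without_group = baseline_value(model, x, baseline, coalition)
            total += weight * (with_group - without_group)
    return total


if __name__ == "__main__":
    x = [1.0, 1.0, 1.0]          # instance to explain
    baseline = [0.0, 0.0, 0.0]   # reference input standing in for "absent" features
    print(group_shapley(toy_tree, x, baseline, {0, 1}))
    print(group_shapley(toy_tree, x, baseline, {0}))
```

On this toy tree, features 0 and 1 sit on the same decision path, so scoring them as a group reflects their joint effect rather than splitting it across two separate scores.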

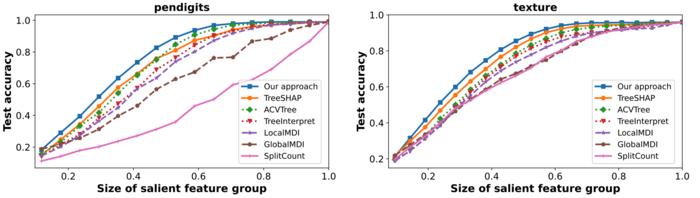

To implement their method, the researchers developed an algorithm known as BGShapTree. This polynomial-time algorithm efficiently computes BGShapvalues by decomposing them into manageable components. The core of the algorithm hinges on the relationships between individual features and the model’s decision-making pathways. In practice, the team used a greedy search algorithm to identify salient feature groups with large BGShapvalues, highlighting which combinations of features most strongly influence model predictions.
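The greedy search described above can be sketched roughly as follows. This is an illustration of generic greedy forward selection, not the team's published procedure: the article does not give their stopping rule or scoring details. The function `greedy_group_search`, the toy model, and the stand-in score `switch_on_score` (the change in output when only the group's features move from a baseline to the instance's values) are all hypothetical. In the actual method the score would be the BGShapvalue.

```python
from typing import Callable, List, Sequence, Tuple


def greedy_group_search(
    n_features: int,
    score: Callable[[Sequence[int]], float],
    max_size: int,
) -> Tuple[List[int], float]:
    """Grow a feature group one feature at a time, keeping the best-scoring addition."""
    group: List[int] = []
    best = float("-inf")
    remaining = list(range(n_features))
    while remaining and len(group) < max_size:
        # Score every one-feature extension of the current group.
        scored = [(score(group + [j]), j) for j in remaining]
        top_score, top_feature = max(scored, key=lambda t: t[0])
        if top_score <= best:
            break  # no extension improves the score, so stop early
        group.append(top_feature)
        remaining.remove(top_feature)
        best = top_score
    return group, best


def toy_tree(z):
    """Hand-written depth-2 decision tree over three features (illustrative only)."""
    if z[0] > 0.5:
        return 4.0 if z[1] > 0.5 else 2.0
    return 1.0 if z[2] > 0.5 else 0.0


def switch_on_score(group):
    """Stand-in group score (an assumption, not BGShapvalue)."""
    x = [1.0, 1.0, 1.0]
    baseline = [0.0, 0.0, 0.0]
    z = [x[i] if i in group else baseline[i] for i in range(len(x))]
    return toy_tree(z) - toy_tree(baseline)


if __name__ == "__main__":
    print(greedy_group_search(3, switch_on_score, max_size=2))
```

A greedy search evaluates only a few groups per step instead of every possible subset, which keeps the cost manageable, but it can miss a group whose members look weak individually and strong only together.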

The significance of this research extends beyond theoretical contributions; extensive experiments across 20 benchmark datasets validate the effectiveness of the proposed methodology. Not only do these results underscore the practicality of the BGShapvalue and BGShapTree, but they also offer a pathway forward for researchers looking to enhance the interpretability of their machine learning models. By providing a systematic way to assess feature group importance, this work addresses a fundamental gap in the current landscape of model interpretation.

Looking ahead, the research team aims to broaden the method’s applicability. One immediate goal is to adapt the proposed techniques to more complex tree models, such as XGBoost and deep forests. These models, known for their strong predictive performance, present both challenges and opportunities for interpretability.

Moreover, there is a growing need to identify more efficient strategies for searching and evaluating feature groups. The team’s focus on developing computationally feasible approaches ensures that their interpretation methods can scale to larger datasets and more intricate models, ultimately fostering broader adoption within the data science community.

As artificial intelligence and machine learning spread across sectors, the demand for interpretable models will only increase. This research contributes technical advances and also aligns with ethical principles of fairness and transparency in AI, supporting a more informed and responsible deployment of AI technologies.

In conclusion, the work by Wei Gao and colleagues is a substantial step forward for interpretable machine learning. By developing methods that consider the collective interaction of feature groups, their research enables a deeper understanding of model behavior. As the scientific community works to bridge the gap between predictive accuracy and interpretability, efforts like this will play an important role in the conversation around responsible AI.

As researchers continue to refine these methods, the scope for broader application and deeper understanding should grow. The work represents not just a method but a philosophy that prioritizes understanding the ‘why’ behind model predictions, with the aim of making AI not only smarter but also more transparent.

Subject of Research: Not applicable

Article Title: Interpretation with baseline Shapley value for feature groups on tree models

News Publication Date: 15-May-2025

Web References: Frontiers of Computer Science

References: DOI: 10.1007/s11704-024-40117-2

Image Credits: Fan XU, Zhi-Jian ZHOU, Jie NI, Wei GAO

Keywords

Computer science, machine learning, model interpretability, feature group importance, BGShapvalue, tree models, ethical AI, transparency.

Tags: collective feature influence, decision trees applications, enhancing model interpretability, ensemble model analysis, feature group insights, Frontiers of Computer Science publication, high-stakes decision making, interpretability in machine learning, machine learning transparency, nonlinear data relationships, tree-based models, Wei Gao research contributions