Accurate, efficient disease classification is increasingly important in modern agriculture. Maize, a staple food for millions of people worldwide, is susceptible to a range of diseases that can significantly lower yields and threaten food security. Recent advances in artificial intelligence, particularly in computer vision, have opened new ways to tackle these challenges. A study published in 2025 describes an approach to maize disease classification that uses a statistically validated stacking ensemble of Convolutional Neural Networks (CNNs) and Vision Transformers.

Deep learning has transformed image analysis, bringing large gains in the accuracy and efficiency of tasks such as identifying plant diseases. The study combines two established families of models, CNNs and Vision Transformers, to build a classifier that is both accurate and resilient under varied conditions. By drawing on the strengths of both, the research aims to reduce false positives and false negatives, each of which is costly in agricultural practice.

The core of the approach is stacking, in which several models are combined rather than relying on one. Unlike single-model approaches, stacking integrates the different representations learned by different architectures. CNNs, well suited to image processing, use their hierarchical structure to detect subtle visual patterns that indicate specific diseases. Vision Transformers instead rely on attention mechanisms, which capture dependencies between distant regions of an image and so provide a broader view of its context.
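To make the attention idea concrete, here is a minimal sketch of scaled dot-product self-attention over a handful of image-patch vectors. It is an illustration only, not the study's model: the function name `self_attention` is hypothetical, and the learned query, key, and value projections of a real Vision Transformer are replaced by the identity to keep the example short.

```python
import math

def softmax(z):
    """Numerically stable softmax over a list of scores."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

def self_attention(patches):
    """Scaled dot-product self-attention with identity projections.

    Assumption: queries, keys, and values are the patch vectors themselves.
    A real transformer learns a separate projection for each.
    """
    d = len(patches[0])
    out = []
    for q in patches:
        # Similarity of this patch to every patch, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in patches]
        weights = softmax(scores)
        # Each output is a weighted average of all patch vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, patches)) for j in range(d)])
    return out
```

Every output vector mixes information from all patches, which is why attention can relate a lesion in one corner of a leaf image to discoloration in another.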

The data used in this research play a pivotal role in model performance. The authors assembled a diverse dataset of maize leaf images showing different diseases. This exposes the model to many conditions rather than a single disease type, which improves its generalizability. Plant diseases are complex, and extensive datasets are needed to cover variation in symptoms caused by environmental factors, the stage of disease progression, or genetic variability among maize strains.

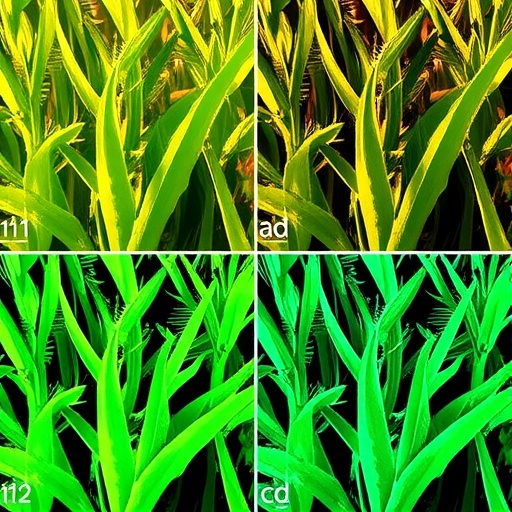

One of the study’s critical steps was rigorous preprocessing of the image data. The authors used image augmentation to artificially enlarge the dataset and so reduce overfitting, a common pitfall in machine learning where a model performs well on training data but poorly on unseen data. Techniques such as rotation, scaling, and color adjustment were used to make the model more robust, so that it can handle real-world scenarios in which images are captured under less-than-ideal conditions.
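The three kinds of augmentation named above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the authors' pipeline: images are small grayscale grids stored as lists of rows, rotation is limited to multiples of 90 degrees, a brightness gain stands in for color adjustment, and all function names and parameter ranges are hypothetical.

```python
import random

def rotate90(img):
    """Rotate a 2-D image (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def scale_nearest(img, factor):
    """Resize by `factor` using nearest-neighbour sampling."""
    h, w = len(img), len(img[0])
    new_h, new_w = max(1, round(h * factor)), max(1, round(w * factor))
    return [[img[min(h - 1, int(r / factor))][min(w - 1, int(c / factor))]
             for c in range(new_w)] for r in range(new_h)]

def adjust_brightness(img, gain):
    """Multiply every pixel by `gain` and clip to the 0..255 range."""
    return [[max(0, min(255, round(p * gain))) for p in row] for row in img]

def augment(img, rng):
    """Apply a random rotation, scale, and brightness change to one image."""
    for _ in range(rng.randrange(4)):          # 0, 90, 180, or 270 degrees
        img = rotate90(img)
    img = scale_nearest(img, rng.choice([0.5, 1.0, 2.0]))  # illustrative factors
    return adjust_brightness(img, rng.uniform(0.8, 1.2))   # illustrative range
```

Calling `augment(image, random.Random(seed))` with different seeds yields different variants of the same leaf image, which is how a fixed set of photographs can be stretched into a larger training set.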

Following preprocessing, the study used a multi-phase training process. Initial training used CNNs alone to establish a baseline. Vision Transformers were then added to the ensemble, building on what the CNN phase had established. In stacking, the predictions of the base models are generally passed to a meta-learner that learns how best to combine them, so the strengths of both architectures reinforce each other. As a result, the ensemble achieves performance that surpasses that of the individual models.
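A stacking ensemble of this kind can be sketched as follows. The article does not say which meta-learner the authors used, so this example assumes a softmax-regression meta-learner trained by gradient descent on the concatenated class probabilities of the base models. The names `stack_features`, `train_meta`, and `predict_meta` are hypothetical, as are the learning rate and epoch count.

```python
import math

def softmax(z):
    """Numerically stable softmax over a list of scores."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

def stack_features(*model_probs):
    """Concatenate each base model's class probabilities, sample by sample."""
    return [sum((list(p) for p in per_sample), []) for per_sample in zip(*model_probs)]

def train_meta(X, y, n_classes, lr=0.5, epochs=500):
    """Fit a softmax-regression meta-learner with batch gradient descent."""
    d, n = len(X[0]), len(X)
    W = [[0.0] * d for _ in range(n_classes)]
    b = [0.0] * n_classes
    for _ in range(epochs):
        gW = [[0.0] * d for _ in range(n_classes)]
        gb = [0.0] * n_classes
        for x, t in zip(X, y):
            p = softmax([sum(w * v for w, v in zip(W[k], x)) + b[k]
                         for k in range(n_classes)])
            for k in range(n_classes):
                err = p[k] - (1.0 if k == t else 0.0)  # cross-entropy gradient
                gb[k] += err
                for j in range(d):
                    gW[k][j] += err * x[j]
        for k in range(n_classes):
            b[k] -= lr * gb[k] / n
            for j in range(d):
                W[k][j] -= lr * gW[k][j] / n
    return W, b

def predict_meta(W, b, x):
    """Return the class index with the highest meta-learner score."""
    scores = [sum(w * v for w, v in zip(W[k], x)) + b[k] for k in range(len(b))]
    return max(range(len(b)), key=scores.__getitem__)
```

In use, `stack_features(cnn_probs, vit_probs)` would take the per-image probability vectors from each base model and produce the meta-learner's input. A common precaution is to train the meta-learner on predictions for images the base models did not see during their own training, so that it does not simply learn to trust overfitted outputs.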

The statistical validation of the model was another cornerstone of the study. Rigorous testing ensured that the predictions made by the ensemble were not only accurate but also reliable under various conditions. The researchers employed techniques such as k-fold cross-validation to ascertain consistency across subsets of the data, further enhancing trust in the model’s predictions. Performance metrics such as accuracy, precision, recall, and F1-score were meticulously calculated, providing a comprehensive view of its efficacy in real-world applications.
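The evaluation steps named above can be sketched as follows. This is an illustration rather than the authors' evaluation code: the function names are hypothetical, and macro averaging (an unweighted mean over classes) is an assumption, since the article does not state how the per-class scores were averaged.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) pairs for shuffled k-fold cross-validation."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]   # k disjoint, near-equal folds
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]

def classification_metrics(y_true, y_pred, classes):
    """Return accuracy plus macro-averaged precision, recall, and F1."""
    precisions, recalls, f1s = [], [], []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    n = len(classes)
    return {
        "accuracy": sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }
```

Each image lands in the test fold exactly once, so computing the metrics on every fold and comparing them shows how much performance varies with the choice of training data.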

The outcome of this research is significant, especially in an era where digital agriculture is on the rise. The use of AI in disease classification can lead to timely interventions, which are crucial in minimizing crop losses. With the implementation of this ensemble model, farmers can potentially leverage mobile applications that deploy this technology, enabling them to capture images of their crops and receive instant feedback regarding the health of their plants.

Beyond its technical contribution, this research also feeds a broader discussion about integrating machine learning into agriculture, one that covers not only the capabilities of such tools but also the responsibilities that come with using them. The knowledge gained through AI can support informed decision-making and, ultimately, sustainable agricultural practices. This aligns with global goals for agricultural resilience, particularly in regions that depend heavily on maize as a primary food source.

The study further underscores the importance of collaboration among researchers, practitioners, and policymakers. Bringing these advances into the agricultural sector will require an ecosystem approach that includes farmer training, agricultural extension services, and continuous support systems. Investment in infrastructure and access to technology could maximize the benefits of this research and ensure that the innovations reach the grassroots level.

In conclusion, this statistically validated stacking ensemble of CNNs and Vision Transformers represents a significant stride toward robust maize disease classification. As AI continues to impact various fields, its presence in agriculture illustrates the potential for transforming age-old practices into modern, data-driven operations that can safeguard food security. The meticulous attention to detail, from dataset creation to model validation, speaks volumes about the potential of interdisciplinary collaboration harnessed to tackle real-world challenges through innovation.

The future stands to benefit immensely from continual advancements in this field. As researchers refine these techniques and explore additional layers of complexity, the comprehensive understanding of maize diseases will undoubtedly deepen, paving the way for even more sophisticated solutions that can further protect crops against threats. Thus, as we stand on the brink of technological evolution in agriculture, the convergence of AI and agronomy could indeed shape a more sustainable and productive future.

Subject of Research: Maize Disease Classification using AI Techniques

Article Title: A statistically validated stacking ensemble of CNNs and vision transformer for robust maize disease classification.

Article References:

Weldeslasie, D.T., Mekonen, M.Y., Abebe, A.M. et al. A statistically validated stacking ensemble of CNNs and vision transformer for robust maize disease classification. Discov Artif Intell 5, 284 (2025). https://doi.org/10.1007/s44163-025-00548-7

Image Credits: AI Generated

DOI: 10.1007/s44163-025-00548-7

Keywords: maize, disease classification, machine learning, CNN, Vision Transformer, deep learning, agricultural technology

Tags: advanced methodologies in crop disease management, artificial intelligence in farming, CNNs in agriculture, computer vision applications in agriculture, deep learning in plant pathology, ensemble learning for disease classification, food security and crop yield, image analysis for agricultural crops, innovative approaches to maize disease classification, maize disease detection techniques, reducing false positives in disease identification, Vision Transformers for crop analysis