Credit: Kobe University

A research group led by Kobe University’s Professor MATOBA Osamu (Organization for Advanced and Integrated Research) has developed a system for 3D fluorescence and phase imaging of living cells based on digital holography (*1). The group demonstrated the system using plant cells whose nuclei were labeled with fluorescent protein markers.

The group consisted of Project Assistant Professor Manoj KUMAR and Assistant Professor Xiangyu QUAN (both of the Graduate School of System Informatics), Professor AWATSUJI Yasuhiro (Kyoto Institute of Technology) and Associate Professor TAMADA Yosuke (Utsunomiya University).

This technology will form a foundation for living cell imaging, which is indispensable in the life sciences field. It is also expected that using this technology to visualize stem cell processes in plants will increase our understanding of them.

These research results appeared in the journal Scientific Reports published by Springer Nature on May 15.

Main Points

- Creation of an integrated system that can perform high-speed 3D fluorescence and quantitative phase imaging without scanning.

- The mathematics behind the integrated system’s fluorescence imaging was formulated.

- Each of the spatial 3D fluorescence and phase images was generated from a single 2D image. Time-lapse observations are also possible.

- It is expected that this technology will be applied to visualize stem cell formation processes in plants.

Research Background

The optical microscope was invented at the end of the sixteenth century, and in the mid-seventeenth century the British scientist Robert Hooke became the first to discover cells. The microscope has since evolved into new technologies such as the phase-contrast microscope (1953 Nobel Prize in Physics), which allows living cells to be observed without staining, and fluorescence cell imaging, in which specific molecules in living cells are labeled with fluorescent proteins (2008 Nobel Prize in Chemistry) and then observed. These have become essential observation tools in the life sciences and medicine.

Phase imaging uses differences in the optical path length of light passing through a biological sample to reveal its structure. Fluorescence imaging provides information about specific molecules inside the sample and can reveal their functions. However, intracellular structure and motility are complex, and visualizing multidimensional physical information that combines phase and fluorescence would help in understanding them. An imaging system that could capture such varied physical information from living cells in 3D, simultaneously and instantaneously, would serve as a foundational technology for innovation in biology.

The hybrid multimodal imaging system constructed in this study can obtain phase and fluorescent 3D information in a single shot. It enables researchers to quantitatively and simultaneously visualize a biological sample’s structural or functional information using a single platform.

Research Methodology

In this study, the researchers constructed a multimodal digital holographic microscope that records a sample’s fluorescence information and phase information simultaneously (Figure 1). The microscope is based on digital holography, in which the interference pattern formed by light from the object is recorded and a computer then performs optical calculations to reconstruct 3D spatial information about the object.

The microscope in the study is composed of two different optical systems. The first is the holographic 3D fluorescence imaging system (*2), shown on the right-hand side of Figure 1. To obtain 3D fluorescence information, a Spatial Light Modulator (*3) splits the fluorescent light emitted by fluorescent molecules into two light waves that can interfere with one another.

At this point, the 3D information in the object light is preserved by giving one of the light waves a slightly different curvature radius and propagation direction. At the same time, the two light waves share an optical path (propagating mostly along the same axis), which makes a temporally stable interference measurement possible. This study formulated a mathematical model of the optical system, describing the recorded interference intensity distribution for the first time. The model enabled the researchers to identify experimental conditions that improve the quality of the reconstructed fluorescence images. By applying this holographic 3D fluorescence system, they generated three-dimensional images of living cells and their structures. Molecules and structures in the living cells were labeled with fluorescent proteins, so their dynamic behavior could be observed with this new 3D fluorescence microscopy.
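The shared-path idea can be illustrated with a minimal sketch under a simple paraxial model; this is an assumption and not the formula derived in the study. A single point emitter produces two copies of its wave with hypothetical curvature radii z1 and z2 (which, in a real system, vary with the emitter's depth). Their interference is a Fresnel zone pattern whose ring spacing depends on 1/z1 − 1/z2, while a phase drift common to both copies cancels. Because fluorescence is incoherent, each emitter interferes only with itself, so a full fluorescent hologram would be the incoherent sum of such patterns.

```python
import numpy as np

def self_interference_hologram(x, y, wavelength, z1, z2, common_phase=0.0):
    """Paraxial intensity of two copies of one point source's wave.

    z1, z2: hypothetical curvature radii given to the two copies.
    common_phase: a phase drift shared by both copies (e.g. vibration
    on a common path); it cancels in the recorded intensity.
    """
    k = 2 * np.pi / wavelength
    r2 = x ** 2 + y ** 2
    u1 = np.exp(1j * (k * r2 / (2 * z1) + common_phase))
    u2 = np.exp(1j * (k * r2 / (2 * z2) + common_phase))
    return np.abs(u1 + u2) ** 2

# Hypothetical values in micrometres: 0.52 um emission, radii 1000 and 1200 um
coords = np.linspace(-200.0, 200.0, 401)
X, Y = np.meshgrid(coords, coords)
holo = self_interference_hologram(X, Y, 0.52, 1000.0, 1200.0)
drifted = self_interference_hologram(X, Y, 0.52, 1000.0, 1200.0, common_phase=1.3)

# Closed form: 2 + 2 cos(pi r^2 (1/z1 - 1/z2) / wavelength), a Fresnel zone pattern
closed = 2 + 2 * np.cos(np.pi * (X ** 2 + Y ** 2) * (1 / 1000.0 - 1 / 1200.0) / 0.52)
print(np.allclose(holo, closed), np.allclose(holo, drifted))
```

Both checks print True: the recorded pattern matches the closed form, and the common phase drift leaves it unchanged.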

Until now, 3D images have typically been built up by laser scanning, which takes comparatively longer. The imaging technology developed in this study instead generates 3D images in a single shot, without scanning.

Next, shown on the left of Figure 1, is the holographic 3D phase imaging system (*4). A living plant cell is made up of components such as the nucleus, mitochondria, chloroplasts, and a thin cell wall, and their structures can be visualized from differences in phase (optical path length). This system adopts a Mach-Zehnder interferometer, which provides a separate plane-wave reference beam, so the interference fringes can easily be optimized for each measured object.
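A minimal sketch of how a plane-wave reference lets a computer recover phase, assuming an off-axis geometry in which the reference is slightly tilted (the text does not specify the tilt or the demodulation method used). The tilt shifts the term carrying the object field away from the zero-order term in the Fourier domain, where a filter can isolate it. The carrier frequency, filter radius, and test object are all hypothetical.

```python
import numpy as np

def record_off_axis(obj, fx, fy):
    """Sensor intensity |O + R|^2 for a tilted unit plane-wave reference R.

    fx, fy: carrier frequency of the tilted reference, in cycles per pixel.
    """
    ny, nx = obj.shape
    y, x = np.mgrid[0:ny, 0:nx]
    ref = np.exp(2j * np.pi * (fx * x + fy * y))
    return np.abs(obj + ref) ** 2

def demodulate(holo, fx, fy, radius):
    """Recover the complex object field O from an off-axis hologram."""
    ny, nx = holo.shape
    spectrum = np.fft.fft2(holo)
    fy_grid = np.fft.fftfreq(ny)[:, None]
    fx_grid = np.fft.fftfreq(nx)[None, :]
    # The cross term O * conj(R) is centred at (-fx, -fy): keep a disc there
    mask = (fx_grid + fx) ** 2 + (fy_grid + fy) ** 2 < radius ** 2
    filtered = np.fft.ifft2(spectrum * mask)
    # Multiplying by R removes the carrier, leaving O
    y, x = np.mgrid[0:ny, 0:nx]
    return filtered * np.exp(2j * np.pi * (fx * x + fy * y))

# Hypothetical pure-phase object: a 1-rad Gaussian bump on a 64 x 64 grid
n = 64
yy, xx = np.mgrid[0:n, 0:n]
phi = 1.0 * np.exp(-((xx - 32) ** 2 + (yy - 32) ** 2) / (2 * 8.0 ** 2))
obj = np.exp(1j * phi)

holo = record_off_axis(obj, 0.25, 0.25)
recovered = demodulate(holo, 0.25, 0.25, radius=0.2)
print(np.max(np.abs(np.angle(recovered) - phi)))  # small residual phase error
```

In this sketch, choosing the tilt is a trade-off: the carrier must be large enough to separate the Fourier orders, yet small enough for the sensor pixels to resolve the fringes.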

This digital holographic microscope was created by unifying fluorescence and phase measurement systems.

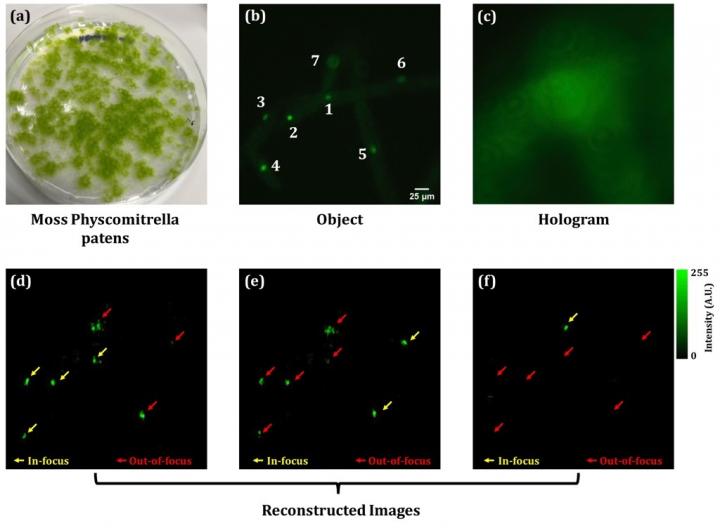

This microscope was then used to visualize living plant cells. An experiment was carried out to demonstrate 4D observation, that is, three spatial dimensions plus a (one-dimensional) time axis. The moss Physcomitrella patens and fluorescent beads with a mean size of 10 μm were used. Figures 2 and 3 show the results of simultaneous 3D fluorescence and phase imaging of the moss. Figure 2 (b) shows that seven nuclei are visible with a conventional full-field fluorescence microscope. Of these seven, nuclei 1, 2, and 4 are in focus; the nuclei at other depths are not, and appear only as a weak fluorescence image with widespread blurring. To solve this issue, the proposed method extracts the fluorescent light-wave information from the fluorescent hologram in Figure 2 (c). From this information, a numerical Fresnel propagation algorithm can reconstruct fluorescence images at any depth. As a result, in-focus images of multiple fluorescent nuclei at different depths were restored from a single image without scanning.

Figure 2 (d), (e), and (f) show the reconstructed images at three different planes. The axial distance between (d) and (e) is 10 micrometers, and 15 micrometers between (e) and (f). The yellow arrows indicate the nuclei that are in focus. It is possible to see which of the seven nuclei in the fluorescence image are in focus across the three planes.
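The numerical refocusing described above can be sketched with a Fresnel transfer-function propagator. This treats the extracted fluorescent wave as a coherent complex field, which is a simplification, and it does not model how reconstruction distance maps to sample depth (that depends on the optical layout). The grid size, pixel pitch, wavelength, and 20 μm defocus are hypothetical. The sketch back-propagates a defocused spot over a range of candidate distances and picks the one giving the sharpest peak.

```python
import numpy as np

def fresnel_propagate(field, wavelength, dx, z):
    """Propagate a sampled complex field by z using the Fresnel transfer function.

    H = exp(-i*pi*wavelength*z*(fx^2 + fy^2)); the constant exp(ikz) factor
    is dropped because it does not change intensity. Negative z back-propagates.
    """
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=dx)[None, :]
    fy = np.fft.fftfreq(ny, d=dx)[:, None]
    h = np.exp(-1j * np.pi * wavelength * z * (fx ** 2 + fy ** 2))
    return np.fft.ifft2(np.fft.fft2(field) * h)

def refocus_distance(field, wavelength, dx, candidates):
    """Return the back-propagation distance that maximises peak intensity."""
    peaks = [np.max(np.abs(fresnel_propagate(field, wavelength, dx, -z)) ** 2)
             for z in candidates]
    return candidates[int(np.argmax(peaks))]

# Hypothetical setup in micrometres: one small emitter defocused by 20 um
n, dx, wl = 256, 0.5, 0.52
coords = (np.arange(n) - n // 2) * dx
X, Y = np.meshgrid(coords, coords)
spot = np.exp(-(X ** 2 + Y ** 2) / (2 * 1.0 ** 2))
recorded = fresnel_propagate(spot, wl, dx, 20.0)

z_best = refocus_distance(recorded, wl, dx, np.arange(0.0, 41.0, 1.0))
print(z_best)  # 20.0
```

Because the transfer function for −z exactly undoes the one for +z, the search recovers the 20 μm defocus here; with real data the focus metric and step size would need tuning.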

Figure 3 shows the quantitative phase distribution for the three planes at different depths. Individual chloroplasts could be visualized (many lie around the edges of the cells, as indicated by the red peaks in Figure 3 (b)). The cell thickness calculated from the measured quantitative phase values was about 17 micrometers, very close to other reference values.
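The thickness estimate follows from the standard relation for transmitted light, Δφ = 2π(n_cell − n_medium)t/λ, assuming a uniform refractive-index difference and an unwrapped phase. The sketch below solves it for t, and for the mean cell index when t is known. The wavelength and indices are hypothetical values, not those used in the study.

```python
import math

def thickness_from_phase(delta_phi, wavelength, n_cell, n_medium):
    """Thickness t = delta_phi * wavelength / (2*pi*(n_cell - n_medium)).

    delta_phi: unwrapped phase difference in radians; the returned
    thickness uses the same length unit as wavelength.
    """
    delta_n = n_cell - n_medium
    if delta_n <= 0:
        raise ValueError("n_cell must exceed n_medium")
    return delta_phi * wavelength / (2 * math.pi * delta_n)

def index_from_phase(delta_phi, wavelength, thickness, n_medium):
    """Inverse use of the same relation: mean cell index for a known thickness."""
    return n_medium + delta_phi * wavelength / (2 * math.pi * thickness)

# Hypothetical numbers in micrometres: 0.532 um light, n_cell 1.38, medium 1.33
t = thickness_from_phase(2 * math.pi, 0.532, 1.38, 1.33)
print(round(t, 2))  # 10.64
```

With these numbers, one full wave of phase (2π rad) corresponds to λ/Δn ≈ 10.64 μm, which shows how strongly the thickness estimate depends on the assumed index difference.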

Further Developments

In this study, a multimodal digital holographic microscope capable of simultaneous 3D phase and fluorescence measurement was developed. Because it performs quantitative phase and fluorescence imaging at the same time, this method is expected to serve as a new foundational technology for visualizing living biological tissues and cells. In particular, the study showed that the microscope can be applied to complex plant cells. It could therefore help build a more diversified understanding of stem cell formation in plants, whose cells reproduce more easily than animal cells. In the future, this information might be used to control stem cell processes through light stimulation. Efficient plant reproduction and growth, achieved through the proposed imaging combined with such future stimulation, could be applied to developing food cultivation systems.

This technology could be developed further by improving its light-use efficiency. Digital holography relies on interference between two light paths: the beam diameter must be widened spatially, the beam split in two, and the two beams overlapped again. Because the fluorescent light is divided in this way, more fluorescence energy is needed for the signal to be detected by the image sensor. Achieving this would require illuminating the living cells with a large amount of light energy, but light-induced cellular damage would then become a major problem. It is thought that a volume hologram could be used to boost light-use efficiency while avoiding cellular phototoxicity.

Another issue is that the reconstructed 3D distribution is elongated along the light propagation direction (the object’s depth direction), which decreases axial resolution. The researchers are working on methods using deep learning and filtering to suppress this elongation and enhance image quality.


Acknowledgments

This research was funded by the following:

- The Japan Society for the Promotion of Science (JSPS) KAKENHI Grant-in-Aid for Scientific Research (A) ‘Four-dimensional multimodal optical microscopy with nonlinear holographic parallel manipulation of cells’.

- The research project ‘Re-wiring neural connections by experimental optical stimulation of the brain’ (Research Director: Professor WAKE Hiroaki (Nagoya University)) conducted under the JST CREST Research area: ‘Development and Application of optical technology for spatiotemporal control of biological functions’ (Research Supervisor: Professor KAGEYAMA Ryoichiro).

- The Kobe University Organization for Advanced and Integrated Research’s Kiwami Project entitled: ‘Realization of 4D measurement and manipulation for life phenomena by holographic technology and its clinical applications’.

Glossary

1. Digital Holography

Light is an electromagnetic wave, and its optical electric field is described by amplitude and phase. Holography is a technique for recording both. The phase and amplitude are retrieved using the interference between the object light (light reflected by or transmitted through the object) and a reference beam: the intensity distribution of the resulting interference pattern is recorded on photosensitive film or an image sensor. When the developed film is illuminated with the same reference beam used during recording, the object light is reconstructed, forming a virtual image. In digital holography, an image sensor records the interference intensity distribution, and the object light is reconstructed by a computer using light propagation calculations. The technique is used for 3D measurement.
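The central point of this entry, that an intensity-only recording can still carry phase, follows from expanding |O + R|^2: the cross term 2 Re(O R*) depends on the phase difference between object and reference. The toy numbers below (a unit reference and an object of amplitude 0.5) are hypothetical.

```python
import cmath

def interference_intensity(obj, ref):
    """Intensity |O + R|^2 recorded by film or an image sensor."""
    return abs(obj + ref) ** 2

def expanded_intensity(obj, ref):
    """The same intensity expanded as |O|^2 + |R|^2 + 2 Re(O conj(R))."""
    return abs(obj) ** 2 + abs(ref) ** 2 + 2 * (obj * ref.conjugate()).real

ref = complex(1.0, 0.0)  # hypothetical unit-amplitude reference
for phase in (0.0, cmath.pi / 2, cmath.pi):
    obj = 0.5 * cmath.exp(1j * phase)  # same amplitude, different phase
    # |O|^2 alone is identical each time; the recorded intensity is not
    print(round(abs(obj) ** 2, 3), round(interference_intensity(obj, ref), 3))
```

|O|^2 prints as 0.25 in every case, whereas the recorded intensity is 2.25, 1.25, and 0.25 for object phases of 0, π/2, and π.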

2. Holographic Fluorescence 3D imaging

Fluorescence differs from laser light in that it has lower spatial and temporal coherence. Holography requires interference between two light beams, so a beam splitter or diffraction grating is used to split the incident fluorescent light. Splitting the light emitted by the fluorescence itself into multiple beams and interfering them with one another enables 3D information about the object to be obtained.
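Why low temporal coherence matters can be estimated with the usual rule of thumb for coherence length, L_c ≈ λ²/Δλ (the exact prefactor depends on the spectral shape). The emission bandwidth and laser linewidth below are hypothetical round numbers, not properties of the study's fluorophores or light source.

```python
def coherence_length(center_wavelength, bandwidth):
    """Rule-of-thumb coherence length lambda^2 / delta_lambda (same length unit)."""
    if bandwidth <= 0:
        raise ValueError("bandwidth must be positive")
    return center_wavelength ** 2 / bandwidth

# Hypothetical values in micrometres
fluor = coherence_length(0.51, 0.03)   # a 30 nm-wide emission band at 510 nm
laser = coherence_length(0.633, 1e-6)  # a 1 pm-wide laser line at 633 nm
print(f"fluorescence: {fluor:.2f} um, laser: {laser / 1e4:.1f} cm")
```

With these values the fluorescence coherence length is under 10 μm, versus tens of centimetres for the laser line, which is why the two fluorescent beams must travel nearly identical paths to interfere.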

3. Spatial Light Modulation

A device that controls the intensity (amplitude) and phase of light two-dimensionally (2D), using liquid crystals or micromirrors. Liquid-crystal devices change the phase by altering the speed of the light passing through each pixel.
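As an illustration of how an SLM can give one wave a different curvature, the sketch below computes the wrapped phase of a thin lens, a pattern an SLM can display to add curvature to the light it modulates. One common scheme lets only part of the light (for example, one polarization component) be modulated so that an unmodulated copy remains; whether the study used exactly this scheme is not stated here. The pixel count, pitch, wavelength, and focal length are hypothetical, and the gray-level mapping assumes a linear phase response that real devices need calibration to achieve.

```python
import numpy as np

def slm_lens_phase(n_pixels, pitch, wavelength, focal_length):
    """Wrapped thin-lens phase -pi r^2 / (wavelength f) for a square SLM.

    All lengths share one unit; the result lies in [0, 2*pi].
    """
    c = (np.arange(n_pixels) - n_pixels / 2) * pitch
    X, Y = np.meshgrid(c, c)
    phase = -np.pi * (X ** 2 + Y ** 2) / (wavelength * focal_length)
    return np.mod(phase, 2 * np.pi)

def to_gray_levels(phase, levels=256):
    """Map phase to drive levels 0..levels-1, assuming a linear phase response."""
    # The final modulo folds a value of exactly 2*pi back to level 0
    return np.floor(phase / (2 * np.pi) * levels).astype(np.int64) % levels

# Hypothetical SLM in micrometres: 512 x 512 pixels, 8 um pitch, 0.52 um light, f = 500 mm
pitch, wl, f = 8.0, 0.52, 500_000.0
pattern = slm_lens_phase(512, pitch, wl, f)
gray = to_gray_levels(pattern)

# The unwrapped phase step per pixel at the corner must stay below pi to avoid aliasing
r_corner = 256 * pitch * np.sqrt(2)
max_step = 2 * np.pi * r_corner * pitch / (wl * f)
print(gray.min(), gray.max(), max_step < np.pi)
```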

4. Holographic phase 3D imaging

Interference measurement determines the phase difference between two light beams from the interference intensity. Using a plane wave (a light wave with a uniform phase distribution) as the reference beam, the phase distribution of the object light can be obtained, and because intensity equals the square of the amplitude, its amplitude distribution can be obtained as well. By calculating the light propagation back to the object plane with propagation methods such as the Fresnel propagation or angular-spectrum algorithms, a computer can reconstruct both the intensity distribution and the phase distribution of the original object. Because the phase distribution is obtained quantitatively, it can also be used to measure properties such as cell thickness and refractive index.
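The two propagation methods named in this entry can be compared directly. The sketch below implements the angular-spectrum transfer function and the paraxial Fresnel transfer function, propagates a hypothetical smooth Gaussian field by 20 μm with each, and checks that they agree when the field contains only small angles. All parameter values are hypothetical.

```python
import numpy as np

def angular_spectrum(field, wavelength, dx, z):
    """Propagate a sampled field by z with the angular-spectrum method.

    Evanescent components (fx^2 + fy^2 > 1/wavelength^2) are discarded.
    """
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=dx)[None, :]
    fy = np.fft.fftfreq(ny, d=dx)[:, None]
    arg = 1.0 / wavelength ** 2 - fx ** 2 - fy ** 2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    h = np.where(arg > 0, np.exp(1j * kz * z), 0)
    return np.fft.ifft2(np.fft.fft2(field) * h)

def fresnel_tf(field, wavelength, dx, z):
    """Paraxial (Fresnel) transfer function, including the exp(ikz) factor."""
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=dx)[None, :]
    fy = np.fft.fftfreq(ny, d=dx)[:, None]
    h = np.exp(2j * np.pi * z / wavelength) * np.exp(
        -1j * np.pi * wavelength * z * (fx ** 2 + fy ** 2))
    return np.fft.ifft2(np.fft.fft2(field) * h)

# Hypothetical values in micrometres
n, dx, wl = 256, 0.5, 0.52
coords = (np.arange(n) - n // 2) * dx
X, Y = np.meshgrid(coords, coords)
field0 = np.exp(-(X ** 2 + Y ** 2) / (2 * 3.0 ** 2))  # smooth, small-angle field

as_field = angular_spectrum(field0, wl, dx, 20.0)
fr_field = fresnel_tf(field0, wl, dx, 20.0)
print(np.max(np.abs(as_field - fr_field)))  # small in this paraxial case

# Back-propagating by -z returns the original field
back = angular_spectrum(as_field, wl, dx, -20.0)
```

The angular-spectrum method is exact within scalar diffraction theory (apart from the discarded evanescent waves); the Fresnel version is its small-angle approximation, so the two diverge for fields containing large propagation angles.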

Media Contact

Verity Townsend


Related Journal Article

http://dx.