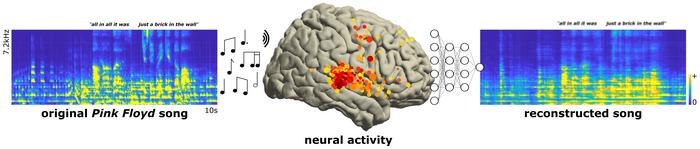

Researchers led by Ludovic Bellier at the University of California, Berkeley, US, demonstrate that recognizable versions of a classic Pink Floyd rock song can be reconstructed from brain activity recorded while patients listened to it. Published August 15th in the open access journal PLOS Biology, the study used nonlinear modeling to decode brain activity and reconstruct the song, “Another Brick in the Wall, Part 1.” Encoding models revealed a new cortical subregion in the temporal lobe that underlies rhythm perception, a finding that could be exploited by future brain-machine interfaces.

Credit: Ludovic Bellier, PhD (CC-BY 4.0, https://creativecommons.org/licenses/by/4.0/)

Past research has shown that computer modeling can be used to decode and reconstruct speech, but a predictive model for music that includes elements such as pitch, melody, harmony, and rhythm, as well as different regions of the brain’s sound-processing network, was lacking. The team at UC Berkeley succeeded in building such a model by applying nonlinear decoding to brain activity recorded from 2,668 electrodes, which were placed directly on the brains of 29 patients who then listened to classic rock. Activity at 347 of the electrodes was specifically related to the music; these electrodes were mostly located in three regions of the brain: the superior temporal gyrus (STG), the sensory-motor cortex (SMC), and the inferior frontal gyrus (IFG).

Analysis of song elements revealed a unique region in the STG that represents rhythm, in this case the guitar rhythm in the rock music. To find out which regions and which song elements were most important, the team reran the reconstruction analysis after removing different subsets of the data and then compared each reconstruction with the real song. Anatomically, they found that reconstructions were most affected when electrodes from the right STG were removed. Functionally, removing the electrodes related to sound onset or rhythm also degraded reconstruction accuracy, indicating the importance of these elements in music perception. These findings could have implications for brain-machine interfaces, such as prosthetic devices that help improve the perception of prosody, the rhythm and melody of speech.

Bellier adds, “We reconstructed the classic Pink Floyd song Another Brick in the Wall from direct human cortical recordings, providing insights into the neural bases of music perception and into future brain decoding applications.”

#####

In your coverage, please use this URL to provide access to the freely available paper in PLOS Biology: http://journals.plos.org/plosbiology/article?id=10.1371/journal.pbio.3002176

Citation: Bellier L, Llorens A, Marciano D, Gunduz A, Schalk G, Brunner P, et al. (2023) Music can be reconstructed from human auditory cortex activity using nonlinear decoding models. PLoS Biol 21(8): e3002176. https://doi.org/10.1371/journal.pbio.3002176

Author Countries: United States

Funding: see manuscript

Journal: PLoS Biology

DOI: 10.1371/journal.pbio.3002176

Method of Research: Experimental study

Subject of Research: People

Competing interests: The authors have declared that no competing interests exist.