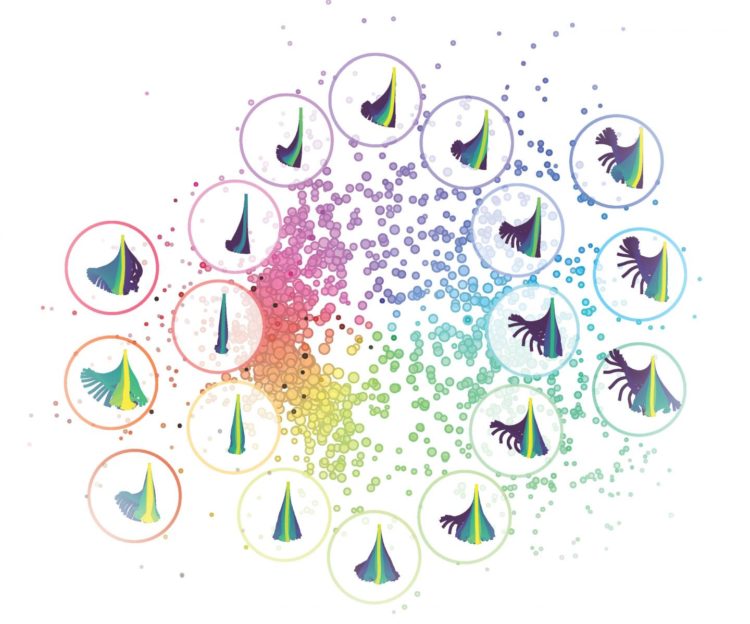

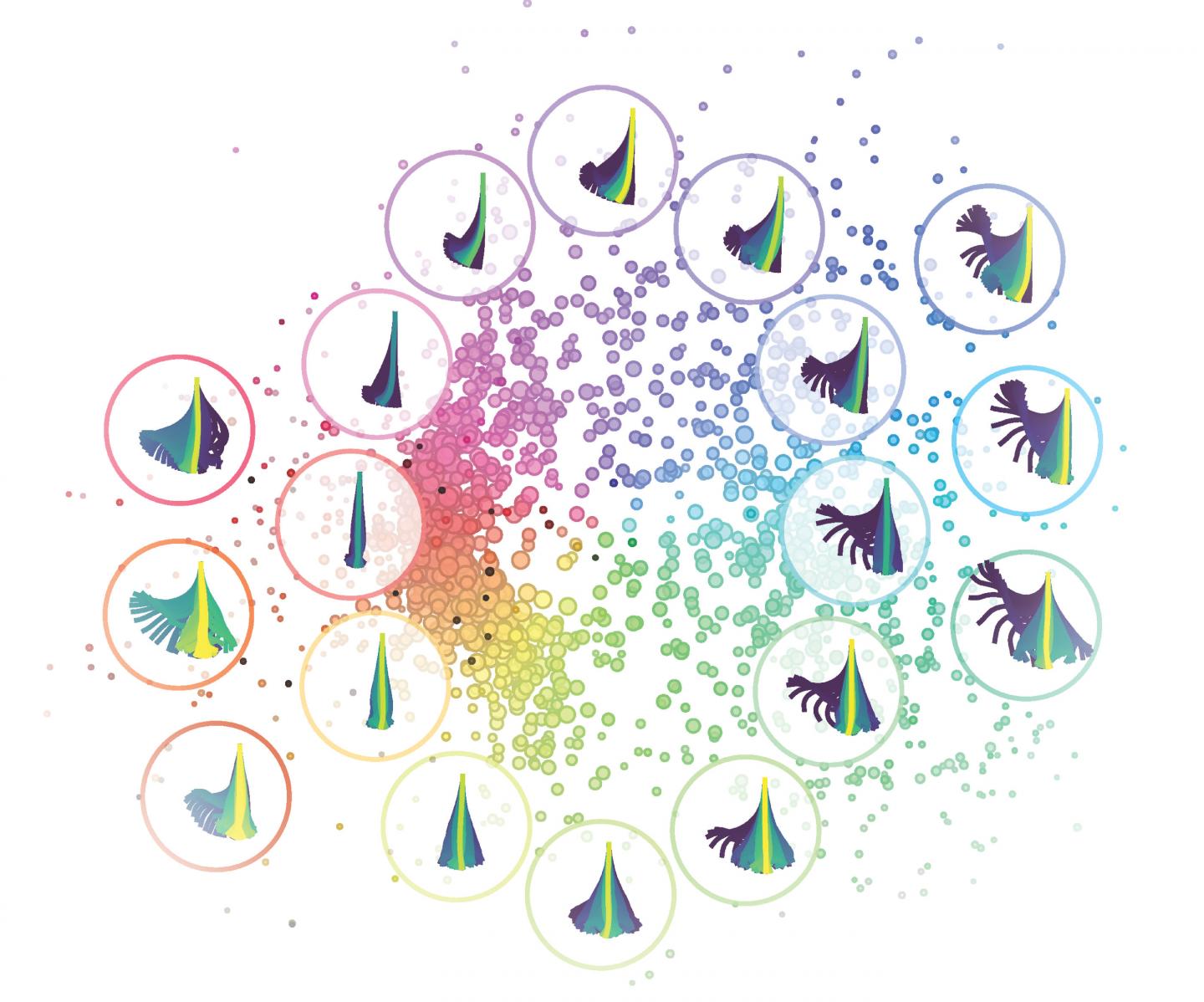

Computer algorithms disassemble prey capture behavior of zebrafish into its components

Credit: MPI of Neurobiology / Mearns

What do making a cup of tea and putting on your shoes have in common? Both actions consist of several movements in a row. “Just like language, which is composed of syllables arranged into sentences, many behaviors are comprised of several, sequential movements,” explains Duncan Mearns. “To understand how the brain generates behavior, we need to know the ‘syllables’, the building blocks of the behavior.” Aided by artificial intelligence, Mearns and his colleagues from the Max Planck Institute of Neurobiology have broken down the hunting behavior of larval zebrafish into its basic building blocks and shown how these building blocks combine to form longer sequences.

Although shorter than half a centimeter, zebrafish larvae already master all the behaviors they need to survive. Catching prey is one such innate behavioral sequence, fine-tuned by experience. But how do neuronal circuits steer and combine the components of this behavior to produce a successful prey capture?

The neurobiologists from the Baier department developed a high-tech assay to investigate the details of the fish behavior. High-speed cameras recorded eye, tail and jaw movements of the fish while the animals roamed freely in a small bowl. Specially designed computer algorithms then evaluated the recorded images and assigned them to a computer-learned behavioral component. The results of thousands of fish movements revealed three components of the prey capture behavior: orientation, approach and capture.

The motions of the fish tail are subject to continuous modulation depending on the position of the prey. Nevertheless, the computer algorithms were able to recognize three defined motion patterns for the three prey capture components. These motion patterns always followed each other in the same stereotypic sequence. “This is much too fast to see with your eyes and even in slow motion we couldn’t separate the behavioral components as precisely from each other as the computer,” explains Duncan Mearns, who developed the assay with his colleagues as part of his PhD thesis. When the prey was right in front of the fish’s mouth, the computer could even differentiate between two distinct movement types the fish use to capture their prey.

The prey always in view

The investigations confirmed that the fish need both eyes to take aim at their prey – and then to choose the appropriate catching behavior. Depending on the estimated distance, the fish decide between a quick sprint and gulp or a strong sucking movement. Thanks to these results, the researchers now know to look for neurons active on both sides of the brain, helping to determine how far away the prey is at this point in time.

The neurobiologists also gained insight into stimulus processing during prey capture when they replaced the prey with a virtual dot. Whenever the simulated prey dot disappeared, the fish aborted their hunting behavior, regardless of when in the sequence this happened. “This shows us that the fish needs continuous feedback from the eyes about the prey to be able to display the entire hunting sequence,” says Mearns.

Search for behavior in the brain

With their experimental setup, the neurobiologists disassembled a complex behavior into individual components recognizable by the computer. It is no coincidence that they studied this behavior in zebrafish. The transparent fish larvae and the available genetic and optogenetic methods now enable the scientists to search specifically for the neuronal circuits underlying the described behavioral components. “We now know much better what we are looking for and where we might find it,” explains Mearns.

###

Original Publication

Duncan S. Mearns, Joseph C. Donovan, António M. Fernandes, Julia L. Semmelhack & Herwig Baier

Deconstructing hunting behavior reveals a tightly coupled stimulus-response loop

Current Biology, online 19 December 2019

Media Contact

Stefanie Merker, PhD

[email protected]

49-898-578-3514

Original Source

https:/

Related Journal Article

http://dx.