Recent advancements in natural language processing (NLP) have ushered in a new era with the emergence of powerful language models, most notably the Generative Pretrained Transformer (GPT) series, which includes large language models such as ChatGPT (GPT-3.5 and GPT-4). These models undergo extensive pre-training on vast amounts of textual data, and their capability is evident in their strong performance across a broad spectrum of NLP tasks, including language translation, text summarization, and question answering. Notably, ChatGPT has demonstrated its potential across various domains, spanning education, healthcare, reasoning, text generation, human-machine interaction, and scientific research, to name just a few.

Credit: YIHENG LIU

In line with this emerging and noteworthy trend, a team of researchers in China has undertaken a thorough analysis of research papers centered around ChatGPT. As of April 1st, 2023, a total of 194 papers referencing ChatGPT were identified on arXiv.

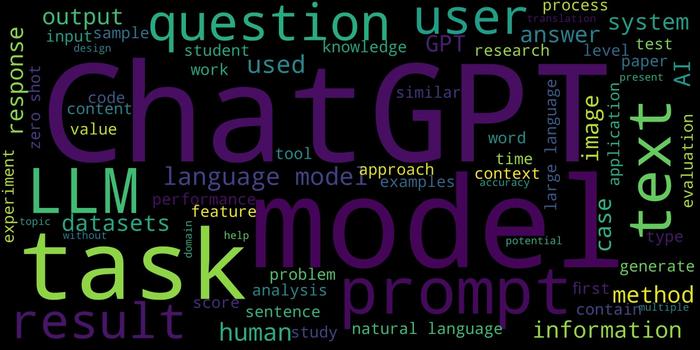

The team analyzed trends across these papers and generated a word cloud to visualize the most frequently used terms. They also examined how the papers are distributed across different fields and reported the corresponding statistics.

In addition, they conducted a comprehensive review of the existing literature on ChatGPT.

“Our review spans various dimensions, including an exploration of ChatGPT’s manifold applications, a thorough consideration of the ethical implications associated with its use, an evaluation of its capabilities, and an examination of its inherent limitations,” explains lead author Bao Ge.

The team’s findings indicate a rapid increase in interest in NLP models, showcasing their substantial potential across various domains.

“Nonetheless, it is essential to acknowledge the valid concerns surrounding biased or harmful content generation, privacy infringements and the potential for technology misuse,” Ge emphasizes.

“Mitigating these concerns and establishing a framework for the responsible and ethical development and deployment of ChatGPT stands as a paramount priority.”

###

Contact the author: Bao Ge. School of Physics and Information Technology, Shaanxi Normal University, Xi’an, 710119, Shaanxi, China. [email protected]

The publisher KeAi was established by Elsevier and China Science Publishing & Media Ltd to unfold quality research globally. In 2013, our focus shifted to open access publishing. We now proudly publish more than 100 world-class, open access, English language journals, spanning all scientific disciplines. Many of these are titles we publish in partnership with prestigious societies and academic institutions, such as the National Natural Science Foundation of China (NSFC).

Journal

Meta-Radiology

DOI

10.1016/j.metrad.2023.100017

Method of Research

Literature review

Subject of Research

Not applicable

Article Title

Summary of ChatGPT-Related research and perspective towards the future of large language models

COI Statement

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.