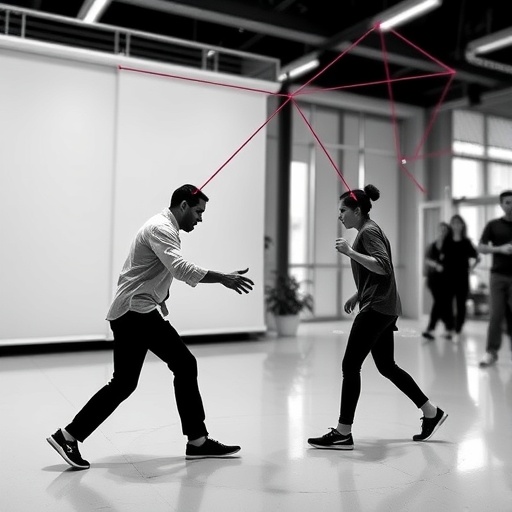

In a rapidly evolving landscape dominated by advancements in artificial intelligence, researchers are continuously striving to enhance our understanding and recognition of human actions within shared environments. A groundbreaking study conducted by Jiangtao Cheng, Wei Li, and Jinli Ding, slated for publication in 2026 in the journal “Discover Artificial Intelligence”, introduces an innovative method for recognizing two-person interactive actions, utilizing hypergraph convolutional networks (HGCNs). This research not only represents a significant technical leap but also unveils new potential applications in various fields, including surveillance, smart homes, and human-computer interaction.

Human action recognition has always posed significant challenges due to the complexities involved in identifying and interpreting mutual interactions between individuals. Traditional methods often rely on simplistic models that fail to capture the rich, collaborative dynamics inherent to partner-based activities. However, the researchers’ implementation of hypergraph convolutional networks provides a sophisticated framework that effectively encompasses the intricate relationships that emerge in two-person interactions, showcasing a progression in both computational capability and application potential.

At its core, the hypergraph convolutional network operates on hypergraphs, which generalize ordinary graphs. Whereas an edge in a traditional graph connects exactly two nodes, a hyperedge can connect any number of nodes at once, so a single hyperedge can represent a relationship among several entities. By leveraging this concept, the researchers can encode complex interactions as a unified structure, ensuring that the relational context between multiple actors is preserved and adequately analyzed.
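To make the graph-versus-hypergraph distinction concrete, here is a minimal NumPy sketch. It is not taken from the paper: the six-joint layout, the three hyperedges, and the function names `incidence_matrix` and `hypergraph_conv` are illustrative assumptions. The propagation rule is the commonly used normalized hypergraph convolution, X' = Dv^{-1/2} H W De^{-1} H^T Dv^{-1/2} X Θ, and may differ from the formulation the authors actually use; the nonlinearity is omitted for brevity.

```python
import numpy as np

def incidence_matrix(num_nodes, hyperedges):
    """Build the |V| x |E| incidence matrix H, where H[v, e] = 1 if node v belongs to hyperedge e."""
    H = np.zeros((num_nodes, len(hyperedges)))
    for e, members in enumerate(hyperedges):
        H[list(members), e] = 1.0
    return H

def hypergraph_conv(X, H, Theta, edge_weights=None):
    """One linear hypergraph convolution step (assumes every hyperedge is non-empty)."""
    w = np.ones(H.shape[1]) if edge_weights is None else np.asarray(edge_weights, dtype=float)
    node_deg = H @ w             # weighted degree of each node
    edge_deg = H.sum(axis=0)     # number of nodes in each hyperedge
    inv_sqrt = np.zeros_like(node_deg)
    mask = node_deg > 0          # leave isolated nodes at zero instead of dividing by zero
    inv_sqrt[mask] = node_deg[mask] ** -0.5
    H_norm = inv_sqrt[:, None] * H                 # Dv^{-1/2} H
    A = (H_norm * (w / edge_deg)) @ H_norm.T       # Dv^{-1/2} H W De^{-1} H^T Dv^{-1/2}
    return A @ X @ Theta

# Hypothetical toy skeleton: joints 0-2 belong to person A, joints 3-5 to person B.
hyperedges = [
    (0, 1, 2),      # all of person A's joints
    (3, 4, 5),      # all of person B's joints
    (1, 2, 4, 5),   # an assumed cross-person "interaction" group
]
H = incidence_matrix(6, hyperedges)

rng = np.random.default_rng(0)
X = rng.normal(size=(6, 3))       # e.g. 3D coordinates per joint (random placeholder data)
Theta = rng.normal(size=(3, 4))   # learnable weights in a real model, random here
out = hypergraph_conv(X, H, Theta)
print(out.shape)  # (6, 4)
```

The third hyperedge is the point of the example: it ties four joints from two different people into one relation, which an ordinary graph could only approximate with several separate pairwise edges.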

The methodology proposed by the authors is underpinned by a robust training regime, involving extensive datasets that encapsulate a variety of two-person interactions. Utilizing such diverse training data not only enhances the model’s performance but also reduces bias and increases the versatility of the action recognition system. In contexts where the nuances of interaction differ dramatically, such a broad dataset enables the model to identify not only the actions themselves but also the context surrounding them, thereby improving its predictive accuracy.

Furthermore, the implementation of hypergraph convolutional networks introduces a unique approach to data representation. Unlike conventional methodologies that might isolate actions and actors, this approach offers a holistic view of interactions, neglecting neither the individuals nor their context. As a result, the network can discern subtle shifts in behavior based on cues such as posture, relative movement, and interaction duration, leading to more accurate classification of dynamic actions. For domains like security, where detecting suspicious collaborative behavior is crucial, this increased precision could translate into substantial enhancements in safety protocols.

An essential aspect of this research is the potential it holds for real-time applications. By combining powerful computational resources with efficient algorithms, the model aims to operate at speeds suitable for practical deployment. Action recognition with hypergraph convolutional networks could then run on the fly, allowing behaviors to be analyzed as they occur. This capability is critical for scenarios such as live surveillance feeds, where prompt identification of interactions can be pivotal in ensuring security and safety.

Equally notable is how this method addresses the issue of occlusion, a common problem in action recognition tasks. In many cases, one individual's actions are obscured by the other person, making accurate recognition difficult. The hypergraph model, however, is particularly adept at navigating such complexities by inferring across the hypergraph structure, using data from the visible portions of the scene to inform its understanding of occluded actions. Thus, the researchers are not just enhancing identification of overt actions but also paving the way for breakthroughs in recognizing concealed behaviors.

The implications of this research extend far beyond academic inquiry. In real-world applications, the deployment of efficient human action recognition systems can deliver significant benefits across diverse sectors. For instance, in healthcare, advanced recognition systems can assist caregivers in monitoring interactions between patients, leading to better support and intervention when required. Similarly, in the context of autonomous vehicles, understanding human actions can inform better navigation and interaction strategies, enhancing the safety and efficiency of transport systems.

Moreover, this research could catalyze advancements in human-computer interaction (HCI). Smart devices, equipped with sophisticated action recognition capabilities, could become responsive to user actions in a much more intuitive manner. Imagine technology that understands not just commands but also the context of human interactions, allowing for an enriched user experience that adjusts in real-time to the nuances of human behavior. Such advancements could redefine the boundaries of actionable intelligence embedded in our everyday gadgets.

As we gaze into the future, it becomes increasingly clear that the potential applications of this research are vast and varied. The trend of embedding intelligent systems into our daily lives creates a pressing need for enhanced action recognition methodologies that can adapt to evolving social dynamics. The shift towards smarter homes and environments necessitates frameworks that can seamlessly integrate into these ecosystems, providing users with an unprecedented level of interactivity and personalization.

Coupling these insights with continued advancements in processing technology and machine learning capabilities suggests that we are on the brink of a new era in action recognition research. With the groundwork laid by Cheng, Li, and Ding, we are not merely observing an incremental improvement in recognition tasks but rather contemplating transformative shifts that could redefine our interactions with technology and with each other.

The arrival of hypergraph convolutional networks represents one of those pivotal moments in research, prompting vital inquiries into the nature of human interactions and their implications in our increasingly interconnected world. As we dive deeper into the age of artificial intelligence, the findings from this study will undoubtedly spark new discussions, further explorations, and additional breakthroughs, propelling the field of human action recognition into even more innovative territories.

The blend of robust theoretical foundations with practical implications in this study signifies a remarkable achievement in the endeavor to understand human actions better. As we await the full publication of this research, it is evident that the work of Jiangtao Cheng, Wei Li, and Jinli Ding will resonate through various disciplines and industry applications, leading to a richer and more nuanced understanding of human interaction through the lens of artificial intelligence.

In conclusion, the future of action recognition seems bright, with hypergraph convolutional networks at the helm driving transformative changes. Researchers and practitioners alike stand to benefit from this robust methodology, as it proves to be a crucial advancement in our quest to navigate the complexities of human behavior within shared spaces. With every innovative leap, the opportunities for applied AI in real-world settings continue to expand, promising a future where smart systems enhance our understanding of action and interaction in unprecedented ways.

Subject of Research: Human Action Recognition

Article Title: Two-person interactive action recognition based on hypergraph convolutional networks.

Article References: Jiangtao, C., Li, W., Jinli, D. et al. Two-person interactive action recognition based on hypergraph convolutional networks. Discov Artif Intell (2026). https://doi.org/10.1007/s44163-025-00529-w

Image Credits: AI Generated

DOI: 10.1007/s44163-025-00529-w

Keywords: Hypergraph Convolutional Networks, Action Recognition, Two-person Interaction, Artificial Intelligence, Machine Learning, Human-computer Interaction.

Tags: artificial intelligence advancements, computational capabilities in AI, human action recognition challenges, human-computer interaction improvement, hypergraph convolutional networks, innovative action recognition techniques, interactive action recognition methods, Jiangtao Cheng research study, multi-entity relationship modeling, smart home technology integration, surveillance applications of AI, two-person action recognition