In a study published in the journal Scientific Reports, researcher K. Mao tackles the complexities of resource optimization in multi-access edge computing (MEC)-enabled heterogeneous networks (HetNets). Growing demand for efficient network slicing has prompted the search for new ways to optimize resource allocation, and this research applies multi-agent reinforcement learning (MARL) to the dynamic challenges that network slicing creates in MEC environments.

MEC is a transformative computing paradigm that moves data processing closer to the user, improving service delivery and reducing latency. As the number of connected devices surges, HetNets, which combine diverse access technologies and serve users with widely varying requirements, demand sophisticated management strategies. Mao’s study highlights the pressing need for new resource optimization techniques that can exploit the full potential of MEC.

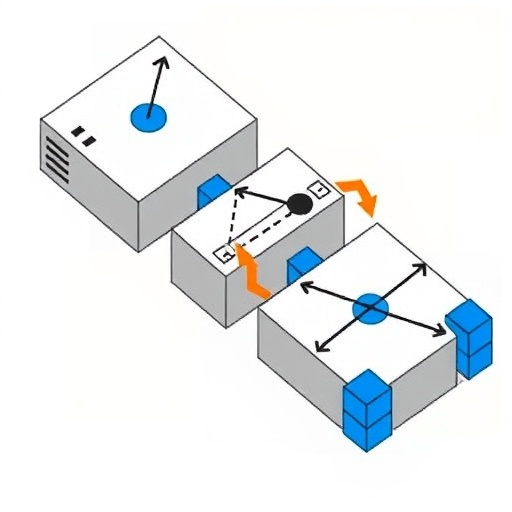

The core innovation of this research is the use of MARL as a framework for optimizing strategies in a resource game within MEC-enabled HetNets. By modeling the interaction among agents as a game, the approach lets agents learn from one another’s actions in real time and refine their resource allocation accordingly. This marks a significant shift from traditional optimization methods, which often struggle to adapt to the changing dynamics of network environments.
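To make the idea of agents learning within a resource game more concrete, here is a minimal Python sketch. It is not the algorithm from Mao’s paper; it assumes a deliberately toy setting in which three hypothetical slice agents repeatedly request bandwidth units from a shared pool, requests are scaled down proportionally when they exceed capacity, and each agent runs stateless, independent Q-learning on its own reward. The capacity, action set, utility function, and learning parameters are all illustrative assumptions.

```python
import math
import random

# Hypothetical discrete bandwidth requests (in units) each slice agent may make.
ACTIONS = (1, 2, 3, 4, 5)


def allocate(requests, capacity):
    """Grant every request if they fit; otherwise scale all requests down proportionally."""
    total = sum(requests)
    if total <= capacity:
        return [float(r) for r in requests]
    return [capacity * r / total for r in requests]


def reward(request, granted):
    """Assumed slice utility: diminishing returns on granted bandwidth
    minus a small penalty for demand that went unmet due to contention."""
    return math.log1p(granted) - 0.1 * (request - granted)


def train(n_agents=3, capacity=9.0, episodes=5000, alpha=0.1, epsilon=0.1, seed=0):
    """Independent learners in a repeated game: each agent keeps its own
    stateless Q-table and treats the other agents as part of the environment."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(n_agents)]
    for _ in range(episodes):
        # Epsilon-greedy action selection, made independently by each agent.
        choices = []
        for i in range(n_agents):
            if rng.random() < epsilon:
                choices.append(rng.randrange(len(ACTIONS)))
            else:
                choices.append(q[i].index(max(q[i])))
        requests = [ACTIONS[c] for c in choices]
        granted = allocate(requests, capacity)
        # Stateless Q-learning update: Q(a) <- Q(a) + alpha * (r - Q(a)).
        for i, c in enumerate(choices):
            r = reward(requests[i], granted[i])
            q[i][c] += alpha * (r - q[i][c])
    return q


def greedy_policy(q):
    """Each agent's currently preferred request after training."""
    return [ACTIONS[row.index(max(row))] for row in q]


if __name__ == "__main__":
    q_tables = train()
    policy = greedy_policy(q_tables)
    print("requests:", policy, "granted:", allocate(policy, 9.0))
```

Independent learners are the simplest MARL baseline: each agent adapts only through the rewards that other agents’ choices induce, which is enough to show how contention shapes learned strategies. The formulation in the paper itself may use a different game model, state representation, or learning algorithm.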

One of the primary challenges in MEC-enabled HetNets is managing resources such as bandwidth, computing power, and storage efficiently. Traditional resource allocation approaches often fall short when users are dynamically distributed and service requirements vary. By employing MARL, Mao’s research offers a solution that both improves resource optimization and adapts to changing network traffic.

Through extensive experiments, the study reports that MARL achieves higher resource allocation efficiency than classical methods. The findings indicate that the agents cooperate within a decentralized framework, producing flexible and robust resource distribution that adjusts to the specific demands of connected devices. This is pivotal in HetNets, where resource contention is common and can degrade service if not managed effectively.

The implications of this research extend to industries that rely on MEC to deliver real-time applications, including autonomous vehicles, smart cities, and IoT devices. Optimizing resource allocation in these contexts can significantly improve operational efficiency, reduce costs, and enhance user experience. By adopting MARL, network operators can manage resources sustainably in a way that evolves with user demand.

The study also examines the practical implications of deploying MARL-driven solutions in real-world network scenarios. The results suggest that such an approach could improve resource utilization across various applications and deliver more consistent quality of service. The transition is not without challenges, however: MARL demands substantial computational resources and an understanding of its underlying mechanics, which can be barriers for some organizations.

Mao’s work also highlights the importance of aligning advanced algorithms with network infrastructure. Integrating MARL into existing MEC frameworks requires collaboration among engineers, data scientists, and network managers, and developing such systems holistically creates conditions for rapid advances in network capabilities.

As the digital landscape continues to evolve, effective resource management becomes increasingly crucial. The findings from Mao’s study can serve as a catalyst for further research into adaptive learning systems that respond to unpredictable network demands. Future studies may refine the current algorithms or incorporate additional learning mechanisms to further improve resource optimization across diverse network scenarios.

The significance of this research extends beyond theoretical implications, providing a potential roadmap for future advancements in network management. Operationalizing MARL in MEC-enabled HetNets could set a new standard for how resources are managed, paving the way for smarter, more efficient networks ready to meet the challenges of tomorrow.

In conclusion, Mao’s exploration of multi-agent reinforcement learning as a solution for resource optimization in MEC-enabled HetNets is a vital contribution to the field of network management. By combining game-theoretic approaches with cutting-edge machine learning techniques, this research opens new avenues for enhanced efficiency and adaptability in resource allocation. As the need for streamlined network operation grows, studies like this will play a critical role in shaping the future of telecommunications.

Mao’s work not only addresses current challenges but also sets the stage for further innovation in resource management technologies, emphasizing the essential role of interdisciplinary collaboration and advanced algorithmic development in creating future-proof network solutions.

The convergence of MEC and MARL represents a forward-thinking shift in how we view and manage network resources, promising improvements that could redefine user experience and operational efficiencies in highly competitive environments.

Subject of Research: Optimization of resource allocation in MEC-enabled HetNets using multi-agent reinforcement learning.

Article Title: Multi-agent reinforcement learning driven resource game optimization for network slicing in MEC-enabled HetNets.

Article References:

Mao, K. Multi-agent reinforcement learning driven resource game optimization for network slicing in MEC-enabled HetNets. Sci Rep (2025). https://doi.org/10.1038/s41598-025-33190-5

Image Credits: AI Generated

DOI: 10.1038/s41598-025-33190-5

Keywords: Multi-agent reinforcement learning, resource optimization, network slicing, MEC, HetNets, machine learning.

Tags: adaptive strategies for HetNets, dynamic challenges in network slicing, edge computing resource management, efficient resource optimization techniques, game theory in network optimization, heterogeneous networks management, K. Mao’s research on network efficiency, MEC-enabled network innovations, multi-agent reinforcement learning applications, network slicing optimization, real-time learning in resource allocation, resource allocation in MEC